
Guide to Experimental Design | Overview, 5 steps & Examples

Published on December 3, 2019 by Rebecca Bevans. Revised on June 21, 2023.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. Doing so minimizes several types of research bias, particularly sampling bias, survivorship bias, and attrition bias as time passes. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.


You should begin with a specific research question. We will work with two research question examples, one from health sciences (phone use and sleep) and one from ecology (temperature and soil respiration).

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

| Research question | Independent variable | Dependent variable |
|---|---|---|
| Phone use and sleep | Minutes of phone use before sleep | Hours of sleep per night |
| Temperature and soil respiration | Air temperature just above the soil surface | CO₂ respired from soil |

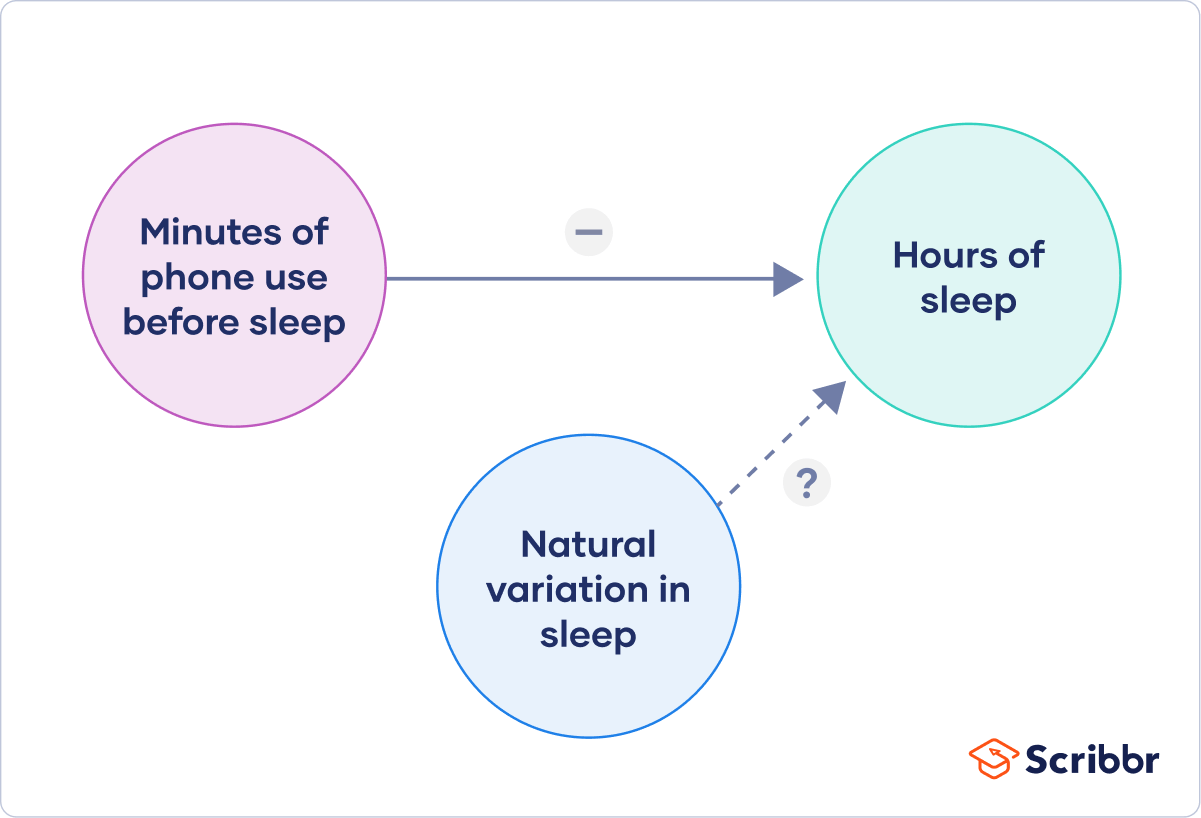

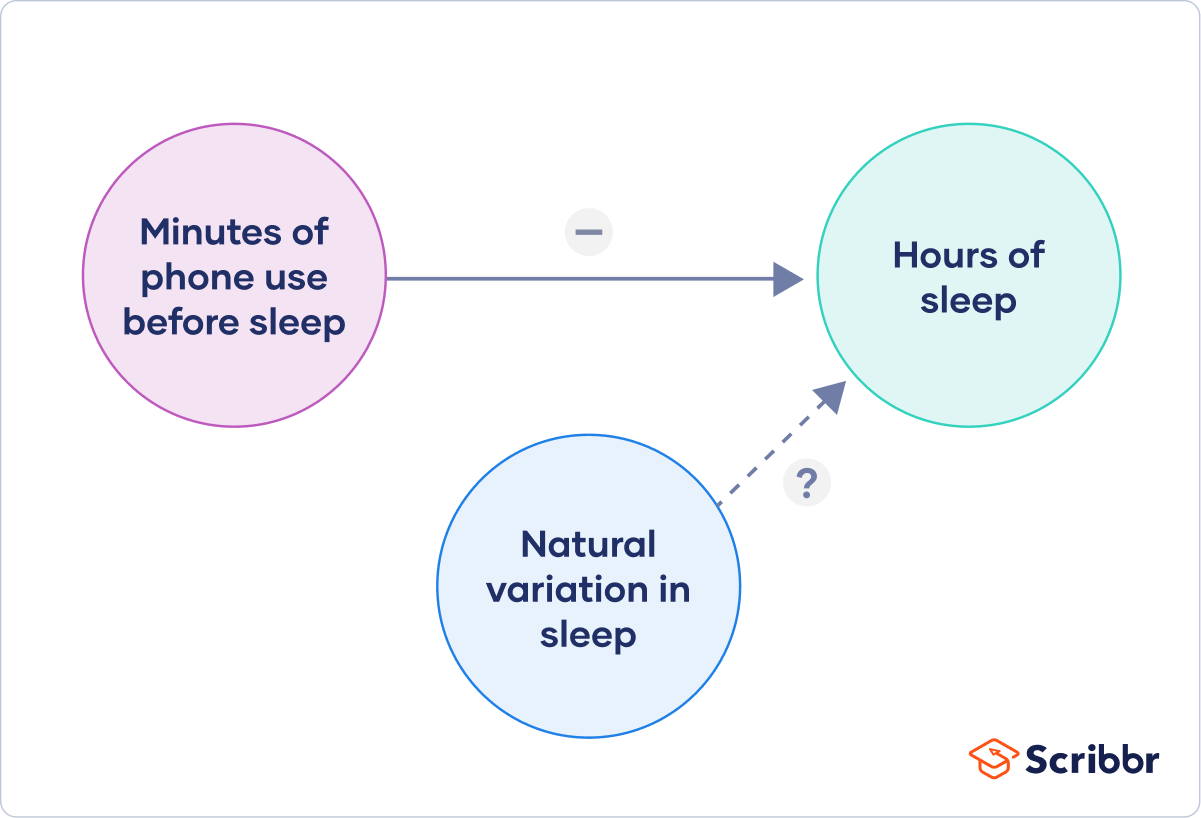

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

| Research question | Extraneous variable | How to control |
|---|---|---|
| Phone use and sleep | Natural variation in sleep patterns among individuals. | Measure the average difference between sleep with phone use and sleep without phone use rather than the average amount of sleep per treatment group. |
| Temperature and soil respiration | Soil moisture also affects respiration, and moisture can decrease with increasing temperature. | Monitor soil moisture and add water to make sure that soil moisture is consistent across all treatment plots. |
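The control strategy in the phone use row can be sketched in a few lines of Python. This is only a minimal illustration, assuming each participant is measured both with and without phone use; the participant IDs and sleep values are invented for the example.

```python
from statistics import mean

# Hypothetical data: hours of sleep per participant under each condition.
sleep_hours = {
    "p1": {"no_phone": 8.0, "phone": 7.5},
    "p2": {"no_phone": 6.0, "phone": 5.0},
    "p3": {"no_phone": 7.0, "phone": 7.0},
    "p4": {"no_phone": 9.0, "phone": 8.5},
}


def mean_paired_difference(data):
    """Average within-person change in sleep (phone minus no phone)."""
    return mean(d["phone"] - d["no_phone"] for d in data.values())


diff = mean_paired_difference(sleep_hours)
print(diff)  # -0.5
```

Because each difference is taken within one person, someone who always sleeps 6 hours and someone who always sleeps 9 hours contribute on the same footing, so individual variation in baseline sleep no longer obscures the effect.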

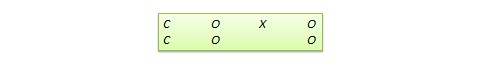

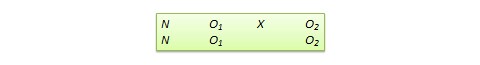

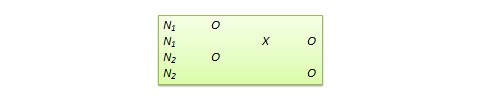

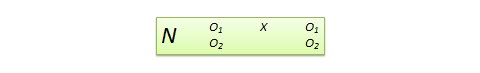

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.
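If you prefer to keep the diagram in a form you can check, one option is to record it as a signed graph. The sketch below is an assumption about how you might encode it, not part of the original method: the variable names and the `path_sign` helper are hypothetical, and the signs are the ones predicted above.

```python
# Signed edges: +1 means an increase in the source increases the target,
# -1 means an increase in the source decreases the target.
edges = {
    ("temperature", "soil_respiration"): +1,
    ("temperature", "soil_moisture"): -1,
    ("soil_moisture", "soil_respiration"): +1,
}


def path_sign(path, edges):
    """Multiply the signs along a path to get the expected net direction."""
    sign = 1
    for source, target in zip(path, path[1:]):
        sign *= edges[(source, target)]
    return sign


direct = path_sign(["temperature", "soil_respiration"], edges)
indirect = path_sign(["temperature", "soil_moisture", "soil_respiration"], edges)
print(direct, indirect)  # 1 -1
```

The opposite signs show why soil moisture has to be controlled: the indirect path through moisture works against the direct warming effect and could mask it.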


Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

| Research question | Null hypothesis (H0) | Alternate hypothesis (Ha) |
|---|---|---|
| Phone use and sleep | Phone use before sleep does not correlate with the amount of sleep a person gets. | Increasing phone use before sleep leads to a decrease in sleep. |
| Temperature and soil respiration | Air temperature does not correlate with soil respiration. | Increased air temperature leads to increased soil respiration. |
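To see how a null hypothesis like the ones above might eventually be tested, here is a rough sketch of a one-sided permutation test in Python. It is one possible analysis among many, not the one this guide prescribes; the function name and the sleep values are hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(control, treated, n_permutations=5000, seed=0):
    """One-sided p-value for 'the treated mean is lower than the control mean'."""
    rng = random.Random(seed)
    observed = mean(control) - mean(treated)
    pooled = list(control) + list(treated)
    n_control = len(control)
    hits = 0
    for _ in range(n_permutations):
        # Under the null hypothesis the group labels are interchangeable,
        # so reshuffle them and see how often the gap is at least as large.
        rng.shuffle(pooled)
        diff = mean(pooled[:n_control]) - mean(pooled[n_control:])
        if diff >= observed:
            hits += 1
    return (hits + 1) / (n_permutations + 1)


# Hypothetical hours of sleep for two treatment groups.
no_phone = [8.1, 7.9, 8.4, 7.6, 8.0, 8.3]
high_phone = [6.9, 7.2, 6.5, 7.0, 7.4, 6.8]
p = permutation_p_value(no_phone, high_phone)
print(p)
```

A small p-value means that a gap this large would rarely arise if phone use made no difference, which counts as evidence against the null hypothesis.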

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalized and applied to the broader world.

First, you may need to decide how widely to vary your independent variable.

In the soil respiration example, you could increase air temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results.

In the phone use example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).
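The difference between the two options can be made concrete with a short Python sketch. The function name, the sample values, and the 30-minute cut-off are all assumptions chosen for illustration, not recommended values.

```python
def phone_use_level(minutes, low_cutoff=30):
    """Recode minutes of phone use into a three-level factor.

    The 30-minute cut-off is an arbitrary illustration.
    """
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    if minutes == 0:
        return "none"
    return "low" if minutes <= low_cutoff else "high"


# Continuous measurements (hypothetical minutes of phone use per night).
nightly_minutes = [0, 12, 45, 30, 90]

# The same nights expressed as levels of a factor.
levels = [phone_use_level(m) for m in nightly_minutes]
print(levels)  # ['none', 'low', 'high', 'low', 'high']
```

Note that the recoding only goes one way: you can always turn minutes into levels later, but you cannot recover minutes from levels, so the continuous version keeps more information.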

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, which is the probability of detecting a real effect if one exists.
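The link between study size and statistical power can be illustrated with a small simulation. The sketch below makes several assumptions that are not from this guide: a true effect of half a standard deviation, normally distributed outcomes, and a normal approximation (cut-off 1.96) in place of an exact t-test.

```python
import random


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    m = sum(values) / len(values)
    var = sum((v - m) ** 2 for v in values) / (len(values) - 1)
    return m, var


def estimated_power(n_per_group, effect=0.5, n_sims=1000, seed=1):
    """Share of simulated experiments that detect a true difference of `effect` SDs."""
    rng = random.Random(seed)
    critical = 1.96  # two-sided 5% cut-off, normal approximation (assumption)
    detections = 0
    for _ in range(n_sims):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(effect, 1.0) for _ in range(n_per_group)]
        m_c, v_c = mean_and_variance(control)
        m_t, v_t = mean_and_variance(treated)
        standard_error = ((v_c + v_t) / n_per_group) ** 0.5
        if abs((m_t - m_c) / standard_error) > critical:
            detections += 1
    return detections / n_sims


power_by_n = {n: estimated_power(n) for n in (10, 50, 100)}
print(power_by_n)
```

With these assumptions, the share of simulated experiments that detect the effect climbs steeply as the group size grows, which is the pattern a formal power analysis would quantify before you recruit subjects.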

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomized design vs a randomized block design.

- A between-subjects design vs a within-subjects design.

Randomization

An experiment can be completely randomized or randomized within blocks (aka strata):

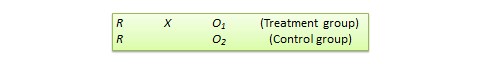

- In a completely randomized design, every subject is assigned to a treatment group at random.

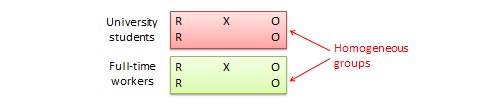

- In a randomized block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.

| Research question | Completely randomized design | Randomized block design |
|---|---|---|
| Phone use and sleep | Subjects are all randomly assigned a level of phone use using a random number generator. | Subjects are first grouped by age, and then phone use treatments are randomly assigned within these groups. |
| Temperature and soil respiration | Warming treatments are assigned to soil plots at random by using a number generator to generate map coordinates within the study area. | Soils are first grouped by average rainfall, and then treatment plots are randomly assigned within these groups. |
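The two randomization schemes for the phone use example can be sketched in Python as follows. The function names, subject IDs, and age groups are hypothetical, and dealing shuffled subjects out in turn is just one simple way to get equal group sizes.

```python
import random
from collections import defaultdict

TREATMENTS = ["none", "low", "high"]


def completely_randomized(subjects, treatments, rng):
    """Shuffle all subjects, then deal them out to treatments in turn."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomized_block(subjects, block_of, treatments, rng):
    """Randomize separately within each block (here: age group)."""
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of[s]].append(s)
    assignment = {}
    for members in blocks.values():
        assignment.update(completely_randomized(members, treatments, rng))
    return assignment


rng = random.Random(42)  # fixed seed so the assignment can be reproduced
subjects = [f"s{i}" for i in range(12)]
age_group = {s: ("under_30" if i < 6 else "30_plus") for i, s in enumerate(subjects)}

crd = completely_randomized(subjects, TREATMENTS, rng)
rbd = randomized_block(subjects, age_group, TREATMENTS, rng)
```

In the blocked version, every age group is guaranteed to contain each level of phone use equally often, whereas the completely randomized version only balances the treatments across the sample as a whole.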

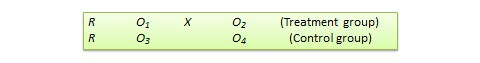

Sometimes randomization isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs. within-subjects

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

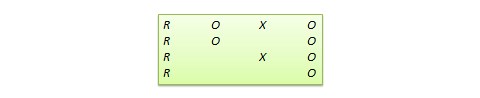

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomizing or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.

| Research question | Between-subjects (independent measures) design | Within-subjects (repeated measures) design |
|---|---|---|
| Phone use and sleep | Subjects are randomly assigned a level of phone use (none, low, or high) and follow that level of phone use throughout the experiment. | Subjects are assigned consecutively to zero, low, and high levels of phone use throughout the experiment, and the order in which they follow these treatments is randomized. |
| Temperature and soil respiration | Warming treatments are assigned to soil plots at random and the soils are kept at this temperature throughout the experiment. | Every plot receives each warming treatment (1, 3, 5, 8, and 10 °C above ambient temperatures) consecutively over the course of the experiment, and the order in which they receive these treatments is randomized. |
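For the within-subjects version of the phone use example, counterbalancing could look something like the Python sketch below. It assumes full counterbalancing (every possible order is used equally often); the function name and subject IDs are hypothetical.

```python
import random
from itertools import permutations


def counterbalanced_orders(subjects, treatments, seed=0):
    """Give each subject a treatment order, cycling through all possible orders."""
    rng = random.Random(seed)
    all_orders = list(permutations(treatments))
    rng.shuffle(all_orders)          # which order comes first is random
    shuffled_subjects = list(subjects)
    rng.shuffle(shuffled_subjects)   # which subject gets which order is random
    return {
        s: all_orders[i % len(all_orders)]
        for i, s in enumerate(shuffled_subjects)
    }


orders = counterbalanced_orders(
    [f"s{i}" for i in range(12)], ["none", "low", "high"]
)
```

Three treatments give six possible orders, so twelve subjects cover each order exactly twice. With the five warming levels in the soil example there would be 120 possible orders, so in practice you would probably randomize the order per plot or use a subset of orders instead.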

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimize research bias or error.

Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalized to turn them into measurable observations.

To measure hours of sleep, for example, you could:

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.
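If you go with self-reported times, you still need a rule for turning them into a number. The sketch below is one way to do that in Python, assuming participants report 24-hour "HH:MM" clock times and never sleep longer than 24 hours; the function name is hypothetical.

```python
from datetime import datetime, timedelta


def hours_of_sleep(bedtime, wake_time):
    """Hours between two 'HH:MM' clock times, allowing sleep to cross midnight."""
    start = datetime.strptime(bedtime, "%H:%M")
    end = datetime.strptime(wake_time, "%H:%M")
    if end <= start:
        # The wake time is on the following calendar day.
        end += timedelta(days=1)
    return (end - start).total_seconds() / 3600


print(hours_of_sleep("23:30", "07:00"))  # 7.5
print(hours_of_sleep("01:00", "08:15"))  # 7.25
```

Writing the rule down before you collect data makes it clear exactly what "hours of sleep" means in your study, for example that time spent lying awake in bed is counted as sleep under this definition.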

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.


Experimental design means planning a set of procedures to investigate a relationship between variables. To design a controlled experiment, you need:

- A testable hypothesis

- At least one independent variable that can be precisely manipulated

- At least one dependent variable that can be precisely measured

When designing the experiment, you decide:

- How you will manipulate the variable(s)

- How you will control for any potential confounding variables

- How many subjects or samples will be included in the study

- How subjects will be assigned to treatment levels

Experimental design is essential to the internal and external validity of your experiment.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word “between” means that you’re comparing different conditions between groups, while the word “within” means you’re comparing different conditions within the same group.

An experimental group, also known as a treatment group, receives the treatment whose effect researchers wish to study, whereas a control group does not. They should be identical in all other ways.

Cite this Scribbr article


Bevans, R. (2023, June 21). Guide to Experimental Design | Overview, 5 steps & Examples. Scribbr. Retrieved September 26, 2024, from https://www.scribbr.com/methodology/experimental-design/


Reference.com


What Is an Experimental Setup in Science?

In science, the experimental setup is the part of research in which the experimenter analyzes the effect of a specific variable. This setup is quite similar to the control setup; ideally, the only difference involves the variable that the experimenter wants to test in the current project.

Consider a scenario in which a researcher wants to determine whether scuffing a baseball with an emery board provides more distortion to the baseball’s flight than a dab or two of Vaseline. Both of these methods were used, primarily in the 1970s and 1980s, to help pitchers gain an advantage over batters. The researcher would engage the services of a pitcher, and after a warm-up period, would have the pitcher throw a set number of pitches, such as 10 fastballs and 10 curve balls with no doctoring to the baseball at all. This is the control setup.

Next, the pitcher would use an emery board to scuff the surface of the ball. These pitches should take place in the same session and at the same location as the control pitches to keep the environmental factors the same. The first experimental setup would involve 10 fastballs and 10 curve balls with the scuffed baseball. The second experimental setup would repeat the 10 fastballs and 10 curve balls with a ball that has some Vaseline on it. Comparing observations of the ball's flight across the three setups would then show which method distorts it more. The observer could be a potential batter, someone standing behind the catcher, or the catcher himself.



A Quick Guide to Experimental Design | 5 Steps & Examples

Published on 11 April 2022 by Rebecca Bevans. Revised on 5 December 2022.


Experimental designs are a set of procedures that you plan in order to examine the relationship between variables that interest you.

To design a successful experiment, first identify:

- A testable hypothesis

- One or more independent variables that you will manipulate

- One or more dependent variables that you will measure

When designing the experiment, first decide:

- How your variable(s) will be manipulated

- How you will control for any potential confounding or lurking variables

- How many subjects you will include

- How you will assign treatments to your subjects

The key difference between observational studies and experiments is that, done correctly, an observational study will never influence the responses or behaviours of participants. Experimental designs will have a treatment condition applied to at least a portion of participants.


Cite this Scribbr article


Bevans, R. (2022, December 05). A Quick Guide to Experimental Design | 5 Steps & Examples. Scribbr. Retrieved 23 September 2024, from https://www.scribbr.co.uk/research-methods/guide-to-experimental-design/


19+ Experimental Design Examples (Methods + Types)

Ever wondered how scientists discover new medicines, psychologists learn about behavior, or even how marketers figure out what kind of ads you like? Well, they all have something in common: they use a special plan or recipe called an "experimental design."

Imagine you're baking cookies. You can't just throw random amounts of flour, sugar, and chocolate chips into a bowl and hope for the best. You follow a recipe, right? Scientists and researchers do something similar. They follow a "recipe" called an experimental design to make sure their experiments are set up in a way that the answers they find are meaningful and reliable.

Experimental design is the roadmap researchers use to answer questions. It's a set of rules and steps that researchers follow to collect information, or "data," in a way that is fair, accurate, and makes sense.

Long ago, people didn't have detailed game plans for experiments. They often just tried things out and saw what happened. But over time, people got smarter about this. They started creating structured plans—what we now call experimental designs—to get clearer, more trustworthy answers to their questions.

In this article, we'll take you on a journey through the world of experimental designs. We'll talk about the different types, or "flavors," of experimental designs, where they're used, and even give you a peek into how they came to be.

What Is Experimental Design?

Alright, before we dive into the different types of experimental designs, let's get crystal clear on what experimental design actually is.

Imagine you're a detective trying to solve a mystery. You need clues, right? Well, in the world of research, experimental design is like the roadmap that helps you find those clues. It's like the game plan in sports or the blueprint when you're building a house. Just like you wouldn't start building without a good blueprint, researchers won't start their studies without a strong experimental design.

So, why do we need experimental design? Think about baking a cake. If you toss ingredients into a bowl without measuring, you'll end up with a mess instead of a tasty dessert.

Similarly, in research, if you don't have a solid plan, you might get confusing or incorrect results. A good experimental design helps you ask the right questions (think critically), decide what to measure (come up with an idea), and figure out how to measure it (test it). It also helps you consider things that might mess up your results, like outside influences you hadn't thought of.

For example, let's say you want to find out if listening to music helps people focus better. Your experimental design would help you decide things like: Who are you going to test? What kind of music will you use? How will you measure focus? And, importantly, how will you make sure that it's really the music affecting focus and not something else, like the time of day or whether someone had a good breakfast?

In short, experimental design is the master plan that guides researchers through the process of collecting data, so they can answer questions in the most reliable way possible. It's like the GPS for the journey of discovery!

History of Experimental Design

Around 350 BCE, people like Aristotle were trying to figure out how the world works, but they mostly just thought really hard about things. They didn't test their ideas much. So while they were super smart, their methods weren't always the best for finding out the truth.

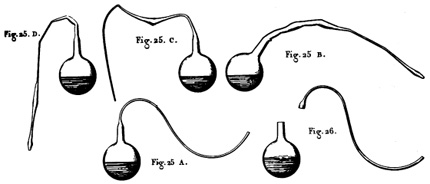

Fast forward to the Renaissance (14th to 17th centuries), a time of big changes and lots of curiosity. People like Galileo started to experiment by actually doing tests, like rolling balls down inclined planes to study motion. Galileo's work was cool because he combined thinking with doing. He'd have an idea, test it, look at the results, and then think some more. This approach was a lot more reliable than just sitting around and thinking.

Now, let's zoom ahead to the 19th century. This is when people like Francis Galton, an English polymath, started to get really systematic about measurement and data. Galton was obsessed with measuring things. Seriously, he even tried to measure how good-looking people were! His work helped create the foundations for a more organized approach to experiments.

Next stop: the early 20th century. Enter Ronald A. Fisher, a brilliant British statistician. Fisher was a game-changer. He came up with ideas that are like the bread and butter of modern experimental design.

Fisher didn't invent the "control group" (a group of people or things that don't get the treatment you're testing, so you can compare them to those who do), but he showed how to use one rigorously. His big contribution was "randomization," which means assigning people or things to different groups by chance, like drawing names out of a hat. This keeps the groups comparable, so a difference in results can be credited to the treatment rather than to who ended up in which group.

Around the same time, American psychologists like John B. Watson and B.F. Skinner were developing "behaviorism." They focused on studying things that they could directly observe and measure, like actions and reactions.

Skinner even built boxes, called Skinner Boxes, to test how animals like pigeons and rats learn. Watson performed a very controversial experiment, the Little Albert experiment, that helped describe behavior through conditioning: in other words, how people learn to behave the way they do. Their work helped shape how psychologists design experiments today.

In the later part of the 20th century and into our time, computers have totally shaken things up. Researchers now use super powerful software to help design their experiments and crunch the numbers.

With computers, they can simulate complex experiments before they even start, which helps them predict what might happen. This is especially helpful in fields like medicine, where getting things right can be a matter of life and death.

Also, did you know that experimental designs aren't just for scientists in labs? They're used by people in all sorts of jobs, like marketing, education, and even video game design! Yes, someone probably ran an experiment to figure out what makes a game super fun to play.

So there you have it—a quick tour through the history of experimental design, from Aristotle's deep thoughts to Fisher's groundbreaking ideas, and all the way to today's computer-powered research. These designs are the recipes that help people from all walks of life find answers to their big questions.

Key Terms in Experimental Design

Before we dig into the different types of experimental designs, let's get comfy with some key terms. Understanding these terms will make it easier for us to explore the various types of experimental designs that researchers use to answer their big questions.

Independent Variable: This is what you change or control in your experiment to see what effect it has. Think of it as the "cause" in a cause-and-effect relationship. For example, if you're studying whether different types of music help people focus, the kind of music is the independent variable.

Dependent Variable: This is what you're measuring to see the effect of your independent variable. In our music and focus experiment, how well people focus is the dependent variable. It's what "depends" on the kind of music played.

Control Group: This is a group of people who don't get the special treatment or change you're testing. They help you see what happens when the independent variable is not applied. If you're testing whether a new medicine works, the control group would take a fake pill, called a placebo, instead of the real medicine.

Experimental Group: This is the group that gets the special treatment or change you're interested in. Going back to our medicine example, this group would get the actual medicine to see if it has any effect.

Randomization: This is like shaking things up in a fair way. You randomly put people into the control or experimental group so that each group is a good mix of different kinds of people. This helps make the results more reliable.

Sample: This is the group of people you're studying. They're a "sample" of a larger group that you're interested in. For instance, if you want to know how teenagers feel about a new video game, you might study a sample of 100 teenagers.

Bias: This is anything that might tilt your experiment one way or another without you realizing it. Like if you're testing a new kind of dog food and you only test it on poodles, that could create a bias because maybe poodles just really like that food and other breeds don't.

Data: This is the information you collect during the experiment. It's like the treasure you find on your journey of discovery!

Replication: This means doing the experiment more than once to make sure your findings hold up. It's like double-checking your answers on a test.

Hypothesis: This is your educated guess about what will happen in the experiment. It's like predicting the end of a movie based on the first half.

Steps of Experimental Design

Alright, let's say you're all fired up and ready to run your own experiment. Cool! But where do you start? Well, designing an experiment is a bit like planning a road trip. There are some key steps you've got to take to make sure you reach your destination. Let's break it down:

- Ask a Question : Before you hit the road, you've got to know where you're going. Same with experiments. You start with a question you want to answer, like "Does eating breakfast really make you do better in school?"

- Do Some Homework : Before you pack your bags, you look up the best places to visit, right? In science, this means reading up on what other people have already discovered about your topic.

- Form a Hypothesis : This is your educated guess about what you think will happen. It's like saying, "I bet this route will get us there faster."

- Plan the Details : Now you decide what kind of car you're driving (your experimental design), who's coming with you (your sample), and what snacks to bring (your variables).

- Randomization : Remember, this is like shuffling a deck of cards. You want to mix up who goes into your control and experimental groups to make sure it's a fair test.

- Run the Experiment : Finally, the rubber hits the road! You carry out your plan, making sure to collect your data carefully.

- Analyze the Data : Once the trip's over, you look at your photos and decide which ones are keepers. In science, this means looking at your data to see what it tells you.

- Draw Conclusions : Based on your data, did you find an answer to your question? This is like saying, "Yep, that route was faster," or "Nope, we hit a ton of traffic."

- Share Your Findings : After a great trip, you want to tell everyone about it, right? Scientists do the same by publishing their results so others can learn from them.

- Do It Again? : Sometimes one road trip just isn't enough. In the same way, scientists often repeat their experiments to make sure their findings are solid.

So there you have it! Those are the basic steps you need to follow when you're designing an experiment. Each step helps make sure that you're setting up a fair and reliable way to find answers to your big questions.

Let's get into examples of experimental designs.

1) True Experimental Design

In the world of experiments, the True Experimental Design is like the superstar quarterback everyone talks about. Born out of the early 20th-century work of statisticians like Ronald A. Fisher, this design is all about control, precision, and reliability.

Researchers carefully pick an independent variable to manipulate (remember, that's the thing they're changing on purpose) and measure the dependent variable (the effect they're studying). Then comes the magic trick—randomization. By randomly putting participants into either the control or experimental group, scientists make sure their experiment is as fair as possible.

No sneaky biases here!
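To make the "magic trick" concrete, here is a minimal sketch of random assignment in Python. The participant IDs, the `randomly_assign` helper, and the seed value are all made up for illustration; real studies often use more elaborate schemes (such as blocked or stratified randomization), so treat this as the simplest possible version.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (nearly) equal size."""
    rng = random.Random(seed)  # a fixed seed makes the assignment reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "experimental": shuffled[half:]}


# Hypothetical participant IDs: P01 through P10
participants = [f"P{i:02d}" for i in range(1, 11)]
groups = randomly_assign(participants, seed=42)

print("Control:     ", groups["control"])
print("Experimental:", groups["experimental"])
```

Because chance alone decides who lands in which group, nobody (including the researcher) can steer certain kinds of people toward the treatment.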

True Experimental Design Pros

The pros of True Experimental Design are like the perks of a VIP ticket at a concert: you get the best and most trustworthy results. Because everything is controlled and randomized, you can feel pretty confident that the results aren't just a fluke.

True Experimental Design Cons

However, there's a catch. Sometimes, it's really tough to set up these experiments in a real-world situation. Imagine trying to control every single detail of your day, from the food you eat to the air you breathe. Not so easy, right?

True Experimental Design Uses

The fields that get the most out of True Experimental Designs are those that need super reliable results, like medical research.

When scientists were developing COVID-19 vaccines, they used this design to run clinical trials. They had control groups that received a placebo (a harmless substance with no effect) and experimental groups that got the actual vaccine. Then they measured how many people in each group got sick. By comparing the two, they could say, "Yep, this vaccine works!"
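As a rough illustration of that comparison, the sketch below computes vaccine efficacy as one minus the ratio of the two groups' attack rates (the share of each group that got sick). The case counts and group sizes are invented for the example and do not come from any real trial, and the function names are just illustrative.

```python
def attack_rate(cases, group_size):
    """Share of a group that got sick."""
    return cases / group_size


def vaccine_efficacy(cases_vaccine, n_vaccine, cases_placebo, n_placebo):
    """Efficacy = 1 - (attack rate with vaccine / attack rate with placebo)."""
    rate_vaccine = attack_rate(cases_vaccine, n_vaccine)
    rate_placebo = attack_rate(cases_placebo, n_placebo)
    return 1 - rate_vaccine / rate_placebo


# Made-up numbers: 10 cases among 10,000 vaccinated, 100 among 10,000 on placebo
efficacy = vaccine_efficacy(10, 10_000, 100, 10_000)
print(f"Estimated efficacy: {efficacy:.0%}")  # prints "Estimated efficacy: 90%"
```

A real trial would also report a confidence interval around this number, since a small difference between groups could be down to chance.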

So next time you read about a groundbreaking discovery in medicine or technology, chances are a True Experimental Design was the VIP behind the scenes, making sure everything was on point. It's been the go-to for rigorous scientific inquiry for nearly a century, and it's not stepping off the stage anytime soon.

2) Quasi-Experimental Design

So, let's talk about the Quasi-Experimental Design. Think of this one as the cool cousin of True Experimental Design. It wants to be just like its famous relative, but it's a bit more laid-back and flexible. You'll find quasi-experimental designs when it's tricky to set up a full-blown True Experimental Design with all the bells and whistles.

Quasi-experiments still play with an independent variable, just like their stricter cousins. The big difference? They don't use randomization. It's like wanting to divide a bag of jelly beans equally between your friends, but you can't quite do it perfectly.

In real life, it's often not possible or ethical to randomly assign people to different groups, especially when dealing with sensitive topics like education or social issues. And that's where quasi-experiments come in.

Quasi-Experimental Design Pros

Even though they lack full randomization, quasi-experimental designs are like the Swiss Army knives of research: versatile and practical. They're especially popular in fields like education, sociology, and public policy.

For instance, when researchers first wanted to figure out if the Head Start program, aimed at giving young kids a "head start" in school, was effective, many early evaluations used quasi-experimental designs. Instead of randomly assigning kids to attend or not, they compared kids who had enrolled with similar kids who hadn't.

Quasi-Experimental Design Cons

Of course, quasi-experiments come with their own bag of pros and cons. On the plus side, they're easier to set up and often cheaper than true experiments. But the flip side is that they're not as rock-solid in their conclusions. Because the groups aren't randomly assigned, there's always that little voice saying, "Hey, are we missing something here?"

Quasi-Experimental Design Uses

Quasi-Experimental Design gained traction in the mid-20th century. Researchers were grappling with real-world problems that didn't fit neatly into a laboratory setting. Plus, as society became more aware of ethical considerations, the need for flexible designs increased. So, the quasi-experimental approach was like a breath of fresh air for scientists wanting to study complex issues without a laundry list of restrictions.

In short, if True Experimental Design is the superstar quarterback, Quasi-Experimental Design is the versatile player who can adapt and still make significant contributions to the game.

3) Pre-Experimental Design

Now, let's talk about the Pre-Experimental Design. Imagine it as the beginner's skateboard you get before you try out for all the cool tricks. It has wheels, it rolls, but it's not built for the professional skatepark.

Similarly, pre-experimental designs give researchers a starting point. They let you dip your toes in the water of scientific research without diving in head-first.

So, what's the deal with pre-experimental designs?

Pre-Experimental Designs are the basic, no-frills versions of experiments. Researchers still mess around with an independent variable and measure a dependent variable, but they skip over the whole randomization thing and often don't even have a control group.

It's like baking a cake but forgetting the frosting and sprinkles; you'll get some results, but they might not be as complete or reliable as you'd like.

Pre-Experimental Design Pros

Why use such a simple setup? Because sometimes, you just need to get the ball rolling. Pre-experimental designs are great for quick-and-dirty research when you're short on time or resources. They give you a rough idea of what's happening, which you can use to plan more detailed studies later.

A good example of this is early studies on the effects of screen time on kids. Researchers couldn't control every aspect of a child's life, but they could easily ask parents to track how much time their kids spent in front of screens and then look for trends in behavior or school performance.

Pre-Experimental Design Cons

But here's the catch: pre-experimental designs are like that first draft of an essay. It helps you get your ideas down, but you wouldn't want to turn it in for a grade. Because these designs lack the rigorous structure of true or quasi-experimental setups, they can't give you rock-solid conclusions. They're more like clues or signposts pointing you in a certain direction.

Pre-Experimental Design Uses

This type of design became popular in the early stages of various scientific fields. Researchers used them to scratch the surface of a topic, generate some initial data, and then decide if it's worth exploring further. In other words, pre-experimental designs were the stepping stones that led to more complex, thorough investigations.

So, while Pre-Experimental Design may not be the star player on the team, it's like the practice squad that helps everyone get better. It's the starting point that can lead to bigger and better things.

4) Factorial Design

Now, buckle up, because we're moving into the world of Factorial Design, the multi-tasker of the experimental universe.

Imagine juggling not just one, but multiple balls in the air—that's what researchers do in a factorial design.

In Factorial Design, researchers are not satisfied with just studying one independent variable. Nope, they want to study two or more at the same time to see how they interact.

It's like cooking with several spices to see how they blend together to create unique flavors.

Factorial Design became much more practical with the rise of computers. Why? Because this design produces a lot of data, and computers are the number crunchers that help make sense of it all. So, thanks to our silicon friends, researchers can study complicated questions like, "How do diet AND exercise together affect weight loss?" instead of looking at just one of those factors.
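To see what "two or more at the same time" means in practice, here is a small sketch that lists every condition in a 2x2 factorial design for the diet-and-exercise question. The level names ("usual diet", "daily exercise", and so on) are assumptions made up for this example.

```python
from itertools import product

# Two independent variables (factors), each with two hypothetical levels
factors = {
    "diet": ["usual diet", "low-calorie diet"],
    "exercise": ["no exercise", "daily exercise"],
}

# Every combination of levels is one experimental condition (a "cell")
conditions = [dict(zip(factors, levels)) for levels in product(*factors.values())]

for number, condition in enumerate(conditions, start=1):
    print(number, condition)
```

Two factors with two levels each give four conditions. Add a third two-level factor and you are up to eight, which is why these designs grow quickly.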

Factorial Design Pros

This design's main selling point is its ability to explore interactions between variables. For instance, maybe a new study drug works really well for young people but not so great for older adults. A factorial design could reveal that age is a crucial factor, something you might miss if you only studied the drug's effectiveness in general. It's like being a detective who looks for clues not just in one room but throughout the entire house.
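Here is a minimal sketch of how that kind of interaction shows up in the numbers. The cell means below are invented "improvement scores" for a drug-by-age design, not real data. The idea is to work out the drug's effect separately in each age group and then compare the two effects.

```python
# Hypothetical average improvement scores for each cell of a 2x2 design
cell_means = {
    ("young", "placebo"): 2.0,
    ("young", "drug"): 10.0,
    ("older", "placebo"): 2.0,
    ("older", "drug"): 4.0,
}

# Effect of the drug within each age group (drug minus placebo)
effect_young = cell_means[("young", "drug")] - cell_means[("young", "placebo")]
effect_older = cell_means[("older", "drug")] - cell_means[("older", "placebo")]

# If the drug worked equally well at every age, this difference would be near zero
interaction = effect_young - effect_older

print("Drug effect, young adults:", effect_young)
print("Drug effect, older adults:", effect_older)
print("Interaction (difference between the effects):", interaction)
```

In a real analysis, researchers would test whether an interaction this size could be due to chance, typically with a two-way ANOVA or a regression model.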

Factorial Design Cons

However, factorial designs have their own bag of challenges. First off, they can be pretty complicated to set up and run. Imagine coordinating a four-way intersection with lots of cars coming from all directions—you've got to make sure everything runs smoothly, or you'll end up with a traffic jam. Similarly, researchers need to carefully plan how they'll measure and analyze all the different variables.

Factorial Design Uses

Factorial designs are widely used in psychology to untangle the web of factors that influence human behavior. They're also popular in fields like marketing, where companies want to understand how different aspects like price, packaging, and advertising influence a product's success.

And speaking of success, the factorial design has been a hit since statisticians like Ronald A. Fisher (yep, him again!) expanded on it in the early-to-mid 20th century. It offered a more nuanced way of understanding the world, proving that sometimes, to get the full picture, you've got to juggle more than one ball at a time.

So, if True Experimental Design is the quarterback and Quasi-Experimental Design is the versatile player, Factorial Design is the strategist who sees the entire game board and makes moves accordingly.

5) Longitudinal Design

Alright, let's take a step into the world of Longitudinal Design. Picture it as the grand storyteller, the kind who doesn't just tell you about a single event but spins an epic tale that stretches over years or even decades. This design isn't about quick snapshots; it's about capturing the whole movie of someone's life or a long-running process.

You know how you might take a photo every year on your birthday to see how you've changed? Longitudinal Design is kind of like that, but for scientific research.

With Longitudinal Design, instead of measuring something just once, researchers come back again and again, sometimes over many years, to see how things are going. This helps them understand not just what's happening, but why it's happening and how it changes over time.

This design really started to shine in the latter half of the 20th century, when researchers began to realize that some questions can't be answered in a hurry. Think about studies that look at how kids grow up, or research on how a certain medicine affects you over a long period. These aren't things you can rush.

The famous Framingham Heart Study, started in 1948, is a prime example. It has been following the heart health of residents of Framingham, Massachusetts, for decades, and the findings have shaped what we know about heart disease.

Longitudinal Design Pros

So, what's to love about Longitudinal Design? First off, it's the go-to for studying change over time, whether that's how people age or how a forest recovers from a fire.

Longitudinal Design Cons

But it's not all sunshine and rainbows. Longitudinal studies take a lot of patience and resources. Plus, keeping track of participants over many years can be like herding cats—difficult and full of surprises.

Longitudinal Design Uses

Despite these challenges, longitudinal studies have been key in fields like psychology, sociology, and medicine. They provide the kind of deep, long-term insights that other designs just can't match.

So, if the True Experimental Design is the superstar quarterback, the Quasi-Experimental Design is the flexible athlete, and the Factorial Design is the strategist, then the Longitudinal Design is the wise elder who has seen it all and has stories to tell.

6) Cross-Sectional Design

Now, let's flip the script and talk about Cross-Sectional Design, the polar opposite of the Longitudinal Design. If Longitudinal is the grand storyteller, think of Cross-Sectional as the snapshot photographer. It captures a single moment in time, like a selfie that you take to remember a fun day. Researchers using this design collect all their data at one point, providing a kind of "snapshot" of whatever they're studying.

In a Cross-Sectional Design, researchers look at multiple groups all at the same time to see how they're different or similar.

This design rose to popularity in the mid-20th century, mainly because it's so quick and efficient. Imagine wanting to know how people of different ages feel about a new video game. Instead of waiting for years to see how opinions change, you could just ask people of all ages what they think right now. That's Cross-Sectional Design for you—fast and straightforward.

You'll find this type of research everywhere from marketing studies to healthcare. For instance, you might have heard about surveys asking people what they think about a new product or political issue. Those are usually cross-sectional studies, aimed at getting a quick read on public opinion.

Cross-Sectional Design Pros

So, what's the big deal with Cross-Sectional Design? Well, it's the go-to when you need answers fast and don't have the time or resources for a more complicated setup.

Cross-Sectional Design Cons

Remember, speed comes with trade-offs. While you get your results quickly, those results are stuck in time. They can't tell you how things change or why they're changing, just what's happening right now.

Cross-Sectional Design Uses

Also, because they're so quick and simple, cross-sectional studies often serve as the first step in research. They give scientists an idea of what's going on so they can decide if it's worth digging deeper. In that way, they're a bit like a movie trailer, giving you a taste of the action to see if you're interested in seeing the whole film.

So, in our lineup of experimental designs, if True Experimental Design is the superstar quarterback and Longitudinal Design is the wise elder, then Cross-Sectional Design is like the speedy running back—fast, agile, but not designed for long, drawn-out plays.

7) Correlational Design

Next on our roster is the Correlational Design, the keen observer of the experimental world. Imagine this design as the person at a party who loves people-watching. They don't interfere or get involved; they just observe and take mental notes about what's going on.

In a correlational study, researchers don't change or control anything; they simply observe and measure how two variables relate to each other.

The correlational design has roots in the early days of psychology and sociology. Pioneers like Sir Francis Galton used it to study how qualities like intelligence or height could be related within families.

This design is all about asking, "Hey, when this thing happens, does that other thing usually happen too?" For example, researchers might study whether students who have more study time get better grades or whether people who exercise more have lower stress levels.
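One common way to put a number on "does that other thing usually happen too?" is the Pearson correlation coefficient, which runs from -1 to +1. The sketch below computes it by hand for the study-time example. The hours and grades are invented, and `pearson_r` is just an illustrative helper name.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation: +1 is a perfect positive link, -1 a perfect negative one."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How much the two variables move together
    covariation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # How much each variable moves on its own
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    return covariation / sqrt(spread_x * spread_y)


# Hypothetical data: weekly study hours and exam grades for six students
study_hours = [1, 2, 3, 4, 5, 6]
grades = [58, 65, 63, 74, 80, 85]

r = pearson_r(study_hours, grades)
print(f"Correlation between study time and grades: {r:.2f}")
```

A value close to +1 here says the two tend to rise together. It says nothing about whether studying is what causes the better grades.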

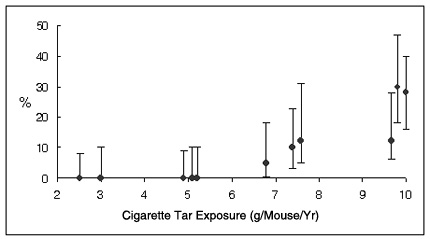

One of the most famous correlational studies you might have heard of is the link between smoking and lung cancer. Back in the mid-20th century, researchers started noticing that people who smoked a lot also seemed to get lung cancer more often. They couldn't say smoking caused cancer—that would require a true experiment—but the strong correlation was a red flag that led to more research and eventually, health warnings.

Correlational Design Pros

This design is great at showing whether two (or more) things are related, and how strongly. Correlational designs can make the case that more detailed research is needed on a topic. They can help us see patterns or possible causes for things that we otherwise might not have noticed.

Correlational Design Cons

But here's where you need to be careful: correlational designs can be tricky. Just because two things are related doesn't mean one causes the other. That's like saying, "Every time I wear my lucky socks, my team wins." Well, it's a fun thought, but those socks aren't really controlling the game.

Correlational Design Uses

Despite this limitation, correlational designs are popular in psychology, economics, and epidemiology, to name a few fields. They're often the first step in exploring a possible relationship between variables. Once a strong correlation is found, researchers may decide to conduct more rigorous experimental studies to examine cause and effect.

So, if the True Experimental Design is the superstar quarterback, the Longitudinal Design is the wise elder, the Factorial Design is the strategist, and the Cross-Sectional Design is the speedster, then the Correlational Design is the clever scout, identifying interesting patterns but leaving the heavy lifting of proving cause and effect to the other types of designs.

8) Meta-Analysis

Last but not least, let's talk about Meta-Analysis, the librarian of experimental designs.

If other designs are all about creating new research, Meta-Analysis is about gathering up everyone else's research, sorting it, and figuring out what it all means when you put it together.

Imagine a jigsaw puzzle where each piece is a different study. Meta-Analysis is the process of fitting all those pieces together to see the big picture.

The concept of Meta-Analysis started to take shape in the late 20th century, when computers became powerful enough to handle massive amounts of data. It was like someone handed researchers a super-powered magnifying glass, letting them examine multiple studies at the same time to find common trends or results.

You might have heard of the Cochrane Reviews in healthcare. These are systematic reviews, many of which include meta-analyses, that help doctors and policymakers figure out what treatments work best based on all the research that's been done.

For example, if ten different studies show that a certain medicine helps lower blood pressure, a meta-analysis would combine their results, usually giving more weight to the larger and more precise studies, to estimate how big the effect really is.
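Here is a minimal sketch of one standard way to pool results, the fixed-effect inverse-variance method, where each study is weighted by one over its squared standard error. To keep it short it uses three studies instead of ten, and the blood pressure changes and standard errors are invented for illustration.

```python
from math import sqrt


def pool_fixed_effect(estimates, standard_errors):
    """Combine study estimates, weighting each by 1 / (standard error squared)."""
    weights = [1 / se**2 for se in standard_errors]  # precise studies count more
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = sqrt(1 / total_weight)
    return pooled, pooled_se


# Hypothetical change in systolic blood pressure (mmHg) from three studies
estimates = [-5.0, -7.0, -6.0]
standard_errors = [2.0, 1.0, 2.0]

pooled, pooled_se = pool_fixed_effect(estimates, standard_errors)
print(f"Pooled change: {pooled:.1f} mmHg (standard error {pooled_se:.2f})")
```

The pooled standard error comes out smaller than that of any single study, which is the sense in which combining studies gives a more precise answer. Many published meta-analyses use a random-effects model instead, which allows the true effect to differ from study to study.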

Meta-Analysis Pros

The beauty of Meta-Analysis is that it can provide really strong evidence. Instead of relying on one study, you're looking at the whole landscape of research on a topic.

Meta-Analysis Cons

However, it does have some downsides. For one, Meta-Analysis is only as good as the studies it includes. If those studies are flawed, the meta-analysis will be too. It's like baking a cake: if you use bad ingredients, it doesn't matter how good your recipe is—the cake won't turn out well.

Meta-Analysis Uses

Despite these challenges, meta-analyses are highly respected and widely used in many fields like medicine, psychology, and education. They help us make sense of a world that's bursting with information by showing us the big picture drawn from many smaller snapshots.

So, in our all-star lineup, True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, and Correlational Design is the scout. That makes Meta-Analysis the coach, using insights from everyone else's plays to come up with the best game plan.

9) Non-Experimental Design

Now, let's talk about a player who's a bit of an outsider on this team of experimental designs—the Non-Experimental Design. Think of this design as the commentator or the journalist who covers the game but doesn't actually play.

In a Non-Experimental Design, researchers are like reporters gathering facts, but they don't interfere or change anything. They're simply there to describe and analyze.

Non-Experimental Design Pros

So, what's the deal with Non-Experimental Design? Its strength is in description and exploration. It's really good for studying things as they are in the real world, without changing any conditions.

Non-Experimental Design Cons

Because a non-experimental design doesn't manipulate variables, it can't prove cause and effect. It's like a weather reporter: they can tell you it's raining, but they can't tell you why it's raining.

And since researchers aren't controlling variables, it's hard to rule out other explanations for what they observe. It's like hearing one side of a story: you get an idea of what happened, but it might not be the complete picture.

Non-Experimental Design Uses

Non-Experimental Design has always been a part of research, especially in fields like anthropology, sociology, and some areas of psychology.

For instance, if you've ever heard of studies that describe how people behave in different cultures or what teens like to do in their free time, that's often Non-Experimental Design at work. These studies aim to capture the essence of a situation, like painting a portrait instead of taking a snapshot.

One well-known example you might have heard about is the Kinsey Reports from the 1940s and 1950s, which described sexual behavior in men and women. Researchers interviewed thousands of people but didn't manipulate any variables like you would in a true experiment. They simply collected data to create a comprehensive picture of the subject matter.

So, in our metaphorical team of research designs, True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, and Meta-Analysis is the coach. That makes Non-Experimental Design the sports journalist: always present, capturing the game, but not part of the action itself.

10) Repeated Measures Design

Time to meet the Repeated Measures Design, the time traveler of our research team. If this design were a player in a sports game, it would be the one who keeps revisiting past plays to figure out how to improve the next one.

Repeated Measures Design is all about studying the same people or subjects multiple times to see how they change or react under different conditions.

The idea behind Repeated Measures Design isn't new; it's been around since the early days of psychology and medicine. You could say it's a cousin to the Longitudinal Design, but instead of looking at how things naturally change over time, it focuses on how the same group reacts to different things.

Imagine a study looking at how a new energy drink affects people's running speed. Instead of comparing one group that drank the energy drink to another group that didn't, a Repeated Measures Design would have the same group of people run multiple times—once with the energy drink, and once without. This way, you're really zeroing in on the effect of that energy drink, making the results more reliable.
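A quick sketch of how that comparison might look in code: each runner's time with the drink is compared to their own time without it. The names and times (in seconds) are made up for the example.

```python
from statistics import mean

# Hypothetical run times in seconds for the same four people under both conditions
times_without_drink = {"Ana": 62.0, "Ben": 58.5, "Cy": 65.0, "Dee": 60.0}
times_with_drink = {"Ana": 60.0, "Ben": 58.0, "Cy": 62.0, "Dee": 59.5}

# Each person is compared only with themselves (negative means faster with the drink)
differences = {
    name: times_with_drink[name] - times_without_drink[name]
    for name in times_without_drink
}
mean_difference = mean(list(differences.values()))

for name, diff in differences.items():
    print(f"{name}: {diff:+.1f} s")
print(f"Average change: {mean_difference:+.1f} s")
```

Because every difference is within one person, it doesn't matter that Ben is simply a faster runner than Cy. A full analysis would usually follow up with a paired t-test, and would mix up the order of the two runs so that tiredness doesn't get mistaken for an effect of the drink.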

Repeated Measures Design Pros

The strong point of Repeated Measures Design is that it's super focused. Because it uses the same subjects, you don't have to worry about differences between groups messing up your results.

Repeated Measures Design Cons

But the downside? Well, people can get tired or bored if they're tested too many times, which might affect how they respond.

Repeated Measures Design Uses

A famous example of this design is the "Little Albert" experiment, conducted by John B. Watson and Rosalie Rayner in 1920. In this study, a young boy was exposed to a white rat and other stimuli several times to see how his emotional responses changed. Though the ethical standards of this experiment are often criticized today, it was groundbreaking in understanding conditioned emotional responses.

In our metaphorical lineup of research designs, True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, and Non-Experimental Design is the journalist. That makes Repeated Measures Design the time traveler: always looping back to fine-tune the game plan.

11) Crossover Design

Next up is Crossover Design, the switch-hitter of the research world. If you're familiar with baseball, you'll know a switch-hitter is someone who can bat both right-handed and left-handed.

In a similar way, Crossover Design allows subjects to experience multiple conditions, flipping them around so that everyone gets a turn in each role.

This design is like the utility player on our team—versatile, flexible, and really good at adapting.

The Crossover Design has its roots in medical research and has been popular since the mid-20th century. It's often used in clinical trials to test the effectiveness of different treatments.

Crossover Design Pros

The neat thing about this design is that each participant serves as their own control. Imagine you're testing two new kinds of headache medicine. Instead of giving one type to one group and another type to a different group, you'd give both kinds to the same people but at different times. Since each person experiences all conditions, this reduces the "noise" that comes from individual differences and makes real effects easier to see.

Crossover Design Cons

However, there's a catch. This design assumes that the first condition has no lasting effect by the time you switch to the second one. That might not always be true. If the first treatment has a long-lasting effect (often called a carryover effect), it could mess up the results for the second treatment.

Crossover Design Uses

A well-known example of Crossover Design is in studies that look at the effects of different types of diets, like low-carb vs. low-fat diets. Researchers might have half the participants follow a low-carb diet for a few weeks and then switch to a low-fat diet, while the other half follow the two diets in the opposite order. By doing this, they can more accurately measure how each diet affects the same group of people.
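Here's a small Python sketch of how the order of conditions could be assigned in a two-diet crossover study like this one. It assumes the common setup where half the participants start on each diet; the participant IDs and the `assign_sequences` helper are hypothetical. Real studies often also leave a break (a washout period) between the two phases to limit lasting effects from the first diet.

```python
import random

def assign_sequences(participants, seed=0):
    """Randomly give half the participants each diet order (hypothetical helper)."""
    rng = random.Random(seed)  # fixed seed so the plan can be reproduced
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    plan = {}
    for position, person in enumerate(shuffled):
        if position < half:
            plan[person] = ("low-carb", "low-fat")
        else:
            plan[person] = ("low-fat", "low-carb")
    return plan

participants = ["P1", "P2", "P3", "P4", "P5", "P6"]  # made-up IDs
plan = assign_sequences(participants)

for person in participants:
    first, second = plan[person]
    print(f"{person}: {first} first, then {second}")
```

Everyone still experiences both diets. Only the order differs, so an effect of "going first" doesn't get confused with an effect of the diet itself.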

In our team of experimental designs, if True Experimental Design is the quarterback and Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, and Repeated Measures Design is the time traveler, then Crossover Design is the versatile utility player—always ready to adapt and play multiple roles to get the most accurate results.

12) Cluster Randomized Design

Meet the Cluster Randomized Design, the team captain of group-focused research. In our imaginary lineup of experimental designs, if other designs focus on individual players, then Cluster Randomized Design is looking at how the entire team functions.

This approach is especially common in educational and community-based research, and it's been gaining traction since the late 20th century.

Here's how Cluster Randomized Design works: Instead of assigning individual people to different conditions, researchers assign entire groups, or "clusters." These could be schools, neighborhoods, or even entire towns. This helps you see how the new method works in a real-world setting.

Imagine you want to see if a new anti-bullying program really works. Instead of assigning individual students, you'd randomly pick some schools to receive the program and then compare their results to schools without the program.
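As a rough illustration, the sketch below randomizes whole schools rather than individual students. The school names and the helpers `randomize_clusters` and `condition_for` are made up for this example, and it assumes an even split between the two arms.

```python
import random

def randomize_clusters(clusters, seed=0):
    """Randomly split whole clusters into a program arm and a comparison arm."""
    rng = random.Random(seed)  # fixed seed so the split can be reproduced
    shuffled = list(clusters)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"program": shuffled[:half], "comparison": shuffled[half:]}

def condition_for(school, arms):
    """Every student simply inherits the condition of their school."""
    return "program" if school in arms["program"] else "comparison"

schools = ["School A", "School B", "School C",
           "School D", "School E", "School F"]  # hypothetical clusters
arms = randomize_clusters(schools)

print("Anti-bullying program:", arms["program"])
print("Comparison schools:", arms["comparison"])
```

Notice that the unit being shuffled is the school. No student is assigned on their own, which is what separates this design from ordinary individual randomization.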

Cluster Randomized Design Pros

Why use Cluster Randomized Design? Well, sometimes it's just not practical to assign conditions at the individual level. For example, you can't really have half a school following a new reading program while the other half sticks with the old one; that would be way too confusing! Cluster Randomization helps get around this problem by treating each "cluster" as its own mini-experiment.

Cluster Randomized Design Cons

There's a downside, too. Because entire groups are assigned to each condition, there's a risk that the groups might be different in some important way that the researchers didn't account for. That's like having one sports team that's full of veterans playing against a team of rookies; the match wouldn't be fair.

Cluster Randomized Design Uses

A common example is research testing the effectiveness of public health interventions, like vaccination programs. Researchers might roll out a vaccination program in some randomly chosen communities but not in others, then compare the rates of disease between them.

In our metaphorical research team, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, Repeated Measures Design is the time traveler, and Crossover Design is the utility player, then Cluster Randomized Design is the team captain—always looking out for the group as a whole.

13) Mixed-Methods Design

Say hello to Mixed-Methods Design, the all-rounder or the "Renaissance player" of our research team.

Mixed-Methods Design uses a blend of both qualitative and quantitative methods to get a more complete picture, just like a Renaissance person who's good at lots of different things. It's like being good at both offense and defense in a sport; you've got all your bases covered!

Mixed-Methods Design is a fairly new kid on the block, becoming more popular in the late 20th and early 21st centuries as researchers began to see the value in using multiple approaches to tackle complex questions. It's the Swiss Army knife in our research toolkit, combining the best parts of other designs to be more versatile.

Here's how it could work: Imagine you're studying the effects of a new educational app on students' math skills. You might use quantitative methods like tests and grades to measure how much the students improve—that's the 'numbers part.'

But you also want to know how the students feel about math now, or why they think they got better or worse. For that, you could conduct interviews or have students fill out journals—that's the 'story part.'

Mixed-Methods Design Pros

So, what's the scoop on Mixed-Methods Design? The strength is its versatility and depth; you're not just getting numbers or stories, you're getting both, which gives a fuller picture.

Mixed-Methods Design Cons

But, it's also more challenging. Imagine trying to play two sports at the same time! You have to be skilled in different research methods and know how to combine them effectively.

Mixed-Methods Design Uses

A high-profile example of Mixed-Methods Design is research on climate change. Scientists use numbers and data to show temperature changes (quantitative), but they also interview people to understand how these changes are affecting communities (qualitative).

In our team of experimental designs, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, Repeated Measures Design is the time traveler, Crossover Design is the utility player, and Cluster Randomized Design is the team captain, then Mixed-Methods Design is the Renaissance player—skilled in multiple areas and able to bring them all together for a winning strategy.

14) Multivariate Design

Now, let's turn our attention to Multivariate Design, the multitasker of the research world.

If our lineup of research designs were like players on a basketball court, Multivariate Design would be the player dribbling, passing, and shooting all at once. This design doesn't just look at one or two things; it looks at several variables simultaneously to see how they interact and affect each other.

Multivariate Design is like baking a cake with many ingredients. Instead of just looking at how flour affects the cake, you also consider sugar, eggs, and milk all at once. This way, you understand how everything works together to make the cake taste good or bad.

Multivariate Design has been a go-to method in psychology, economics, and social sciences since the latter half of the 20th century. With the advent of computers and advanced statistical software, analyzing multiple variables at once became a lot easier, and Multivariate Design soared in popularity.

Multivariate Design Pros

So, what's the benefit of using Multivariate Design? Its power lies in its complexity. By studying multiple variables at the same time, you can get a really rich, detailed understanding of what's going on.

Multivariate Design Cons

But that complexity can also be a drawback. With so many variables, it can be tough to tell which ones are really making a difference and which ones are just along for the ride.

Multivariate Design Uses

Imagine you're a coach trying to figure out the best strategy to win games. You wouldn't just look at how many points your star player scores; you'd also consider assists, rebounds, turnovers, and maybe even how loud the crowd is. A Multivariate Design would help you understand how all these factors work together to determine whether you win or lose.

A well-known example of Multivariate Design is in market research. Companies often use this approach to figure out how different factors—like price, packaging, and advertising—affect sales. By studying multiple variables at once, they can find the best combination to boost profits.

In our metaphorical research team, if True Experimental Design is the quarterback, Longitudinal Design is the wise elder, Factorial Design is the strategist, Cross-Sectional Design is the speedster, Correlational Design is the scout, Meta-Analysis is the coach, Non-Experimental Design is the journalist, Repeated Measures Design is the time traveler, Crossover Design is the utility player, Cluster Randomized Design is the team captain, and Mixed-Methods Design is the Renaissance player, then Multivariate Design is the multitasker—juggling many variables at once to get a fuller picture of what's happening.

15) Pretest-Posttest Design

Let's introduce Pretest-Posttest Design, the "Before and After" superstar of our research team. You've probably seen those before-and-after pictures in ads for weight loss programs or home renovations, right?

Well, this design is like that, but for science! Pretest-Posttest Design checks out what things are like before the experiment starts and then compares that to what things are like after the experiment ends.

This design is one of the classics, a staple in research for decades across various fields like psychology, education, and healthcare. It's so simple and straightforward that it has stayed popular for a long time.

In Pretest-Posttest Design, you measure your subject's behavior or condition before you introduce any changes—that's your "before" or "pretest." Then you do your experiment, and after it's done, you measure the same thing again—that's your "after" or "posttest."

Pretest-Posttest Design Pros

What makes Pretest-Posttest Design special? It's pretty easy to understand and doesn't require fancy statistics.

Pretest-Posttest Design Cons

But there are some pitfalls. For example, what if kids in a new math program get better at multiplication just because they're older, or because they've taken the test before? With no comparison group, it's hard to tell if the program is really effective or not.

Pretest-Posttest Design Uses

Let's say you're a teacher and you want to know if a new math program helps kids get better at multiplication. First, you'd give all the kids a multiplication test—that's your pretest. Then you'd teach them using the new math program. At the end, you'd give them the same test again—that's your posttest. If the kids do better on the second test, you might conclude that the program works.

One famous use of Pretest-Posttest Design is in evaluating the effectiveness of driver's education courses. Researchers will measure people's driving skills before and after the course to see if they've improved.

16) Solomon Four-Group Design

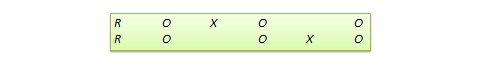

Next up is the Solomon Four-Group Design, the "chess master" of our research team. This design is all about strategy and careful planning. Named after Richard L. Solomon, who introduced it in the 1940s, this method tries to correct some of the weaknesses in simpler designs, like the Pretest-Posttest Design.

Here's how it rolls: The Solomon Four-Group Design uses four different groups to test a hypothesis. Two groups get a pretest, then one of them receives the treatment or intervention, and both get a posttest. The other two groups skip the pretest, and only one of them receives the treatment before they both get a posttest.

Sound complicated? It's like playing 4D chess; you're thinking several moves ahead!

Solomon Four-Group Design Pros

What's the upside of the Solomon Four-Group Design? It provides really robust results: by comparing groups with and without a pretest, it can separate the effect of the treatment from the effect of taking the pretest.

Solomon Four-Group Design Cons

The downside? It's a lot of work and requires a lot of participants, making it more time-consuming and costly.

Solomon Four-Group Design Uses

Let's say you want to figure out if a new way of teaching history helps students remember facts better. Two classes take a history quiz (pretest), then one class uses the new teaching method while the other sticks with the old way. Both classes take another quiz afterward (posttest).

Meanwhile, two more classes skip the initial quiz, and then one uses the new method before both take the final quiz. Comparing all four groups will give you a much clearer picture of whether the new teaching method works and whether the pretest itself affects the outcome.
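The four-class comparison can be sketched in a few lines of Python. The quiz scores below are invented, and `solomon_summary` is a hypothetical helper. It only compares class averages, which is a simplification of how a real analysis would be done.

```python
from statistics import mean

# Made-up final quiz scores for the four classes.
posttest_scores = {
    ("pretest", "new method"): [80, 84, 88],
    ("pretest", "old method"): [70, 74, 78],
    ("no pretest", "new method"): [76, 80, 84],
    ("no pretest", "old method"): [68, 72, 76],
}

def solomon_summary(scores):
    """Compare average final quiz scores across the four groups."""
    avg = {group: mean(values) for group, values in scores.items()}
    with_pretest = avg[("pretest", "new method")] - avg[("pretest", "old method")]
    without_pretest = (avg[("no pretest", "new method")]
                       - avg[("no pretest", "old method")])
    return {
        "effect_with_pretest": with_pretest,        # new vs. old, pretested classes
        "effect_without_pretest": without_pretest,  # new vs. old, no pretest
        # If this is far from zero, the pretest changed how well the method worked.
        "pretest_interaction": with_pretest - without_pretest,
    }

summary = solomon_summary(posttest_scores)
print(summary)
```

With these invented numbers, the new method looks helpful whether or not a class took the first quiz, and the small gap between the two effects hints that the pretest itself nudged the results a little.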

The Solomon Four-Group Design is less commonly used than simpler designs but is highly respected for its ability to control for more variables. It's a favorite in educational and psychological research where you really want to dig deep and figure out what's actually causing changes.

17) Adaptive Designs

Now, let's talk about Adaptive Designs, the chameleons of the experimental world.