Scientific Method

The Scientific Method was first used during the Scientific Revolution (1500-1700). The method combined theoretical knowledge such as mathematics with practical experimentation using scientific instruments, results analysis and comparisons, and finally peer reviews, all to better determine how the world around us works. In this way, hypotheses were rigorously tested, and laws could be formulated which explained observable phenomena. The goal of this scientific method was to not only increase human knowledge but to do so in a way that practically benefitted everyone and improved the human condition.

A New Approach: Bacon's Vision

Francis Bacon (1561-1626) was an English philosopher, statesman, and author. He is considered one of the founders of modern scientific research and scientific method, and even "the father of modern science", because he proposed a new combined method of empirical (observable) experimentation and shared data collection so that humanity might finally discover all of nature's secrets and improve itself. Bacon championed the need for systematic and detailed empirical study, as this was the only way to increase humanity's understanding and, for him, more importantly, gain control of nature. This approach sounds quite obvious today, but at the time, the highly theoretical approach of the Greek philosopher Aristotle (l. 384-322 BCE) still dominated thought. Verbal arguments had become more important than what could actually be seen in the world. Further, natural philosophers had become preoccupied with why things happen instead of first ascertaining what was happening in nature.

Bacon rejected the current backward-looking approach to knowledge, that is, the seemingly never-ending attempt to prove the ancients right. Instead, new thinkers and experimenters, said Bacon, should act like the new navigators who had pushed beyond the limits of the known world. Christopher Columbus (1451-1506) had shown there was land across the Atlantic Ocean. Vasco da Gama (c. 1469-1524) had explored the globe in the other direction. Scientists, as we would call them today, had to be similarly bold. Old knowledge had to be rigorously tested to see that it was worth keeping. New knowledge had to be acquired by thoroughly testing nature without preconceived ideas. Reason had to be applied to data collected from experiments, and the same data had to be openly shared with other thinkers so that it could be tested again, comparing it to what others had discovered. Finally, this knowledge must then be used to improve the human condition; otherwise, it was no use pursuing it in the first place. This was Bacon's vision. What he proposed did indeed come about but with three notable factors added to the scientific method. These were mathematics, hypotheses, and technology.

The Importance of Experiments & Instruments

Experiments had always been carried out by thinkers, from ancient figures like Archimedes (l. 287-212 BCE) to the alchemists of the Middle Ages, but their experiments were usually haphazard, and very often thinkers were trying to prove a preconceived idea. In the Scientific Revolution, experimentation became a more systematic and multi-layered activity involving many different people. This more rigorous approach to gathering observable data was also a reaction against traditional activities and methods such as magic, astrology, and alchemy, all ancient and secret worlds of knowledge-gathering that now came under attack.

At the outset of the Scientific Revolution, experiments were any sort of activity carried out to see what would happen, a sort of anything-goes approach to satisfying scientific curiosity. It is important to note, though, that the modern meaning of scientific experiment is rather different, summarised here by W. E. Burns: "the creation of an artificial situation designed to study scientific principles held to apply in all situations" (95). It is fair to say, though, that the modern approach to experimentation, with its highly specialised focus where only one specific hypothesis is being tested, would not have become possible without the pioneering experimenters of the Scientific Revolution.

The first well-documented practical experiment of our period was made by William Gilbert using magnets; he published his findings in 1600 in On the Magnet. The work was pioneering because "Central to Gilbert's enterprise was the claim that you could reproduce his experiments and confirm his results: his book was, in effect, a collection of experimental recipes" (Wootton, 331).

There remained sceptics of experimentation, those who stressed that the senses could be misled when the reason of the mind could not be. One such doubter was René Descartes (1596-1650), but if anything, he and other natural philosophers who questioned the value of the work of the practical experimenters were responsible for creating a lasting new division between philosophy and what we would today call science. The term "science" was still not widely used in the 17th century; instead, many experimenters referred to themselves as practitioners of "experimental philosophy". The first use in English of the term "experimental method" was in 1675.

The first truly international effort in coordinated experiments involved the development of the barometer. This process began with the efforts of the Italian Evangelista Torricelli (1608-1647) in 1643. Torricelli discovered that mercury could be raised within a glass tube when one end of that tube was placed in a container of mercury. The air pressure on the mercury in the container pushed the mercury in the tube up around 30 inches (76 cm) higher than the level in the container. In 1648, Blaise Pascal (1623-1662) and his brother-in-law Florin Périer conducted experiments using similar apparatus, but this time tested under different atmospheric pressures by setting up the devices at a variety of altitudes on the side of a mountain. The scientists noted that the level of the mercury in the glass tube fell the higher up the mountain readings were taken.
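The balance Torricelli observed can be put into numbers with the hydrostatic relation P = ρgh. This is a modern formulation, not one the 17th-century experimenters used. The following is a minimal Python sketch under stated assumptions: the function name `column_pressure_pa` is hypothetical, and the density of mercury and the value of standard gravity are modern reference values that do not come from the account above.

```python
# Modern reference values (assumptions, not taken from the historical sources).
MERCURY_DENSITY_KG_M3 = 13595.1   # density of mercury at 0 °C
STANDARD_GRAVITY_M_S2 = 9.80665   # standard acceleration of gravity


def column_pressure_pa(height_m: float) -> float:
    """Pressure (in pascals) needed to support a mercury column of the given height."""
    if height_m < 0:
        raise ValueError("column height cannot be negative")
    return MERCURY_DENSITY_KG_M3 * STANDARD_GRAVITY_M_S2 * height_m


if __name__ == "__main__":
    sea_level = column_pressure_pa(0.76)   # Torricelli's column of roughly 76 cm
    higher_up = column_pressure_pa(0.68)   # a shorter column (illustrative value only)
    print(f"76 cm column: {sea_level / 1000:.1f} kPa")
    print(f"68 cm column: {higher_up / 1000:.1f} kPa")
```

A 76 cm column corresponds to roughly 101 kPa, the familiar figure for atmospheric pressure at sea level. A shorter column, such as Pascal and Périer recorded higher up the mountain, corresponds to a proportionally lower pressure.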

The Anglo-Irish chemist Robert Boyle (1627-1691) named the new instrument a barometer and conclusively demonstrated the effect of air pressure by using a barometer inside an air pump where a vacuum was established. Boyle formulated a principle which became known as 'Boyle's Law'. This law states that the pressure exerted by a certain quantity of air varies inversely in proportion to its volume (provided temperatures are constant). The story of the development of the barometer became typical throughout the Scientific Revolution: natural phenomena were observed, instruments were invented to measure and understand these observable facts, scientists collaborated (sometimes even competed), and so they extended the work of each other until, finally, a universal law could be devised which explained what was being seen. This law could then be used as a predictive device in future experiments.
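Boyle's Law as stated above can be written as P₁V₁ = P₂V₂ for a fixed quantity of air at constant temperature. The short Python sketch below illustrates how such a law can serve as a predictive device; the function name `predict_pressure` and the sample numbers are hypothetical and chosen only for illustration.

```python
def predict_pressure(p1: float, v1: float, v2: float) -> float:
    """Predict the pressure after a volume change, assuming Boyle's Law.

    Assumes a fixed quantity of air at constant temperature, so p1 * v1 == p2 * v2.
    Pressures and volumes may be in any units, as long as they are consistent.
    """
    if p1 < 0 or v1 <= 0 or v2 <= 0:
        raise ValueError("pressure must be non-negative and volumes positive")
    return p1 * v1 / v2


if __name__ == "__main__":
    # Illustrative values: the volume is halved from 2.0 to 1.0.
    print(predict_pressure(p1=100.0, v1=2.0, v2=1.0))
```

Halving the volume doubles the predicted pressure, and doubling the volume halves it. A measured value that departed markedly from the prediction would suggest that one of the assumptions, such as constant temperature, did not hold in the experiment.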

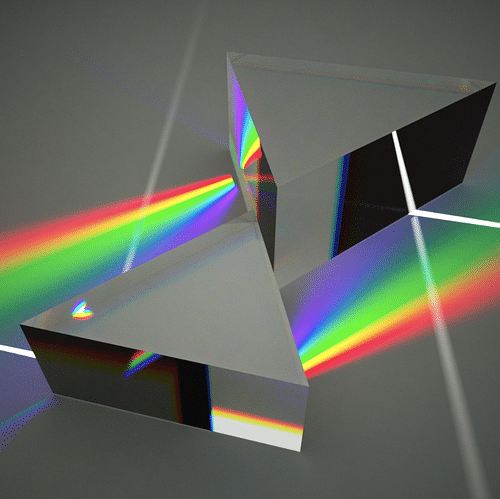

Experiments like Robert Boyle's air pump demonstrations and Isaac Newton's use of a prism to demonstrate that white light is made up of different coloured light continued the trend of experimentation to prove, test, and adjust theories. Further, these endeavours highlight the importance of scientific instruments in the new method of inquiry. The scientific method was employed to invent useful and accurate instruments, which were, in turn, used in further experiments. The invention of the telescope (c. 1608), microscope (c. 1610), barometer (1643), thermometer (c. 1650), pendulum clock (1657), air pump (1659), and balance spring watch (1675) all allowed fine measurements to be made which previously had been impossible. New instruments meant that a whole new range of experiments could be carried out. Whole new specialisations of study became possible, such as meteorology, microscopic anatomy, embryology, and optics.

The scientific method came to involve the following key components:

- conducting practical experiments

- conducting experiments without prejudice of what they should prove

- using inductive reasoning (creating a generalisation from specific examples) to form a hypothesis (untested theory), which is then tested by an experiment, after which the hypothesis might be accepted, altered, or rejected based on empirical (observable) evidence

- conducting multiple experiments and doing so in different places and by different people to confirm the reliability of the results

- an open and critical review of the results of an experiment by peers

- the formulation of universal laws (inductive reasoning or logic) using, for example, mathematics

- a desire to gain practical benefits from scientific experiments and a belief in the idea of scientific progress

(Note: the above criteria are expressed in modern linguistic terms, not necessarily those terms 17th-century scientists would have used since the revolution in science also caused a revolution in the language to describe it).

Scientific Institutions

The scientific method really took hold when it became institutionalised, that is, when it was endorsed and employed by official institutions like the learned societies where thinkers tested their theories in the real world and worked collaboratively. The first such society was the Accademia del Cimento in Florence, founded in 1657. Others soon followed, notably the Royal Academy of Sciences in Paris in 1666. Four years earlier, London had gained its own academy with the foundation of the Royal Society. The founding fellows of this society credited Bacon with the idea, and they were keen to follow his principles of scientific method and his emphasis on sharing and communicating scientific data and results. The Berlin Academy was founded in 1700 and the St. Petersburg Academy in 1724. These academies and societies became the focal points of an international network of scientists who corresponded, read each other's works, and even visited each other as the new scientific method took hold.

Official bodies were able to fund expensive experiments and assemble or commission new equipment. They showed these experiments to the public, a practice that illustrates that what was new here was not the act of discovery but the creation of a culture of discovery. Scientists went much further than a real-time audience and ensured their results were printed for a far wider (and more critical) readership in journals and books. Here, in print, the experiments were described in great detail, and the results were presented for all to see. In this way, scientists were able to create "virtual witnesses" to their experiments. Now, anyone who cared to be could become a participant in the development of knowledge acquired through science.


Bibliography

- Burns, William E. The Scientific Revolution in Global Perspective. Oxford University Press, 2015.

- Burns, William E. The Scientific Revolution. ABC-CLIO, 2001.

- Bynum, William F. & Browne, Janet & Porter, Roy. Dictionary of the History of Science. Princeton University Press, 1982.

- Henry, John. The Scientific Revolution and the Origins of Modern Science. Red Globe Press, 2008.

- Jardine, Lisa. Ingenious Pursuits. Nan A. Talese, 1999.

- Moran, Bruce T. Distilling Knowledge. Harvard University Press, 2006.

- Wootton, David. The Invention of Science. Harper, 2015.


Cite this work.

Cartwright, M. (2023, November 07). Scientific Method. World History Encyclopedia. Retrieved from https://www.worldhistory.org/Scientific_Method/


License & Copyright

Submitted by Mark Cartwright, published on 07 November 2023. The copyright holder has published this content under the following license: Creative Commons Attribution-NonCommercial-ShareAlike. This license lets others remix, tweak, and build upon this content non-commercially, as long as they credit the author and license their new creations under the identical terms. When republishing on the web, a hyperlink back to the original content source URL must be included. Please note that content linked from this page may have different licensing terms.


- Published: 26 October 2016

In retrospect

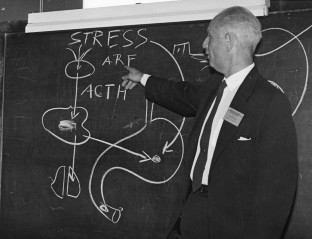

Eighty years of stress

George Fink

Nature volume 539, pages 175–176 (2016)


The discovery in 1936 that rats respond to various damaging stimuli with a general response that involves alarm, resistance and exhaustion launched the discipline of stress research.




George Fink is at the Florey Institute of Neuroscience and Mental Health, University of Melbourne, Parkville, Victoria 3010, Australia.


Fink, G. Eighty years of stress. Nature 539, 175–176 (2016). https://doi.org/10.1038/nature20473

Issue date: 10 November 2016


Theory and Observation in Science

Scientists obtain a great deal of the evidence they use by observing natural and experimentally generated objects and effects. Much of the standard philosophical literature on this subject comes from 20th century logical empiricists, their followers, and critics who embraced their issues and accepted some of their assumptions even as they objected to specific views. Their discussions of observational evidence tend to focus on epistemological questions about its role in theory testing. This entry follows their lead even though observational evidence also plays important and philosophically interesting roles in other areas including scientific discovery, the development of experimental tools and techniques, and the application of scientific theories to practical problems.

The issues that get the most attention in the standard philosophical literature on observation and theory have to do with the distinction between observables and unobservables, the form and content of observation reports, and the epistemic bearing of observational evidence on theories it is used to evaluate. This entry discusses these topics under the following headings:

1. Introduction
2. What do observation reports describe?
3. Is observation an exclusively perceptual process?
4. How observational evidence might be theory laden
5. Salience and theoretical stance
6. Semantic theory loading
7. Operationalization and observation reports
8. Is perception theory laden?
9. How do observational data bear on the acceptability of theoretical claims?
10. Data and phenomena
11. Conclusion

1. Introduction

Reasoning from observations has been important to scientific practice at least since the time of Aristotle, who mentions a number of sources of observational evidence including animal dissection (Aristotle(a) 763a/30–b/15, Aristotle(b) 511b/20–25). But philosophers didn’t talk about observation as extensively, in as much detail, or in the way we have become accustomed to, until the 20th century when logical empiricists transformed philosophical thinking about it.

The first transformation was accomplished by ignoring the implications of a long-standing distinction between observing and experimenting. To experiment is to isolate, prepare, and manipulate things in hopes of producing epistemically useful evidence. It had been customary to think of observing as noticing and attending to interesting details of things perceived under more or less natural conditions, or by extension, things perceived during the course of an experiment. To look at a berry on a vine and attend to its color and shape would be to observe it. To extract its juice and apply reagents to test for the presence of copper compounds would be to perform an experiment. Contrivance and manipulation influence epistemically significant features of observable experimental results to such an extent that epistemologists ignore them at their peril. Robert Boyle (1661), John Herschel (1830), Bruno Latour and Steve Woolgar (1979), Ian Hacking (1983), Harry Collins (1985), Allan Franklin (1986), Peter Galison (1987), Jim Bogen and Jim Woodward (1988), and Hans-Jörg Rheinberger (1997) are some of the philosophers and philosophically minded scientists, historians, and sociologists of science who gave serious consideration to the distinction between observing and experimenting. The logical empiricists tended to ignore it.

A second transformation, characteristic of the linguistic turn in philosophy, was to shift attention away from things observed in natural or experimental settings and concentrate instead on the logic of observation reports. The shift developed from the assumption that a scientific theory is a system of sentences or sentence-like structures (propositions, statements, claims, and so on) to be tested by comparison to observational evidence. It was further assumed that the comparisons must be understood in terms of inferential relations. If inferential relations hold only between sentence-like structures, it follows that theories must be tested, not against observations or things observed, but against sentences, propositions, etc. used to report observations (Hempel 1935, 50–51; Schlick 1935).

Friends of this line of thought theorized about the syntax, semantics, and pragmatics of observation sentences, and inferential connections between observation and theoretical sentences. In doing so they hoped to articulate and explain the authoritativeness widely conceded to the best natural, social, and behavioral scientific theories. Some pronouncements from astrologers, medical quacks, and other pseudoscientists gain wide acceptance, as do those of religious leaders who rest their cases on faith or personal revelation, and rulers and governmental officials who use their political power to secure assent. But such claims do not enjoy the kind of credibility that scientific theories can attain. The logical empiricists tried to account for this by appeal to the objectivity and accessibility of observation reports, and the logic of theory testing.

Part of what they meant by calling observational evidence objective was that cultural and ethnic factors have no bearing on what can validly be inferred about the merits of a theory from observation reports. So conceived, objectivity was important to the logical empiricists’ criticism of the Nazi idea that Jews and Aryans have fundamentally different thought processes such that physical theories suitable for Einstein and his kind should not be inflicted on German students. In response to this rationale for ethnic and cultural purging of the German educational system, the logical empiricists argued that, because of its objectivity, observational evidence, rather than ethnic and cultural factors, should be used to evaluate scientific theories (Galison 1990). Less dramatically, the efforts working scientists put into producing objective evidence attest to the importance they attach to objectivity. Furthermore, it is possible, in principle at least, to make observation reports and the reasoning used to draw conclusions from them available for public scrutiny. If observational evidence is objective in this sense, it can provide people with what they need to decide for themselves which theories to accept without having to rely unquestioningly on authorities.

Francis Bacon argued long ago that the best way to discover things about nature is to use experiences (his term for observations as well as experimental results) to develop and improve scientific theories (Bacon 1620, 49ff). The role of observational evidence in scientific discovery was an important topic for Whewell (1858) and Mill (1872) among others in the 19th century. Recently, Judea Pearl, Clark Glymour, and their students and associates addressed it rigorously in the course of developing techniques for inferring claims about causal structures from statistical features of the data they give rise to (Pearl 2000; Spirtes, Glymour, and Scheines 2000). But such work is exceptional. For the most part, philosophers followed Karl Popper, who maintained, contrary to the title of one of his best known books, that there is no such thing as a ‘logic of discovery’ (Popper 1959, 31). Drawing a sharp distinction between discovery and justification, the standard philosophical literature devotes most of its attention to the latter.

Theories are customarily represented as collections of sentences, propositions, statements or beliefs, etc., and their logical consequences. Among these are maximally general explanatory and predictive laws (Coulomb’s law of electrical attraction and repulsion, and Maxwellian electromagnetism equations for example), along with lesser generalizations that describe more limited natural and experimental phenomena (e.g., the ideal gas equations describing relations between temperatures and pressures of enclosed gasses, and general descriptions of positional astronomical regularities). Observations are used in testing generalizations of both kinds.

Some philosophers prefer to represent theories as collections of ‘states of physical or phenomenal systems’ and laws. The laws for any given theory are

…relations over states which determine…possible behaviors of phenomenal systems within the theory’s scope. (Suppe 1977, 710)

So conceived, a theory can be adequately represented by more than one linguistic formulation because it is not a system of sentences or propositions. Instead, it is a non-linguistic structure which can function as a semantic model of its sentential or propositional representations (Suppe 1977, 221–230). This entry treats theories as collections of sentences or sentential structures with or without deductive closure. But the questions it takes up arise in pretty much the same way when theories are represented in accordance with this semantic account.

2. What do observation reports describe?

One answer to this question assumes that observation is a perceptual process so that to observe is to look at, listen to, touch, taste, or smell something, attending to details of the resulting perceptual experience. Observers may have the good fortune to obtain useful perceptual evidence simply by noticing what’s going on around them, but in many cases they must arrange and manipulate things to produce informative perceptible results. In either case, observation sentences describe perceptions or things perceived.

Observers use magnifying glasses, microscopes, or telescopes to see things that are too small or far away to be seen, or seen clearly enough, without them. Similarly, amplification devices are used to hear faint sounds. But if to observe something is to perceive it, not every use of instruments to augment the senses qualifies as observational. Philosophers agree that you can observe the moons of Jupiter with a telescope, or a heartbeat with a stethoscope. But minimalist empiricists like Bas van Fraassen (1980, 16–17) deny that one can observe things that can be visualized only by using electron (and perhaps even light) microscopes. Many philosophers don’t mind microscopes but find it unnatural to say that high energy physicists observe particles or particle interactions when they look at bubble chamber photographs. Their intuitions come from the plausible assumption that one can observe only what one can see by looking, hear by listening, feel by touching, and so on. Investigators can neither look at (direct their gazes toward and attend to) nor visually experience charged particles moving through a bubble chamber. Instead they can look at and see tracks in the chamber, or in bubble chamber photographs.

The identification of observation and perceptual experience persisted well into the 20th century, so much so that Carl Hempel could characterize the scientific enterprise as an attempt to predict and explain the deliverances of the senses (Hempel 1952, 653). This was to be accomplished by using laws or lawlike generalizations along with descriptions of initial conditions, correspondence rules, and auxiliary hypotheses to derive observation sentences describing the sensory deliverances of interest. Theory testing was treated as a matter of comparing observation sentences describing observations made in natural or laboratory settings to observation sentences that should be true according to the theory to be tested. This makes it imperative to ask what observation sentences report. Even though scientists often record their evidence non-sententially, e.g., in the form of pictures, graphs, and tables of numbers, some of what Hempel says about the meanings of observation sentences applies to non-sentential observational records as well.

According to what Hempel called the phenomenalist account, observation reports describe the observer’s subjective perceptual experiences.

…Such experiential data might be conceived of as being sensations, perceptions, and similar phenomena of immediate experience. (Hempel 1952, 674)

This view is motivated by the assumption that the epistemic value of an observation report depends upon its truth or accuracy, and that with regard to perception, the only thing observers can know with certainty to be true or accurate is how things appear to them. This means that we can’t be confident that observation reports are true or accurate if they describe anything beyond the observer’s own perceptual experience. Presumably one’s confidence in a conclusion should not exceed one’s confidence in one’s best reasons to believe it. For the phenomenalist it follows that reports of subjective experience can provide better reasons to believe claims they support than reports of other kinds of evidence. Furthermore, if C.I. Lewis had been right to think that probabilities cannot be established on the basis of dubitable evidence (Lewis 1950, 182), observation sentences would have no evidential value unless they report the observer’s subjective experiences.

But given the expressive limitations of the language available for reporting subjective experiences we can’t expect phenomenalistic reports to be precise and unambiguous enough to test theoretical claims whose evaluation requires accurate, fine-grained perceptual discriminations. Worse yet, if experiences are directly available only to those who have them, there is room to doubt whether different people can understand the same observation sentence in the same way. Suppose you had to evaluate a claim on the basis of someone else’s subjective report of how a litmus solution looked to her when she dripped a liquid of unknown acidity into it. How could you decide whether her visual experience was the same as the one you would use her words to report?

Such considerations led Hempel to propose, contrary to the phenomenalists, that observation sentences report ‘directly observable’, ‘intersubjectively ascertainable’ facts about physical objects

…such as the coincidence of the pointer of an instrument with a numbered mark on a dial; a change of color in a test substance or in the skin of a patient; the clicking of an amplifier connected with a Geiger counter; etc. (ibid.)

Observers do sometimes have trouble making fine pointer position and color discriminations, but such things are more susceptible to precise, intersubjectively understandable descriptions than subjective experiences. How much precision and what degree of intersubjective agreement are required in any given case depends on what is being tested and how the observation sentence is used to evaluate it. But all things being equal, we can’t expect data whose acceptability depends upon delicate subjective discriminations to be as reliable as data whose acceptability depends upon facts that can be ascertained intersubjectively. And similarly for non-sentential records; a drawing of what the observer takes to be the position of a pointer can be more reliable and easier to assess than a drawing that purports to capture her subjective visual experience of the pointer.

The fact that science is seldom a solitary pursuit suggests that one might be able to use pragmatic considerations to finesse questions about what observation reports express. Scientific claims, especially those with practical and policy applications, are typically used for purposes that are best served by public evaluation. Furthermore, the development and application of a scientific theory typically requires collaboration and in many cases is promoted by competition. This, together with the fact that investigators must agree to accept putative evidence before they use it to test a theoretical claim, imposes a pragmatic condition on observation reports: an observation report must be such that investigators can reach agreement relatively quickly and relatively easily about whether it provides good evidence with which to test a theory (cf. Neurath 1913). Feyerabend took this requirement seriously enough to characterize observation sentences pragmatically in terms of widespread decidability. In order to be an observation sentence, he said, a sentence must be contingently true or false, and such that competent speakers of the relevant language can quickly and unanimously decide whether to accept or reject it on the basis of what happens when they look, listen, etc. in the appropriate way under the appropriate observation conditions (Feyerabend 1959, 18ff).

The requirement of quick, easy decidability and general agreement favors Hempel’s account of what observation sentences report over the phenomenalist’s. But one shouldn’t rely on data whose only virtue is widespread acceptance. Presumably the data must possess additional features by virtue of which it can serve as an epistemically trustworthy guide to a theory’s acceptability. If epistemic trustworthiness requires certainty, this requirement favors the phenomenalists. Even if trustworthiness doesn’t require certainty, it is not the same thing as quick and easy decidability. Philosophers need to address the question of how these two requirements can be mutually satisfied.

Many of the things scientists investigate do not interact with human perceptual systems as required to produce perceptual experiences of them. The methods investigators use to study such things argue against the idea—however plausible it may once have seemed—that scientists do or should rely exclusively on their perceptual systems to obtain the evidence they need. Thus Feyerabend proposed as a thought experiment that if measuring equipment was rigged up to register the magnitude of a quantity of interest, a theory could be tested just as well against its outputs as against records of human perceptions (Feyerabend 1969, 132–137).

Feyerabend could have made his point with historical examples instead of thought experiments. A century earlier Helmholtz estimated the speed of excitatory impulses traveling through a motor nerve. To initiate impulses whose speed could be estimated, he implanted an electrode into one end of a nerve fiber and ran a current into it from a coil. The other end was attached to a bit of muscle whose contraction signaled the arrival of the impulse. To find out how long it took the impulse to reach the muscle he had to know when the stimulating current reached the nerve. But

[o]ur senses are not capable of directly perceiving an individual moment of time with such small duration…

and so Helmholtz had to resort to what he called ‘artificial methods of observation’ (Olesko and Holmes 1994, 84). This meant arranging things so that current from the coil could deflect a galvanometer needle. Assuming that the magnitude of the deflection is proportional to the duration of current passing from the coil, Helmholtz could use the deflection to estimate the duration he could not see (ibid.). This ‘artificial observation’ is not to be confused with, e.g., using magnifying glasses or telescopes to see tiny or distant objects. Such devices enable the observer to scrutinize visible objects. The minuscule duration of the current flow is not a visible object. Helmholtz studied it by looking at and seeing something else. (Hooke (1705, 16–17) argued for and designed instruments to execute the same kind of strategy in the 17th century.) The moral of Feyerabend’s thought experiment and Helmholtz’s distinction between perception and artificial observation is that working scientists are happy to call things that register on their experimental equipment observables even if they don’t or can’t register on their senses.
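
Helmholtz’s step from the visible deflection to the invisible duration can be written out as a one-line inference. This is only a sketch under the stated proportionality assumption; the symbols $\theta$ (magnitude of the needle deflection), $\Delta t$ (duration of the current) and $k$ (a calibration constant for the apparatus) are introduced here for illustration and do not appear in the source.

```latex
% Assumption: deflection is proportional to the duration of the current.
\theta = k\,\Delta t
\quad\Longrightarrow\quad
\Delta t = \frac{\theta}{k}
```

What the observer sees is $\theta$. The duration $\Delta t$ is calculated, and the estimate is only as good as the proportionality assumption and the value assigned to $k$.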

Some evidence is produced by processes so convoluted that it’s hard to decide what, if anything, has been observed. Consider functional magnetic resonance images (fMRI) of the brain decorated with colors to indicate magnitudes of electrical activity in different regions during the performance of a cognitive task. To produce these images, brief magnetic pulses are applied to the subject’s brain. The magnetic force coordinates the precessions of protons in hemoglobin and other bodily stuffs to make them emit radio signals strong enough for the equipment to respond to. When the magnetic force is relaxed, the signals from protons in highly oxygenated hemoglobin deteriorate at a detectably different rate than signals from blood that carries less oxygen. Elaborate algorithms are applied to radio signal records to estimate blood oxygen levels at the places from which the signals are calculated to have originated. There is good reason to believe that blood flowing just downstream from spiking neurons carries appreciably more oxygen than blood in the vicinity of resting neurons. Assumptions about the relevant spatial and temporal relations are used to estimate levels of electrical activity in small regions of the brain corresponding to pixels in the finished image. The results of all of these computations are used to assign the appropriate colors to pixels in a computer-generated image of the brain. The role of the senses in fMRI data production is limited to such things as monitoring the equipment and keeping an eye on the subject. Their epistemic role is limited to discriminating the colors in the finished image, reading tables of numbers the computer used to assign them, and so on.

If fMRI images record observations, it’s hard to say what was observed: neuronal activity, blood oxygen levels, proton precessions, radio signals, or something else. (If anything is observed, the radio signals that interact directly with the equipment would seem to be better candidates than blood oxygen levels or neuronal activity.) Furthermore, it’s hard to reconcile the idea that fMRI images record observations with the traditional empiricist notion that, much as they may be needed to draw conclusions from observational evidence, calculations involving theoretical assumptions and background beliefs must not be allowed (on pain of loss of objectivity) to intrude into the process of data production. The production of fMRI images requires extensive statistical manipulation based on theories about the radio signals, and a variety of factors having to do with their detection along with beliefs about relations between blood oxygen levels and neuronal activity, sources of systematic error, and so on.

In view of all of this, functional brain imaging differs, e.g., from looking and seeing, photographing, and measuring with a thermometer or a galvanometer in ways that make it uninformative to call it observation at all. And similarly for many other methods scientists use to produce non-perceptual evidence.

Terms like ‘observation’ and ‘observation reports’ don’t occur nearly as much in scientific as in philosophical writings. In their place, working scientists tend to talk about data. Philosophers who adopt this usage are free to think about standard examples of observation as members of a large, diverse, and growing family of data production methods. Instead of trying to decide which methods to classify as observational and which things qualify as observables, philosophers can then concentrate on the epistemic influence of the factors that differentiate members of the family. In particular, they can focus their attention on what questions data produced by a given method can be used to answer, what must be done to use that data fruitfully, and the credibility of the answers they afford (Bogen 2016).

It is of interest that records of perceptual observation are not always epistemically superior to data from experimental equipment. Indeed, it is not unusual for investigators to use non-perceptual evidence to evaluate perceptual data and correct for its errors. For example, Rutherford and Pettersson conducted similar experiments to find out if certain elements disintegrated to emit charged particles under radioactive bombardment. To detect emissions, observers watched a scintillation screen for faint flashes produced by particle strikes. Pettersson’s assistants reported seeing flashes from silicon and certain other elements. Rutherford’s did not. Rutherford’s colleague, James Chadwick, visited Pettersson’s laboratory to evaluate his data. Instead of watching the screen and checking Pettersson’s data against what he saw, Chadwick arranged to have Pettersson’s assistants watch the screen while, unbeknownst to them, he manipulated the equipment, alternating normal operating conditions with a condition in which particles, if any, could not hit the screen. Pettersson’s data were discredited by the fact that his assistants reported flashes at close to the same rate in both conditions (Stuewer 1985, 284–288).

Related considerations apply to the distinction between observable and unobservable objects of investigation. Some data are produced to help answer questions about things that do not themselves register on the senses or experimental equipment. Solar neutrino fluxes are a frequently discussed case in point. Neutrinos cannot interact directly with the senses or measuring equipment to produce recordable effects. Fluxes in their emission were studied by trapping the neutrinos and allowing them to interact with chlorine to produce a radioactive argon isotope. Experimentalists could then calculate fluxes in solar neutrino emission from Geiger counter measurements of radiation from the isotope. The epistemic significance of the neutrinos’ unobservability depends upon factors having to do with the reliability of the data the investigators managed to produce, and its validity as a source of information about the fluxes. Its validity will depend, among many other things, on the correctness of the investigators’ ideas about how neutrinos interact with chlorine (Pinch 1985). But there are also unobservables that cannot be detected, and whose features cannot be inferred from data of any kind. These are the only unobservables that are epistemically unavailable. Whether they remain so depends upon whether scientists can figure out how to produce data to study them. The histories of particle physics (see, e.g., Morrison 2015) and neuroscience (see, e.g., Valenstein 2005) offer examples.

Empirically minded philosophers assume that the evidential value of an observation or observational process depends on how sensitive it is to whatever it is used to study. But this in turn depends on the adequacy of any theoretical claims its sensitivity may depend on. For example, we can challenge the use of a thermometer reading, e, to support a description, prediction, or explanation of a patient’s temperature, t, by challenging theoretical claims, C, having to do with whether a reading from a thermometer like this one, applied in the same way under similar conditions, should indicate the patient’s temperature well enough to count in favor of or against t. At least some of the C will be such that regardless of whether an investigator explicitly endorses, or is even aware of them, her use of e would be undermined by their falsity. All observations and uses of observational evidence are theory laden in this sense (cf. Chang 2005, Azzouni 2004). As the example of the thermometer illustrates, analogues of Norwood Hanson’s claim that seeing is a theory laden undertaking apply just as well to equipment generated observations (Hanson 1958, 19). But if all observations and observational processes are theory laden, how can they provide reality based, objective epistemic constraints on scientific reasoning? One thing to say about this is that the theoretical claims the epistemic value of a parcel of observational evidence depends on may be quite correct. If so, even if we don’t know, or have no way to establish their correctness, the evidence may be good enough for the uses to which we put it. But this is cold comfort for investigators who can’t establish it.
The next thing to say is that scientific investigation is an ongoing process during the course of which theoretical claims whose unacceptability would reduce the epistemic value of a parcel of evidence can be challenged and defended in different ways at different times as new considerations and investigative techniques are introduced. We can hope that the acceptability of the evidence can be established relative to one or more stretches of time even though success in dealing with challenges at one time is no guarantee that all future challenges can be satisfactorily dealt with. Thus as long as scientists continue their work there need be no time at which the epistemic value of a parcel of evidence can be established once and for all. This should come as no surprise to anyone who is aware that science is fallible. But it is no grounds for skepticism. It can be perfectly reasonable to trust the evidence available at present even though it is logically possible for epistemic troubles to arise in the future.

Thomas Kuhn (1962), Norwood Hanson (1958), Paul Feyerabend (1959) and others cast suspicion on the objectivity of observational evidence in another way by arguing that one can’t use empirical evidence to test a theory without committing oneself to that very theory. Although some of the examples they use to present their case feature equipment generated evidence, they tend to talk about observation as a perceptual process. Kuhn’s writings contain three different versions of this idea.

K1. Perceptual Theory Loading. Perceptual psychologists, Bruner and Postman, found that subjects who were briefly shown anomalous playing cards, e.g., a black four of hearts, reported having seen their normal counterparts, e.g., a red four of hearts. It took repeated exposures to get subjects to say the anomalous cards didn’t look right, and eventually, to describe them correctly (Kuhn 1962, 63). Kuhn took such studies to indicate that things don’t look the same to observers with different conceptual resources. (For a more up-to-date discussion of theory and conceptual perceptual loading see Lupyan 2015.) If so, black hearts didn’t look like black hearts until repeated exposures somehow allowed subjects to acquire the concept of a black heart. By analogy, Kuhn supposed, when observers working in conflicting paradigms look at the same thing, their conceptual limitations should keep them from having the same visual experiences (Kuhn 1962, 111, 113–114, 115, 120–1). This would mean, for example, that when Priestley and Lavoisier watched the same experiment, Lavoisier should have seen what accorded with his theory that combustion and respiration are oxidation processes, while Priestley’s visual experiences should have agreed with his theory that burning and respiration are processes of phlogiston release.

K2. Semantical Theory Loading. Kuhn argued that theoretical commitments exert a strong influence on observation descriptions, and what they are understood to mean (Kuhn 1962, 127ff; Longino 1979, 38–42). If so, proponents of a caloric account of heat won’t describe or understand descriptions of observed results of heat experiments in the same way as investigators who think of heat in terms of mean kinetic energy or radiation. They might all use the same words (e.g., ‘temperature’) to report an observation without understanding them in the same way.

K3. Salience. Kuhn claimed that if Galileo and an Aristotelian physicist had watched the same pendulum experiment, they would not have looked at or attended to the same things. The Aristotelian’s paradigm would have required the experimenter to measure

…the weight of the stone, the vertical height to which it had been raised, and the time required for it to achieve rest (Kuhn 1962, 123)

and ignore radius, angular displacement, and time per swing (Kuhn 1962, 124).

These last were salient to Galileo because he treated pendulum swings as constrained circular motions. The Galilean quantities would be of no interest to an Aristotelian who treats the stone as falling under constraint toward the center of the earth (Kuhn 1962, 123). Thus Galileo and the Aristotelian would not have collected the same data. (Absent records of Aristotelian pendulum experiments we can think of this as a thought experiment.)

Taking K1, K2, and K3 in order of plausibility, K3 points to an important fact about scientific practice. Data production (including experimental design and execution) is heavily influenced by investigators’ background assumptions. Sometimes these include theoretical commitments that lead experimentalists to produce non-illuminating or misleading evidence. In other cases they may lead experimentalists to ignore, or even fail to produce, useful evidence. For example, in order to obtain data on orgasms in female stumptail macaques, one researcher wired up females to produce radio records of orgasmic muscle contractions, heart rate increases, etc. But as Elisabeth Lloyd reports, “… the researcher … wired up the heart rate of the male macaques as the signal to start recording the female orgasms. When I pointed out that the vast majority of female stumptail orgasms occurred during sex among the females alone, he replied that yes he knew that, but he was only interested in important orgasms” (Lloyd 1993, 142). Although female stumptail orgasms occurring during sex with males are atypical, the experimental design was driven by the assumption that what makes features of female sexuality worth studying is their contribution to reproduction (Lloyd 1993, 139).

Fortunately, such things don’t always happen. When they do, investigators are often able eventually to make corrections, and come to appreciate the significance of data that had not originally been salient to them. Thus paradigms and theoretical commitments actually do influence saliency, but their influence is neither inevitable nor irremediable.

With regard to semantic theory loading (K2), it’s important to bear in mind that observers don’t always use declarative sentences to report observational and experimental results. They often draw, photograph, make audio recordings, etc. instead, or set up their experimental devices to generate graphs, pictorial images, tables of numbers, and other non-sentential records. Obviously investigators’ conceptual resources and theoretical biases can exert epistemically significant influences on what they record (or set their equipment to record), which details they include or emphasize, and which forms of representation they choose (Daston and Galison 2007, 115–190, 309–361). But disagreements about the epistemic import of a graph, picture or other non-sentential bit of data often turn on causal rather than semantical considerations. Anatomists may have to decide whether a dark spot in a micrograph was caused by a staining artifact or by light reflected from an anatomically significant structure. Physicists may wonder whether a blip in a Geiger counter record reflects the causal influence of the radiation they wanted to monitor, or a surge in ambient radiation. Chemists may worry about the purity of samples used to obtain data. Such questions are not, and are not well represented as, semantic questions to which K2 is relevant. Late 20th century philosophers may have ignored such cases and exaggerated the influence of semantic theory loading because they thought of theory testing in terms of inferential relations between observation and theoretical sentences.

With regard to sentential observation reports, the significance of semantic theory loading is less ubiquitous than one might expect. The interpretation of verbal reports often depends on ideas about causal structure rather than the meanings of signs. Rather than worrying about the meaning of words used to describe their observations, scientists are more likely to wonder whether the observers made up or withheld information, whether one or more details were artifacts of observation conditions, whether the specimens were atypical, and so on.

Kuhnian paradigms are heterogeneous collections of experimental practices, theoretical principles, problems selected for investigation, approaches to their solution, etc. Connections between components are loose enough to allow investigators who disagree profoundly over one or more theoretical claims to agree about how to design, execute, and record the results of their experiments. That is why neuroscientists who disagreed about whether nerve impulses consisted of electrical currents could measure the same electrical quantities, and agree on the linguistic meaning and the accuracy of observation reports including such terms as ‘potential’, ‘resistance’, ‘voltage’ and ‘current’.

The issues this section touches on are distant, linguistic descendants of issues that arose in connection with Locke’s view that mundane and scientific concepts (the empiricists called them ideas) derive their contents from experience (Locke 1700, 104–121, 162–164, 404–408).

Looking at a patient with red spots and a fever, an investigator might report having seen the spots, or measles symptoms, or a patient with measles. Watching an unknown liquid dripping into a litmus solution, an observer might report seeing a change in color, a liquid with a pH of less than 7, or an acid. The appropriateness of a description of a test outcome depends on how the relevant concepts are operationalized. What justifies an observer in reporting that she observed a case of measles according to one operationalization might require her to say no more than that she had observed measles symptoms, or just red spots, according to another.

In keeping with Percy Bridgman’s view that

…in general, we mean by a concept nothing more than a set of operations; the concept is synonymous with the corresponding sets of operations. (Bridgman 1927, 5)

one might suppose that operationalizations are definitions or meaning rules such that it is analytically true, e.g., that every liquid that turns litmus red in a properly conducted test is acidic. But it is more faithful to actual scientific practice to think of operationalizations as defeasible rules for the application of a concept such that both the rules and their applications are subject to revision on the basis of new empirical or theoretical developments. So understood, to operationalize is to adopt verbal and related practices for the purpose of enabling scientists to do their work. Operationalizations are thus sensitive and subject to change on the basis of findings that influence their usefulness (Feest 2005).

Definitional or not, investigators in different research traditions may be trained to report their observations in conformity with conflicting operationalizations. Thus instead of training observers to describe what they see in a bubble chamber as a whitish streak or a trail, one might train them to say they see a particle track or even a particle. This may reflect what Kuhn meant by suggesting that some observers might be justified or even required to describe themselves as having seen oxygen, transparent and colorless though it is, or atoms, invisible though they are (Kuhn 1962, 127ff). To the contrary, one might object that what one sees should not be confused with what one is trained to say when one sees it, and therefore that talking about seeing a colorless gas or an invisible particle may be nothing more than a picturesque way of talking about what certain operationalizations entitle observers to say. Strictly speaking, the objection concludes, the term ‘observation report’ should be reserved for descriptions that are neutral with respect to conflicting operationalizations.

If observational data are just those utterances that meet Feyerabend’s decidability and agreeability conditions, the import of semantic theory loading depends upon how quickly, and for which sentences reasonably sophisticated language users who stand in different paradigms can non-inferentially reach the same decisions about what to assert or deny. Some would expect enough agreement to secure the objectivity of observational data. Others would not. Still others would try to supply different standards for objectivity.

The example of Pettersson’s and Rutherford’s scintillation screen evidence (above) attests to the fact that observers working in different laboratories sometimes report seeing different things under similar conditions. It’s plausible that their expectations influence their reports. It’s plausible that their expectations are shaped by their training and by their supervisors’ and associates’ theory driven behavior. But as happens in other cases as well, all parties to the dispute agreed to reject Pettersson’s data by appeal to results of mechanical manipulations both laboratories could obtain and interpret in the same way without compromising their theoretical commitments.

Furthermore proponents of incompatible theories often produce impressively similar observational data. Much as they disagreed about the nature of respiration and combustion, Priestley and Lavoisier gave quantitatively similar reports of how long their mice stayed alive and their candles kept burning in closed bell jars. Priestley taught Lavoisier how to obtain what he took to be measurements of the phlogiston content of an unknown gas. A sample of the gas to be tested is run into a graduated tube filled with water and inverted over a water bath. After noting the height of the water remaining in the tube, the observer adds “nitrous air” (we call it nitric oxide) and checks the water level again. Priestley, who thought there was no such thing as oxygen, believed the change in water level indicated how much phlogiston the gas contained. Lavoisier reported observing the same water levels as Priestley even after he abandoned phlogiston theory and became convinced that changes in water level indicated free oxygen content (Conant 1957, 74–109).

The moral of these examples is that although paradigms or theoretical commitments sometimes have an epistemically significant influence on what observers perceive, it can be relatively easy to nullify or correct for their effects.

Typical responses to this question maintain that the acceptability of theoretical claims depends upon whether they are true (approximately true, probable, or significantly more probable than their competitors) or whether they “save” observable phenomena. They then try to explain how observational data argue for or against the possession of one or more of these virtues.

Truth. It’s natural to think that computability, range of application, and other things being equal, true theories are better than false ones, good approximations are better than bad ones, and highly probable theoretical claims are better than less probable ones. One way to decide whether a theory or a theoretical claim is true, close to the truth, or acceptably probable is to derive predictions from it and use observational data to evaluate them. Hypothetico-Deductive (HD) confirmation theorists propose that observational evidence argues for the truth of theories whose deductive consequences it verifies, and against those whose consequences it falsifies (Popper 1959, 32–34). But laws and theoretical generalizations seldom if ever entail observational predictions unless they are conjoined with one or more auxiliary hypotheses taken from the theory they belong to. When the prediction turns out to be false, HD has trouble explaining which of the conjuncts is to blame. If a theory entails a true prediction, it will continue to do so in conjunction with arbitrarily selected irrelevant claims. HD has trouble explaining why the prediction doesn’t confirm the irrelevancies along with the theory of interest.
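
The two difficulties for HD just described can be set out schematically. The following is a sketch only; the letters $T$ (the theory or law under test), $A$ (the conjoined auxiliary hypotheses), $O$ (the observational prediction) and $X$ (an arbitrary irrelevant claim) are labels introduced here for illustration, not notation from the source.

```latex
% Blame problem: a failed prediction refutes only the conjunction.
(T \land A) \vdash O, \qquad \lnot O
\quad\Longrightarrow\quad
\lnot (T \land A) \;\equiv\; \lnot T \lor \lnot A

% Irrelevant conjunction: entailment survives the addition of any X.
T \vdash O
\quad\Longrightarrow\quad
(T \land X) \vdash O \quad \text{for arbitrary } X
```

In the first schema logic alone does not say whether $T$ or $A$ should be given up. In the second, if verifying $O$ confirms whatever entails it, then it seems to confirm $T \land X$ as well, however irrelevant $X$ may be.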

Ignoring details, large and small, bootstrapping confirmation theories hold that an observation report confirms a theoretical generalization if an instance of the generalization follows from the observation report conjoined with auxiliary hypotheses from the theory the generalization belongs to. Observation counts against a theoretical claim if the conjunction entails a counter-instance. Here, as with HD, an observation argues for or against a theoretical claim only on the assumption that the auxiliary hypotheses are true (Glymour 1980, 110–175).

Bayesians hold that the evidential bearing of observational evidence on a theoretical claim is to be understood in terms of likelihood or conditional probability. For example, whether observational evidence argues for a theoretical claim might be thought to depend upon whether it is more probable (and if so how much more probable) than its denial conditional on a description of the evidence together with background beliefs, including theoretical commitments. But by Bayes’ theorem, the conditional probability of the claim of interest will depend in part upon that claim’s prior probability. Once again, one’s use of evidence to evaluate a theory depends in part upon one’s theoretical commitments (Earman 1992, 33–86; Roush 2005, 149–186).
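
The dependence on priors can be illustrated with a small calculation. The following Python sketch applies Bayes’ theorem to a claim and its denial. The function name `posterior` and all the numbers are hypothetical, chosen only to show how two investigators who agree on the likelihoods but start from different priors end up with different posterior probabilities on the same evidence.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Return P(H | E) by Bayes' theorem for a claim H and its denial.

    prior               -- P(H), the investigator's prior probability for H
    likelihood_if_true  -- P(E | H)
    likelihood_if_false -- P(E | not-H)
    """
    numerator = likelihood_if_true * prior
    # Total probability of the evidence E across H and not-H.
    evidence = numerator + likelihood_if_false * (1.0 - prior)
    return numerator / evidence


# Hypothetical figures: both investigators accept the same likelihoods,
# but their theoretical commitments give them different priors.
sympathetic = posterior(prior=0.5, likelihood_if_true=0.8, likelihood_if_false=0.2)
sceptical = posterior(prior=0.05, likelihood_if_true=0.8, likelihood_if_false=0.2)

print(f"sympathetic investigator: {sympathetic:.3f}")
print(f"sceptical investigator:   {sceptical:.3f}")
```

On these assumed figures the same evidence leaves the sympathetic investigator at a posterior of 0.8 and the sceptical one below 0.2, which is the sense in which the use of evidence depends on prior commitments.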

Francis Bacon (Bacon 1620, 70) said that allowing one’s commitment to a theory to determine what one takes to be the epistemic bearing of observational evidence on that very theory is, if anything, even worse than ignoring the evidence altogether. HD, Bootstrap, Bayesian, and related accounts of confirmation run the risk of earning Bacon’s disapproval. According to all of them it can be reasonable for adherents of competing theories to disagree about how observational data bear on the same claims. As a matter of historical fact, such disagreements do occur. The moral of this fact depends upon whether and how such disagreements can be resolved. Because some of the components of a theory are logically and more or less probabilistically independent of one another, adherents of competing theories can often find ways to bring themselves into close enough agreement about auxiliary hypotheses or prior probabilities to draw the same conclusions from the evidence.

Saving observable phenomena. Theories are said to save observable phenomena if they satisfactorily predict, describe, or systematize them. How well a theory performs any of these tasks need not depend upon the truth or accuracy of its basic principles. Thus according to Osiander’s preface to Copernicus’ On the Revolutions, a locus classicus, astronomers ‘…cannot in any way attain to true causes’ of the regularities among observable astronomical events, and must content themselves with saving the phenomena in the sense of using

…whatever suppositions enable …[them] to be computed correctly from the principles of geometry for the future as well as the past…(Osiander 1543, XX)

Theorists are to use those assumptions as calculating tools without committing themselves to their truth. In particular, the assumption that the planets rotate around the sun must be evaluated solely in terms of how useful it is in calculating their observable relative positions to a satisfactory approximation.

Pierre Duhem’s Aim and Structure of Physical Theory articulates a related conception. For Duhem a physical theory

…is a system of mathematical propositions, deduced from a small number of principles, which aim to represent as simply and completely, and exactly as possible, a set of experimental laws. (Duhem 1906, 19)

‘Experimental laws’ are general, mathematical descriptions of observable experimental results. Investigators produce them by performing measuring and other experimental operations and assigning symbols to perceptible results according to pre-established operational definitions (Duhem 1906, 19). For Duhem, the main function of a physical theory is to help us store and retrieve information about observables we would not otherwise be able to keep track of. If that’s what a theory is supposed to accomplish, its main virtue should be intellectual economy. Theorists are to replace reports of individual observations with experimental laws and devise higher level laws (the fewer, the better) from which experimental laws (the more, the better) can be mathematically derived (Duhem 1906, 21ff).

A theory’s experimental laws can be tested for accuracy and comprehensiveness by comparing them to observational data. Let EL be one or more experimental laws that perform acceptably well on such tests. Higher level laws can then be evaluated on the basis of how well they integrate EL into the rest of the theory. Some data that don’t fit integrated experimental laws won’t be interesting enough to worry about. Other data may need to be accommodated by replacing or modifying one or more experimental laws or adding new ones. If the required additions, modifications or replacements deliver experimental laws that are harder to integrate, the data count against the theory. If the required changes are conducive to improved systematization the data count in favor of it. If the required changes make no difference, the data don’t argue for or against the theory.

It is an unwelcome fact for all of these ideas about theory testing that data are typically produced in ways that make it impossible to predict them from the generalizations they are used to test, or to derive instances of those generalizations from data and non ad hoc auxiliary hypotheses. Indeed, it’s unusual for many members of a set of reasonably precise quantitative data to agree with one another, let alone with a quantitative prediction. That is because precise, publicly accessible data typically cannot be produced except through processes whose results reflect the influence of causal factors that are too numerous, too different in kind, and too irregular in behavior for any single theory to account for them. When Bernard Katz recorded electrical activity in nerve fiber preparations, the numerical values of his data were influenced by factors peculiar to the operation of his galvanometers and other pieces of equipment, variations among the positions of the stimulating and recording electrodes that had to be inserted into the nerve, the physiological effects of their insertion, and changes in the condition of the nerve as it deteriorated during the course of the experiment. There were variations in the investigators’ handling of the equipment. Vibrations shook the equipment in response to a variety of irregularly occurring causes ranging from random error sources to the heavy tread of Katz’s teacher, A.V. Hill, walking up and down the stairs outside of the laboratory. That’s a short list. To make matters worse, many of these factors influenced the data as parts of irregularly occurring, transient, and shifting assemblies of causal influences.

With regard to kinds of data that should be of interest to philosophers of physics, consider how many extraneous causes influenced radiation data in solar neutrino detection experiments, or spark chamber photographs produced to detect particle interactions. The effects of systematic and random sources of error are typically such that considerable analysis and interpretation are required to take investigators from data sets to conclusions that can be used to evaluate theoretical claims.

This applies as much to clear cases of perceptual data as to machine produced records. When 19th and early 20th century astronomers looked through telescopes and pushed buttons to record the time at which they saw a moon pass a crosshair, the values of their data points depended, not only upon light reflected from the moon, but also upon features of perceptual processes, reaction times, and other psychological factors that varied non-systematically from time to time and observer to observer. No astronomical theory has the resources to take such things into account. Similar considerations apply to the probabilities of specific data points conditional on theoretical principles, and the probabilities of confirming or disconfirming instances of theoretical claims conditional on the values of specific data points.

Instead of testing theoretical claims by direct comparison to raw data, investigators use data to infer facts about phenomena, i.e., events, regularities, processes, etc. whose instances are uniform and uncomplicated enough to make them susceptible to systematic prediction and explanation (Bogen and Woodward 1988, 317). The fact that lead melts at temperatures at or close to 327.5 °C is an example of a phenomenon, as are widespread regularities among electrical quantities involved in the action potential, the periods and orbital paths of the planets, etc. Theories that cannot be expected to predict or explain such things as individual temperature readings can nevertheless be evaluated on the basis of how useful they are in predicting or explaining phenomena they are used to detect. The same holds for the action potential as opposed to the electrical data from which its features are calculated, and the orbits of the planets in contrast to the data of positional astronomy. It’s reasonable to ask a genetic theory how probable it is (given similar upbringings in similar environments) that the offspring of a schizophrenic parent or parents will develop one or more symptoms the DSM classifies as indicative of schizophrenia. But it would be quite unreasonable to ask it to predict or explain one patient’s numerical score on one trial of a particular diagnostic test, or why a diagnostician wrote a particular entry in her report of an interview with an offspring of a schizophrenic parent (Bogen and Woodward 1988, 319–326).

The fact that theories are better at predicting and explaining facts about or features of phenomena than data isn’t such a bad thing. For many purposes, theories that predict and explain phenomena would be more illuminating, and more useful for practical purposes than theories (if there were any) that predicted or explained members of a data set. Suppose you could choose between a theory that predicted or explained the way in which neurotransmitter release relates to neuronal spiking (e.g., the fact that on average, transmitters are released roughly once for every 10 spikes) and a theory which explained or predicted the numbers displayed on the relevant experimental equipment in one, or a few single cases. For most purposes, the former theory would be preferable to the latter at the very least because it applies to so many more cases. And similarly for theories that predict or explain something about the probability of schizophrenia conditional on some genetic factor or a theory that predicted or explained the probability of faulty diagnoses of schizophrenia conditional on facts about the psychiatrist’s training. For most purposes, these would be preferable to a theory that predicted specific descriptions in a case history.

In view of all of this, together with the fact that a great many theoretical claims can only be tested directly against facts about phenomena, it behooves epistemologists to think about how data are used to answer questions about phenomena. Lacking space for a detailed discussion, the most this entry can do is to mention two main kinds of things investigators do in order to draw conclusions from data. The first is causal analysis carried out with or without the use of statistical techniques. The second is non-causal statistical analysis.

First, investigators must distinguish features of the data that are indicative of facts about the phenomenon of interest from those which can safely be ignored, and those which must be corrected for. Sometimes background knowledge makes this easy. Under normal circumstances investigators know that their thermometers are sensitive to temperature, and their pressure gauges, to pressure. An astronomer or a chemist who knows what spectrographic equipment does, and what she has applied it to, will know what her data indicate. Sometimes it’s less obvious. When Ramón y Cajal looked through his microscope at a thin slice of stained nerve tissue, he had to figure out which if any of the fibers he could see at one focal length connected to or extended from things he could see only at another focal length, or in another slice.

Analogous considerations apply to quantitative data. It was easy for Katz to tell when his equipment was responding more to Hill’s footfalls on the stairs than to the electrical quantities it was set up to measure. It can be harder to tell whether an abrupt jump in the amplitude of a high frequency EEG oscillation was due to a feature of the subject’s brain activity or an artifact of extraneous electrical activity in the laboratory or operating room where the measurements were made. The answers to questions about which features of numerical and non-numerical data are indicative of a phenomenon of interest typically depend at least in part on what is known about the causes that conspire to produce the data.

Statistical arguments are often used to deal with questions about the influence of epistemically relevant causal factors. For example, when it is known that similar data can be produced by factors that have nothing to do with the phenomenon of interest, Monte Carlo simulations, regression analyses of sample data, and a variety of other statistical techniques sometimes provide investigators with their best chance of deciding how seriously to take a putatively illuminating feature of their data.
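The role a Monte Carlo simulation can play here may be illustrated with a minimal sketch. It asks how often a jump at least as large as an observed one would appear in data produced by noise alone. The noise model (independent Gaussian samples), the function names, and the parameter values are all assumptions made for illustration; they are not drawn from any of the experiments discussed above.

```python
import random

def max_jump(trace):
    """Largest absolute change between consecutive samples of a trace."""
    return max(abs(b - a) for a, b in zip(trace, trace[1:]))

def noise_only_fraction(observed_jump, n_samples, noise_sd,
                        n_sims=500, seed=0):
    """Fraction of simulated noise-only traces whose largest jump is at
    least as big as the observed one.

    Assumption: extraneous influences behave like independent Gaussian
    noise with standard deviation `noise_sd`. Real artifacts need not.
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    hits = 0
    for _ in range(n_sims):
        trace = [rng.gauss(0.0, noise_sd) for _ in range(n_samples)]
        if max_jump(trace) >= observed_jump:
            hits += 1
    return hits / n_sims

# Hypothetical usage: a trace of 200 samples with unit noise.
fraction = noise_only_fraction(observed_jump=5.0, n_samples=200, noise_sd=1.0)
```

A fraction near 1 suggests that noise alone readily produces such a jump, so the feature deserves little weight. A fraction near 0 suggests the jump is hard to attribute to noise of the assumed kind, which is only as informative as the noise model is realistic.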

But statistical techniques are also required for purposes other than causal analysis. To calculate the magnitude of a quantity like the melting point of lead from a scatter of numerical data, investigators throw out outliers, calculate the mean and the standard deviation, etc., and establish confidence and significance levels. Regression and other techniques are applied to the results to estimate how far from the mean the magnitude of interest can be expected to fall in the population of interest (e.g., the range of temperatures at which pure samples of lead can be expected to melt).
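The first steps of such a calculation can be sketched as follows. The readings are invented for illustration, and the outlier rule (a modified z-score based on the median absolute deviation, with a cutoff of 3.5) and the normal approximation for the confidence interval are assumptions of this sketch rather than procedures attributed to any investigator. The regression step mentioned above is not covered.

```python
import math
import statistics

def drop_outliers(readings, cutoff=3.5):
    """Discard readings whose modified z-score, computed from the median
    absolute deviation (MAD), exceeds `cutoff`."""
    med = statistics.median(readings)
    mad = statistics.median([abs(r - med) for r in readings])
    if mad == 0:
        return list(readings)  # no spread to judge outliers against
    return [r for r in readings if 0.6745 * abs(r - med) / mad <= cutoff]

def estimate(readings, confidence=0.95):
    """Return the kept readings, their mean, their sample standard
    deviation, and an approximate confidence interval for the mean.

    Assumption: the interval uses a normal approximation, which is
    crude for samples this small.
    """
    kept = drop_outliers(readings)
    mean = statistics.mean(kept)
    sd = statistics.stdev(kept)
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * sd / math.sqrt(len(kept))
    return kept, mean, sd, (mean - half_width, mean + half_width)

# Hypothetical melting-point readings in degrees Celsius; the value
# 341.2 stands in for a reading spoiled by some extraneous cause.
READINGS_C = [327.1, 327.9, 327.4, 327.6, 326.9,
              328.0, 327.5, 327.3, 341.2, 327.7]

kept, mean, sd, interval = estimate(READINGS_C)
```

With these invented readings the spoiled value is discarded and the interval computed from the remaining nine brackets 327.5. Which readings count as outliers depends on the rule chosen, and that choice is itself a piece of the causal and statistical argumentation at issue.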

The fact that little can be learned from data without causal, statistical, and related argumentation has interesting consequences for received ideas about how the use of observational evidence distinguishes science from pseudoscience, religion, and other non-scientific cognitive endeavors. First, scientists aren’t the only ones who use observational evidence to support their claims; astrologers and medical quacks use it too. To find epistemically significant differences, one must carefully consider what sorts of data they use, where the data come from, and how they are employed. The virtues of scientific as opposed to non-scientific theory evaluations depend not only on their reliance on empirical data, but also on how the data are produced, analyzed and interpreted to draw conclusions against which theories can be evaluated. Second, it doesn’t take many examples to refute the notion that adherence to a single, universally applicable “scientific method” differentiates the sciences from the non-sciences. Data are produced and used in far too many different ways to be treated informatively as instances of any single method. Third, it is usually, if not always, impossible for investigators to draw conclusions to test theories against observational data without explicit or implicit reliance on theoretical principles. This means that when counterparts to Kuhnian questions about theory loading and its epistemic significance arise in connection with the analysis and interpretation of observational evidence, such questions must be answered by appeal to details that vary from case to case.

Grammatical variants of the term ‘observation’ have been applied to impressively different perceptual and non-perceptual processes and to records of the results they produce. Their diversity is a reason to doubt whether general philosophical accounts of observation, observables, and observational data can tell epistemologists as much as local accounts grounded in close studies of specific kinds of cases. Furthermore, scientists continue to find ways to produce data that can’t be called observational without stretching the term to the point of vagueness.

It’s plausible that philosophers who value the kind of rigor, precision, and generality to which logical empiricists and other exact philosophers aspired could do better by examining and developing techniques and results from logic, probability theory, statistics, machine learning, computer modeling, etc. than by trying to construct highly general theories of observation and its role in science. Logic and the rest seem unable to deliver satisfactory, universally applicable accounts of scientific reasoning. But they have illuminating local applications, some of which can be of use to scientists as well as philosophers.

- Aristotle(a), Generation of Animals in Complete Works of Aristotle (Volume 1), J. Barnes (ed.), Princeton: Princeton University Press, 1995, pp. 774–993.

- Aristotle(b), History of Animals in Complete Works of Aristotle (Volume 1), J. Barnes (ed.), Princeton: Princeton University Press, 1995, pp. 1111–1228.

- Azzouni, J., 2004, “Theory, Observation, and Scientific Realism,” British Journal for the Philosophy of Science, 55(3): 371–392.