

Correlational Research Vs Experimental Research


Correlational research and experimental research are two different research approaches used in social sciences and other fields of research.

Correlational Research

Correlational Research is a research approach that examines the relationship between two or more variables. It involves measuring the degree of association or correlation between the variables without manipulating them. The goal of correlational research is to identify whether there is a relationship between the variables and the strength of that relationship. Correlational research is typically conducted through surveys, observational studies, or secondary data analysis.
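The "degree of association" described above is commonly summarized with a correlation coefficient. The following is a minimal sketch of Pearson's r using only the Python standard library. The function name `pearson_r` and the survey numbers are hypothetical, chosen only for illustration.

```python
import math


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squares around the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical survey data: weekly study hours and exam scores for six students.
study_hours = [2, 4, 5, 7, 8, 10]
exam_scores = [55, 60, 62, 70, 74, 83]

r = pearson_r(study_hours, exam_scores)
print(f"r = {r:.2f}")  # values near +1 or -1 indicate a strong linear association
```

A value of r close to +1 or -1 indicates a strong linear relationship, but because nothing was manipulated, it says nothing about which variable, if either, causes the other.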

Experimental Research

Experimental Research, on the other hand, is a research approach that involves the manipulation of one or more variables to observe the effect on another variable. The goal of experimental research is to establish a cause-and-effect relationship between the variables. Experimental research is typically conducted in a controlled environment and involves random assignment of participants to different groups to ensure that the groups are equivalent. The data is collected through measurements and observations, and statistical analysis is used to test the hypotheses.

Difference Between Correlational Research and Experimental Research

Here’s a comparison table that highlights the differences between correlational research and experimental research:

| Aspect | Correlational Research | Experimental Research |
|---|---|---|
| Definition | Examines the relationship between two or more variables without manipulating them | Involves the manipulation of one or more variables to observe the effect on another variable |
| Purpose | To identify the strength and direction of the relationship between variables | To establish a cause-and-effect relationship between variables |
| Methods | Surveys, observational studies, or secondary data analysis | Controlled experiments with random assignment of participants |
| Statistical analysis | Correlation coefficients, regression analysis | Inferential statistics, analysis of variance (ANOVA) |
| Type of conclusion | Association between variables | Causality between variables |
| Example | Examining the relationship between smoking and lung cancer | Testing the effect of a new medication on a particular disease |


About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Experimental and Quasi-Experimental Research

Guide Title: Experimental and Quasi-Experimental Research Guide ID: 64

You approach a stainless-steel wall, separated vertically along its middle where two halves meet. After looking to the left, you see two buttons on the wall to the right. You press the top button and it lights up. A soft tone sounds and the two halves of the wall slide apart to reveal a small room. You step into the room. Looking to the left, then to the right, you see a panel of more buttons. You know that you seek a room marked with the numbers 1-0-1-2, so you press the button marked "10." The halves slide shut and enclose you within the cubicle, which jolts upward. Soon, the soft tone sounds again. The door opens again. On the far wall, a sign silently proclaims, "10th floor."

You have engaged in a series of experiments. A ride in an elevator may not seem like an experiment, but it, and each step taken towards its ultimate outcome, are common examples of a search for a causal relationship, which is what experimentation is all about.

You started with the hypothesis that this is in fact an elevator. You proved that you were correct. You then hypothesized that the button to summon the elevator was on the left, which was incorrect, so then you hypothesized it was on the right, and you were correct. You hypothesized that pressing the button marked with the up arrow would not only bring an elevator to you, but that it would be an elevator heading in the up direction. You were right.

As this guide explains, the deliberate process of testing hypotheses and reaching conclusions is an extension of commonplace testing of cause and effect relationships.

Basic Concepts of Experimental and Quasi-Experimental Research

Discovering causal relationships is the key to experimental research. In abstract terms, this means identifying a relationship in which a certain action, X, alone creates the effect Y. For example, turning the volume knob on your stereo clockwise causes the sound to get louder. In addition, you could observe that turning the knob clockwise alone, and nothing else, caused the sound level to increase. You could further conclude that a causal relationship exists between turning the knob clockwise and an increase in volume, not simply because one caused the other, but because you are certain that nothing else caused the effect.

Independent and Dependent Variables

Beyond discovering causal relationships, experimental research further seeks out how much cause will produce how much effect; in technical terms, how the independent variable will affect the dependent variable. You know that turning the knob clockwise will produce a louder noise, but by varying how much you turn it, you see how much sound is produced. On the other hand, you might find that although you turn the knob a great deal, sound doesn't increase dramatically. Or, you might find that turning the knob just a little adds more sound than expected. The amount that you turned the knob is the independent variable, the variable that the researcher controls, and the amount of sound that resulted from turning it is the dependent variable, the change that is caused by the independent variable.
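One way to quantify "how much cause will produce how much effect" is to fit a straight line to paired measurements of the independent and dependent variables. The sketch below uses ordinary least squares. The function name `least_squares_line` and the knob and loudness readings are hypothetical, and a straight line is itself an assumption that real data may not satisfy.

```python
def least_squares_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("the independent variable must take more than one value")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical readings: knob rotation (independent variable, in degrees)
# and measured loudness (dependent variable, in decibels).
knob_degrees = [0, 30, 60, 90, 120]
loudness_db = [40, 46, 52, 58, 64]

slope, intercept = least_squares_line(knob_degrees, loudness_db)
print(f"each extra degree of rotation adds about {slope:.2f} dB")
```

The slope estimates how much the dependent variable changes per unit change in the independent variable. A small slope corresponds to the case where turning the knob a great deal barely raises the volume.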

Experimental research also looks into the effects of removing something. For example, if you remove a loud noise from the room, will the person next to you be able to hear you? Or how much noise needs to be removed before that person can hear you?

Treatment and Hypothesis

The term treatment refers to either removing or adding a stimulus in order to measure an effect (such as turning the knob a little or a lot, or reducing the noise level a little or a lot). Experimental researchers want to know how varying levels of treatment will affect what they are studying. As such, researchers often have an idea, or hypothesis, about what effect will occur when they cause something. Few experiments are performed where there is no idea of what will happen. From past experiences in life or from the knowledge we possess in our specific field of study, we know how some actions cause other reactions. Experiments confirm or reconfirm this fact.

Experimentation becomes more complex when the causal relationships researchers seek aren't as clear as in the stereo knob-turning examples. Questions like "Will olestra cause cancer?" or "Will this new fertilizer help this plant grow better?" present more to consider. For example, any number of things could affect the growth rate of a plant: the temperature, how much water or sun it receives, or how much carbon dioxide is in the air. These variables can affect an experiment's results. An experimenter who wants to show that adding a certain fertilizer will help a plant grow better must ensure that it is the fertilizer, and nothing else, affecting the growth patterns of the plant. To do this, as many of these variables as possible must be controlled.

Matching and Randomization

In the example used in this guide (you'll find the example below), we discuss an experiment that focuses on three groups of plants -- one that is treated with a fertilizer named MegaGro, another group treated with a fertilizer named Plant!, and yet another that is not treated with fertilizer (this latter group serves as a "control" group). In this example, even though the designers of the experiment have tried to remove all extraneous variables, results may appear merely coincidental. Since the goal of the experiment is to prove a causal relationship in which a single variable is responsible for the effect produced, the experiment would produce stronger proof if the results were replicated in larger treatment and control groups.

Selecting groups entails assigning subjects in the groups of an experiment in such a way that treatment and control groups are comparable in all respects except the application of the treatment. Groups can be created in two ways: matching and randomization. In the MegaGro experiment discussed below, the plants might be matched according to characteristics such as age, weight and whether they are blooming. This involves distributing these plants so that each plant in one group exactly matches characteristics of plants in the other groups. Matching may be problematic, though, because it "can promote a false sense of security by leading [the experimenter] to believe that [the] experimental and control groups were really equated at the outset, when in fact they were not equated on a host of variables" (Jones, 291). In other words, you may have flowers for your MegaGro experiment that you matched and distributed among groups, but other variables are unaccounted for. It would be difficult to have equal groupings.

Randomization, then, is preferred to matching. This method is based on the statistical principle of normal distribution. Theoretically, any arbitrarily selected group of adequate size will reflect normal distribution. Differences between groups will average out and become more comparable. The principle of normal distribution states that in a population most individuals will fall within the middle range of values for a given characteristic, with increasingly fewer toward either extreme (graphically represented as the ubiquitous "bell curve").
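Random assignment can be sketched in a few lines. The example below shuffles a pool of subjects and deals them into equal-sized groups. The function name `randomly_assign`, the plant identifiers, and the group size of ten are hypothetical choices for illustration. The fixed seed is there only to make the run reproducible.

```python
import random


def randomly_assign(subjects, group_names, seed=None):
    """Shuffle subjects and deal them round-robin into the named groups."""
    rng = random.Random(seed)  # a seeded generator makes the assignment repeatable
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for index, subject in enumerate(shuffled):
        groups[group_names[index % len(group_names)]].append(subject)
    return groups


# Hypothetical pool of 30 plants split across two treatments and a control.
plants = [f"plant_{i:02d}" for i in range(1, 31)]
groups = randomly_assign(plants, ["MegaGro", "Plant!", "control"], seed=42)

for name, members in groups.items():
    print(name, len(members))
```

Because chance alone decides which plant lands in which group, characteristics such as age or weight have no systematic tendency to pile up in one group, and the larger the groups, the more closely they tend to resemble one another.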

Differences between Quasi-Experimental and Experimental Research

Thus far, we have explained that for experimental research we need:

- a hypothesis for a causal relationship;

- a control group and a treatment group;

- to eliminate confounding variables that might mess up the experiment and prevent displaying the causal relationship; and

- to have larger groups with a carefully sorted constituency; preferably randomized, in order to keep accidental differences from fouling things up.

But what if we don't have all of those? Do we still have an experiment? Not a true experiment in the strictest scientific sense of the term, but we can have a quasi-experiment, an attempt to uncover a causal relationship, even though the researcher cannot control all the factors that might affect the outcome.

A quasi-experimenter treats a given situation as an experiment even though it is not wholly by design. The independent variable may not be manipulated by the researcher, treatment and control groups may not be randomized or matched, or there may be no control group. The researcher is limited in what he or she can say conclusively.

The significant element of both experiments and quasi-experiments is the measure of the dependent variable, which allows for comparison. Some data is quite straightforward, but other measures, such as level of self-confidence in writing ability, increase in creativity, or increase in reading comprehension, are inescapably subjective. In such cases, quasi-experimentation often involves a number of strategies to compare subjectivity, such as rating data, testing, surveying, and content analysis.

Rating essentially is developing a rating scale to evaluate data. In testing, experimenters and quasi-experimenters use ANOVA (Analysis of Variance) and ANCOVA (Analysis of Covariance) tests to measure differences between control and experimental groups, as well as different correlations between groups.

Since we're mentioning the subject of statistics, note that experimental or quasi-experimental research cannot state beyond a shadow of a doubt that a single cause will always produce any one effect. Such research can do no more than show a probability that one thing causes another. The probability that a result is due to random chance is an important measure in statistical analysis and in experimental research.
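One way to estimate the probability that a difference between groups is due to chance is a permutation test. This is a different technique from the ANOVA and ANCOVA tests named above, used here only because it fits in a short standard-library sketch. The function name `permutation_p_value` and the growth measurements are hypothetical.

```python
import random
from statistics import mean


def permutation_p_value(treatment, control, n_permutations=10_000, seed=0):
    """Estimate how often random relabeling gives a mean difference at least as large as observed."""
    rng = random.Random(seed)
    observed = abs(mean(treatment) - mean(control))
    pooled = list(treatment) + list(control)
    n_treatment = len(treatment)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend the group labels were handed out at random
        diff = abs(mean(pooled[:n_treatment]) - mean(pooled[n_treatment:]))
        if diff >= observed - 1e-12:  # small tolerance for floating-point rounding
            at_least_as_extreme += 1
    return at_least_as_extreme / n_permutations


# Hypothetical growth (cm) after four weeks.
megagro_growth = [12.1, 13.4, 11.8, 14.0, 13.1, 12.7]
control_growth = [10.2, 11.0, 10.8, 11.5, 10.1, 10.9]

p = permutation_p_value(megagro_growth, control_growth)
print(f"estimated p-value: {p:.4f}")
```

A small p-value means that a difference this large would rarely arise if the treatment made no difference. It does not prove the causal claim. It only makes chance a less plausible explanation.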

Example: Causality

Let's say you want to determine that your new fertilizer, MegaGro, will increase the growth rate of plants. You begin by getting a plant to go with your fertilizer. Since the experiment is concerned with proving that MegaGro works, you need another plant, using no fertilizer at all on it, to compare how much change your fertilized plant displays. This is what is known as a control group.

Set up with a control group, which will receive no treatment, and an experimental group, which will get MegaGro, you must then address those variables that could invalidate your experiment. This can be an extensive and exhaustive process. You must ensure that you use the same plant; that both groups are put in the same kind of soil; that they receive equal amounts of water and sun; that they receive the same amount of exposure to carbon-dioxide-exhaling researchers, and so on. In short, any other variable that might affect the growth of those plants, other than the fertilizer, must be the same for both plants. Otherwise, you can't prove absolutely that MegaGro is the only explanation for the increased growth of one of those plants.

Such an experiment can be done on more than two groups. You may not only want to show that MegaGro is an effective fertilizer, but that it is better than its competitor brand of fertilizer, Plant! All you need to do, then, is have one experimental group receiving MegaGro, one receiving Plant! and the other (the control group) receiving no fertilizer. Those are the only variables that can be different between the three groups; all other variables must be the same for the experiment to be valid.
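With three groups, one common way to compare them is a one-way analysis of variance. The sketch below computes only the F statistic, by hand, so that the arithmetic is visible. The function name `one_way_anova_f` and the growth figures are hypothetical. Converting F into a probability requires the F distribution, which a statistics package (for example, `scipy.stats.f_oneway`) would handle.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of groups of measurements."""
    all_values = [value for group in groups for value in group]
    grand_mean = mean(all_values)
    k = len(groups)       # number of groups
    n = len(all_values)   # total number of observations
    if k < 2 or n <= k:
        raise ValueError("need at least 2 groups and more observations than groups")
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual observations around their own group mean.
    ss_within = sum((value - mean(g)) ** 2 for g in groups for value in g)
    if ss_within == 0:
        raise ValueError("F is undefined when there is no within-group variation")
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical growth (cm) for the three groups in the example.
megagro = [12.1, 13.4, 11.8, 14.0, 13.1]
plant_brand = [11.5, 12.0, 11.2, 12.4, 11.9]
control = [10.2, 11.0, 10.8, 11.5, 10.1]

f_stat = one_way_anova_f([megagro, plant_brand, control])
print(f"F = {f_stat:.2f}")
```

A large F means the group means differ by more than the spread within each group would lead one to expect. It does not say which of the three groups differs from which.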

Controlling variables allows the researcher to identify conditions that may affect the experiment's outcome. This may lead to alternative explanations that the researcher is willing to entertain in order to isolate only variables judged significant. In the MegaGro experiment, you may be concerned with how fertile the soil is, but not with the plants' relative position in the window, as you don't think that the amount of shade they get will affect their growth rate. But what if it did? You would have to go about eliminating variables in order to determine which is the key factor. What if one receives more shade than the other and the MegaGro plant, which received more shade, died? This might prompt you to formulate a plausible alternative explanation, which is a way of accounting for a result that differs from what you expected. You would then want to redo the study with equal amounts of sunlight.

Methods: Five Steps

Experimental research can be roughly divided into five phases:

Identifying a research problem

The process starts by clearly identifying the problem you want to study and considering which possible methods might bring about a solution. Then you choose the method you want to test, and formulate a hypothesis to predict the outcome of the test.

For example, you may want to improve student essays, but you don't believe that teacher feedback is enough. You hypothesize that some possible methods for writing improvement include peer workshopping, or reading more example essays. Favoring the former, your experiment would try to determine if peer workshopping improves writing in high school seniors. You state your hypothesis: peer workshopping prior to turning in a final draft will improve the quality of the student's essay.

Planning an experimental research study

The next step is to devise an experiment to test your hypothesis. In doing so, you must consider several factors. For example, how generalizable do you want your end results to be? Do you want to generalize about the entire population of high school seniors everywhere, or just the particular population of seniors at your specific school? This will determine how simple or complex the experiment will be. The amount of time and funding you have will also determine the size of your experiment.

Continuing the example from step one, you may want a small study at one school involving three teachers, each teaching two sections of the same course. The treatment in this experiment is peer workshopping. Each of the three teachers will assign the same essay assignment to both classes; the treatment group will participate in peer workshopping, while the control group will receive only teacher comments on their drafts.

Conducting the experiment

At the start of an experiment, the control and treatment groups must be selected. Whereas the "hard" sciences have the luxury of attempting to create truly equal groups, educators often find themselves forced to conduct their experiments based on self-selected groups, rather than on randomization. As was highlighted in the Basic Concepts section, this makes the study a quasi-experiment, since the researchers cannot control all of the variables.

For the peer workshopping experiment, let's say that it involves six classes and three teachers with a sample of students randomly selected from all the classes. Each teacher will have a class for a control group and a class for a treatment group. The essay assignment is given and the teachers are briefed not to change any of their teaching methods other than the use of peer workshopping. You may see here that this is an effort to control a possible variable: teaching style variance.
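The design above, in which each teacher has one control class and one treatment class, can be sketched as a per-teacher coin flip over that teacher's two sections. The function name `assign_sections_by_teacher` and the teacher and section labels are hypothetical. Note that this randomizes intact classes, not individual students, so it does not remove the self-selection problem described earlier.

```python
import random


def assign_sections_by_teacher(sections_by_teacher, seed=None):
    """For each teacher, randomly pick one section for treatment and one for control."""
    rng = random.Random(seed)
    assignment = {}
    for teacher, sections in sections_by_teacher.items():
        if len(sections) != 2:
            raise ValueError(f"{teacher} must have exactly two sections")
        # sample() returns the two sections in random order.
        treatment, control = rng.sample(sections, 2)
        assignment[teacher] = {"treatment": treatment, "control": control}
    return assignment


# Hypothetical teachers and section labels.
sections_by_teacher = {
    "Teacher A": ["A-period-1", "A-period-4"],
    "Teacher B": ["B-period-2", "B-period-5"],
    "Teacher C": ["C-period-3", "C-period-6"],
}

assignment = assign_sections_by_teacher(sections_by_teacher, seed=7)
for teacher, roles in assignment.items():
    print(teacher, roles)
```

Pairing a treatment and a control class under the same teacher is what keeps teaching style from differing systematically between the two conditions.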

Analyzing the data

The fourth step is to collect and analyze the data. This is not solely a step where you collect the papers, read them, and say your methods were a success. You must show how successful. You must devise a scale by which you will evaluate the data you receive, therefore you must decide what indicators will be, and will not be, important.

Continuing our example, the teachers' grades are first recorded, then the essays are evaluated for a change in sentence complexity, syntactical and grammatical errors, and overall length. Any statistical analysis is done at this time if you choose to do any. Notice here that the researcher has made judgments on what signals improved writing. It is not simply a matter of improved teacher grades, but a matter of what the researcher believes constitutes improved use of the language.
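Some of the indicators mentioned above, such as overall length, can be counted mechanically. The sketch below computes a few crude surface measures for one essay. The function name `essay_indicators`, the choice of indicators, and the very simple sentence-splitting rule are all assumptions made for illustration. Mean sentence length is at best a rough proxy for sentence complexity, and counting errors would still require a human rater or a dedicated tool.

```python
import re


def essay_indicators(text):
    """Return simple surface measures of an essay: words, sentences, mean sentence length."""
    # Naive rule: a sentence ends at one or more of . ! ?
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    mean_length = len(words) / len(sentences) if sentences else 0.0
    return {
        "word_count": len(words),
        "sentence_count": len(sentences),
        "mean_sentence_length": mean_length,
    }


# Hypothetical two-sentence draft.
draft = "I like cats. Dogs are fine too!"
print(essay_indicators(draft))
```

Applying the same function to every essay in both groups yields numbers that can be compared, which is the point of deciding on indicators before reading the papers.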

Writing the paper/presentation describing the findings

Once you have completed the experiment, you will want to share your findings by publishing an academic paper (or giving a presentation). These papers usually have the following format, but it is not necessary to follow it strictly. Sections can be combined or not included, depending on the structure of the experiment, and the journal to which you submit your paper.

- Abstract: Summarize the project: its aims, participants, basic methodology, results, and a brief interpretation.

- Introduction: Set the context of the experiment.

- Review of Literature: Provide a review of the literature in the specific area of study to show what work has been done. Should lead directly to the author's purpose for the study.

- Statement of Purpose: Present the problem to be studied.

- Participants: Describe in detail participants involved in the study; e.g., how many, etc. Provide as much information as possible.

- Materials and Procedures: Clearly describe materials and procedures. Provide enough information so that the experiment can be replicated, but not so much information that it becomes unreadable. Include how participants were chosen, the tasks assigned them, how they were conducted, how data were evaluated, etc.

- Results: Present the data in an organized fashion. If it is quantifiable, it is analyzed through statistical means. Avoid interpretation at this time.

- Discussion: After presenting the results, interpret what has happened in the experiment. Base the discussion only on the data collected and as objective an interpretation as possible. Hypothesizing is possible here.

- Limitations: Discuss factors that affect the results. Here, you can speculate how much generalization, or more likely, transferability, is possible based on results. This section is important for quasi-experimentation, since a quasi-experiment cannot control all of the variables that might affect the outcome of a study. You would discuss what variables you could not control.

- Conclusion: Synthesize all of the above sections.

- References: Document works cited in the correct format for the field.

Experimental and Quasi-Experimental Research: Issues and Commentary

Several issues are addressed in this section, including the use of experimental and quasi-experimental research in educational settings, the relevance of the methods to English studies, and ethical concerns regarding the methods.

Using Experimental and Quasi-Experimental Research in Educational Settings

Charting causal relationships in human settings.

Any time a human population is involved, prediction of causal relationships becomes cloudy and, some say, impossible. Many reasons exist for this; for example,

- researchers in classrooms add a disturbing presence, causing students to act abnormally, consciously or unconsciously;

- subjects try to please the researcher, just because of an apparent interest in them (known as the Hawthorne Effect); or, perhaps

- the teacher as researcher is restricted by bias and time pressures.

But such confounding variables don't stop researchers from trying to identify causal relationships in education. Educators naturally experiment anyway, comparing groups, assessing the attributes of each, and making predictions based on an evaluation of alternatives. They look to research to support their intuitive practices, experimenting whenever they try to decide which instruction method will best encourage student improvement.

Combining Theory, Research, and Practice

The goal of educational research lies in combining theory, research, and practice. Educational researchers attempt to establish models of teaching practice, learning styles, curriculum development, and countless other educational issues. The aim is to "try to improve our understanding of education and to strive to find ways to have understanding contribute to the improvement of practice," one writer asserts (Floden 1996, p. 197).

In quasi-experimentation, researchers try to develop models by involving teachers as researchers, employing observational research techniques. Although results of this kind of research are context-dependent and difficult to generalize, they can act as a starting point for further study. The "educational researcher . . . provides guidelines and interpretive material intended to liberate the teacher's intelligence so that whatever artistry in teaching the teacher can achieve will be employed" (Eisner 1992, p. 8).

Bias and Rigor

Critics contend that the educational researcher is inherently biased, sample selection is arbitrary, and replication is impossible. The key to combating such criticism has to do with rigor. Rigor is established through close, proper attention to randomizing groups, time spent on a study, and questioning techniques. This allows more effective application of standards of quantitative research to qualitative research.

Often, teachers cannot wait for piles of experimentation data to be analyzed before using the teaching methods (Lauer and Asher 1988). They ultimately must assess whether the results of a study in a distant classroom are applicable in their own classrooms. And they must continuously test the effectiveness of their methods by using experimental and qualitative research simultaneously. In addition to statistics (quantitative), researchers may perform case studies or observational research (qualitative) in conjunction with, or prior to, experimentation.

Relevance to English Studies

Situations in English studies that might encourage use of experimental methods.

Whenever a researcher would like to see if a causal relationship exists between groups, experimental and quasi-experimental research can be a viable research tool. Researchers in English Studies might use experimentation when they believe a relationship exists between two variables, and they want to show that these two variables have a significant correlation (or causal relationship).

A benefit of experimentation is the ability to control variables, such as the amount of treatment, when it is given, to whom and so forth. Controlling variables allows researchers to gain insight into the relationships they believe exist. For example, a researcher has an idea that writing under pseudonyms encourages student participation in newsgroups. Researchers can control which students write under pseudonyms and which do not, then measure the outcomes. Researchers can then analyze results and determine if this particular variable alone causes increased participation.

Transferability: Applying Results

Experimentation and quasi-experimentation allow for generating transferable results and accepting those results as being dependent upon experimental rigor. Transferability is an effective alternative to generalizability, which is difficult to rely upon in educational research. English scholars, reading results of experiments with a critical eye, ultimately decide if results will be implemented and how. They may even extend that existing research by replicating experiments in the interest of generating new results and benefiting from multiple perspectives. These results will strengthen the study or discredit findings.

Concerns English Scholars Express about Experiments

Researchers should carefully consider if a particular method is feasible in humanities studies, and whether it will yield the desired information. Some researchers recommend addressing pertinent issues combining several research methods, such as survey, interview, ethnography, case study, content analysis, and experimentation (Lauer and Asher, 1988).

Advantages and Disadvantages of Experimental Research: Discussion

In educational research, experimentation is a way to gain insight into methods of instruction. Although teaching is context specific, results can provide a starting point for further study. Often, a teacher/researcher will have a "gut" feeling about an issue which can be explored through experimentation and looking at causal relationships. Through research, intuition can shape practice.

A preconception exists that information obtained through scientific method is free of human inconsistencies. But, since scientific method is a matter of human construction, it is subject to human error. The researcher's personal bias may intrude upon the experiment as well. For example, certain preconceptions may dictate the course of the research and affect the behavior of the subjects. The issue may be compounded when, although many researchers are aware of the effect that their personal bias exerts on their own research, they are pressured to produce research that is accepted in their field of study as "legitimate" experimental research.

The researcher does bring bias to experimentation, but bias does not limit an ability to be reflective. An ethical researcher thinks critically about results and reports those results after careful reflection. Concerns over bias can be leveled against any research method.

Often, the sample may not be representative of a population, because the researcher does not have an opportunity to ensure a representative sample. For example, subjects could be limited to one location, limited in number, studied under constrained conditions and for too short a time.

Despite such inconsistencies in educational research, the researcher has control over the variables, increasing the possibility of more precisely determining individual effects of each variable. Determining interactions between variables also becomes more feasible.

Even so, experimentation can produce artificial results. It can be argued that variables are manipulated so the experiment measures what researchers want to examine; therefore, the results are merely contrived products and have no bearing in material reality. Artificial results are difficult to apply in practical situations, making generalizing from the results of a controlled study questionable. Experimental research essentially first decontextualizes a single question from a "real world" scenario, studies it under controlled conditions, and then tries to recontextualize the results back on the "real world" scenario. Results may be difficult to replicate.

In addition, groups in an experiment may not be comparable. Quasi-experimentation in educational research is widespread because not only are many researchers also teachers, but many subjects are also students. With the classroom as laboratory, it is difficult to implement randomizing or matching strategies. Often, students self-select into certain sections of a course on the basis of their own agendas and scheduling needs. Thus when, as often happens, one class is treated and the other used for a control, the groups may not actually be comparable. As one might imagine, people who register for a class which meets three times a week at eleven o'clock in the morning (young, no full-time job, night people) differ significantly from those who register for one on Monday evenings from seven to ten p.m. (older, full-time job, possibly more highly motivated). Each situation presents different variables and your group might be completely different from that in the study. Long-term studies are expensive and hard to reproduce. And although often the same hypotheses are tested by different researchers, various factors complicate attempts to compare or synthesize them. It is nearly impossible to be as rigorous as the natural sciences model dictates.

Even when randomization of students is possible, problems arise. First, depending on the class size and the number of classes, the sample may be too small for the extraneous variables to cancel out. Second, the study population is not strictly a sample, because the population of students registered for a given class at a particular university is obviously not representative of the population of all students at large. For example, students at a suburban private liberal-arts college are typically young, white, and upper-middle class. In contrast, students at an urban community college tend to be older, poorer, and members of a racial minority. The differences can be construed as confounding variables: the first group may have fewer demands on its time, have less self-discipline, and benefit from superior secondary education. The second may have more demands, including a job and/or children, have more self-discipline, but an inferior secondary education. Selecting a population of subjects which is representative of the average of all post-secondary students is also a flawed solution, because the outcome of a treatment involving this group is not necessarily transferable to either the students at a community college or the students at the private college, nor are they universally generalizable.

When a human population is involved, experimental research becomes concerned with whether behavior can be predicted or studied with validity. Human response can be difficult to measure. Human behavior is dependent on individual responses. Rationalizing behavior through experimentation does not account for the process of thought, making outcomes of that process fallible (Eisenberg, 1996).

Nevertheless, we perform experiments daily anyway. When we brush our teeth every morning, we are experimenting to see if this behavior will result in fewer cavities. We are relying on previous experimentation and we are transferring the experimentation to our daily lives.

Moreover, experimentation can be combined with other research methods to ensure rigor. Other qualitative methods such as case study, ethnography, observational research and interviews can function as preconditions for experimentation or be conducted simultaneously to add validity to a study.

We have few alternatives to experimentation. Mere anecdotal research, for example, is unscientific, unreplicable, and easily manipulated. Should we rely on Ed walking into a faculty meeting and telling the story of Sally? Sally screamed, "I love writing!" ten times before she wrote her essay and produced a quality paper. Therefore, all the other faculty members should hear this anecdote and know that all other students should employ this similar technique.

One final disadvantage: frequently, political pressure drives experimentation and forces unreliable results. Specific funding and support may drive the outcomes of experimentation and cause the results to be skewed. The reader of these results may not be aware of these biases and should approach experimentation with a critical eye.

Advantages and Disadvantages of Experimental Research: Quick Reference List

Experimental and quasi-experimental research can be summarized in terms of their advantages and disadvantages. This section combines and elaborates upon many points mentioned previously in this guide.

| Advantages | Disadvantages |

|---|---|

| gain insight into methods of instruction | subject to human error |

| intuitive practice shaped by research | personal bias of researcher may intrude |

| teachers have bias but can be reflective | sample may not be representative |

| researcher can have control over variables | can produce artificial results |

| humans perform experiments anyway | results may only apply to one situation and may be difficult to replicate |

| can be combined with other research methods for rigor | groups may not be comparable |

| use to determine what is best for population | human response can be difficult to measure |

| provides for greater transferability than anecdotal research | political pressure may skew results |

Ethical Concerns

Experimental research may be manipulated on both ends of the spectrum: by researcher and by reader. Researchers who report on experimental research, faced with naive readers of experimental research, encounter ethical concerns. While they are creating an experiment, certain objectives and intended uses of the results might drive and skew it. Looking for specific results, they may ask questions and look at data that support only desired conclusions. Conflicting research findings are ignored as a result. Similarly, researchers, seeking support for a particular plan, look only at findings which support that goal, dismissing conflicting research.

Editors and journals do not publish only trouble-free material. As readers of experiments, members of the press might report selected and isolated parts of a study to the public, essentially transferring that data to the general population in ways that may not have been intended by the researcher. Take, for example, oat bran. A few years ago, the press reported how oat bran reduces high blood pressure by reducing cholesterol. But that bit of information was taken out of context. The actual study found that when people ate more oat bran, they reduced their intake of saturated fats high in cholesterol. People started eating oat bran muffins by the ton, assuming a causal relationship when in actuality a number of confounding variables might influence the causal link.

Ultimately, ethical use and reportage of experimentation should be addressed by researchers, reporters and readers alike.

Reporters of experimental research often seek to recognize their audience's level of knowledge and try not to mislead readers. And readers must rely on the author's skill and integrity to point out errors and limitations. The relationship between researcher and reader may not sound like a problem, but after spending months or years on a project to produce no significant results, it may be tempting to manipulate the data to show significant results in order to jockey for grants and tenure.

Meanwhile, the reader may uncritically accept results that receive validity by being published in a journal. However, research that lacks credibility often is not published; consequently, researchers who fail to publish run the risk of being denied grants, promotions, jobs, and tenure. While few researchers are anything but earnest in their attempts to conduct well-designed experiments and present the results in good faith, rhetorical considerations often dictate a certain minimization of methodological flaws.

Concerns arise if researchers do not report all, or otherwise alter, results. This phenomenon is counterbalanced, however, in that professionals are also rewarded for publishing critiques of others' work. Because the author of an experimental study is in essence making an argument for the existence of a causal relationship, he or she must be concerned not only with its integrity, but also with its presentation. Achieving persuasiveness in any kind of writing involves several elements: choosing a topic of interest, providing convincing evidence for one's argument, using tone and voice to project credibility, and organizing the material in a way that meets expectations for a logical sequence. Of course, what is regarded as pertinent, accepted as evidence, required for credibility, and understood as logical varies according to context. If the experimental researcher hopes to make an impact on the community of professionals in his or her field, he or she must attend to the standards and orthodoxies of that audience.

Related Links

Contrasts: Traditional and computer-supported writing classrooms. This Web presents a discussion of the Transitions Study, a year-long exploration of teachers and students in computer-supported and traditional writing classrooms. Includes description of study, rationale for conducting the study, results and implications of the study.

http://kairos.technorhetoric.net/2.2/features/reflections/page1.htm

Annotated Bibliography

A cozy world of trivial pursuits? (1996, June 28) The Times Educational Supplement . 4174, pp. 14-15.

A critique discounting the current methods Great Britain employs to fund and disseminate educational research. The belief is that research is performed for fellow researchers not the teaching public and implications for day to day practice are never addressed.

Anderson, J. A. (1979, Nov. 10-13). Research as argument: the experimental form. Paper presented at the annual meeting of the Speech Communication Association, San Antonio, TX.

In this paper, the scientist who uses the experimental form does so in order to explain that which is verified through prediction.

Anderson, Linda M. (1979). Classroom-based experimental studies of teaching effectiveness in elementary schools . (Technical Report UTR&D-R- 4102). Austin: Research and Development Center for Teacher Education, University of Texas.

Three recent large-scale experimental studies have built on a database established through several correlational studies of teaching effectiveness in elementary school.

Asher, J. W. (1976). Educational research and evaluation methods . Boston: Little, Brown.

Abstract unavailable by press time.

Babbie, Earl R. (1979). The Practice of Social Research . Belmont, CA: Wadsworth.

A textbook containing discussions of several research methodologies used in social science research.

Bangert-Drowns, R.L. (1993). The word processor as instructional tool: a meta-analysis of word processing in writing instruction. Review of Educational Research, 63 (1), 69-93.

Beach, R. (1993). The effects of between-draft teacher evaluation versus student self-evaluation on high school students' revising of rough drafts. Research in the Teaching of English, 13 , 111-119.

The question of whether teacher evaluation or guided self-evaluation of rough drafts results in increased revision was addressed in Beach's study. Differences in the effects of teacher evaluations, guided self-evaluation (using prepared guidelines,) and no evaluation of rough drafts were examined. The final drafts of students (10th, 11th, and 12th graders) were compared with their rough drafts and rated by judges according to degree of change.

Beishuizen, J. & Moonen, J. (1992). Research in technology enriched schools: a case for cooperation between teachers and researchers . (ERIC Technical Report ED351006).

This paper describes the research strategies employed in the Dutch Technology Enriched Schools project to encourage extensive and intensive use of computers in a small number of secondary schools, and to study the effects of computer use on the classroom, the curriculum, and school administration and management.

Borg, W. P. (1989). Educational Research: an Introduction . (5th ed.). New York: Longman.

An overview of educational research methodology, including literature review and discussion of approaches to research, experimental design, statistical analysis, ethics, and rhetorical presentation of research findings.

Campbell, D. T., & Stanley, J. C. (1963). Experimental and quasi-experimental designs for research . Boston: Houghton Mifflin.

A classic overview of research designs.

Campbell, D.T. (1988). Methodology and epistemology for social science: selected papers . ed. E. S. Overman. Chicago: University of Chicago Press.

This is an overview of Campbell's 40-year career and his work. It covers in seven parts measurement, experimental design, applied social experimentation, interpretive social science, epistemology and sociology of science. Includes an extensive bibliography.

Caporaso, J. A., & Roos, Jr., L. L. (Eds.). Quasi-experimental approaches: Testing theory and evaluating policy. Evanston, IL: Northwestern University Press.

A collection of articles concerned with explicating the underlying assumptions of quasi-experimentation and relating these to true experimentation. With an emphasis on design. Includes a glossary of terms.

Collier, R. Writing and the word processor: How wary of the gift-giver should we be? Unpublished manuscript.

Unpublished typescript. Charts the developments to date in computers and composition and speculates about the future within the framework of Willie Sypher's model of the evolution of creative discovery.

Cook, T.D. & Campbell, D.T. (1979). Quasi-experimentation: design and analysis issues for field settings . Boston: Houghton Mifflin Co.

The authors write that this book "presents some quasi-experimental designs and design features that can be used in many social research settings. The designs serve to probe causal hypotheses about a wide variety of substantive issues in both basic and applied research."

Cutler, A. (1970). An experimental method for semantic field study. Linguistic Communication, 2 , N. pag.

This paper emphasizes the need for empirical research and objective discovery procedures in semantics, and illustrates a method by which these goals may be obtained.

Daniels, L. B. (1996, Summer). Eisenberg's Heisenberg: The indeterminancies of rationality. Curriculum Inquiry, 26 , 181-92.

Places Eisenberg's theories in relation to the death of foundationalism by showing that he distorts rational studies into a form of relativism. He looks at Eisenberg's ideas on indeterminacy, methods and evidence, what he is against and what we should think of what he says.

Danziger, K. (1990). Constructing the subject: Historical origins of psychological research. Cambridge: Cambridge University Press.

Danziger stresses the importance of being aware of the framework in which research operates and of the essentially social nature of scientific activity.

Diener, E., et al. (1972, December). Leakage of experimental information to potential future subjects by debriefed subjects. Journal of Experimental Research in Personality , 264-67.

Research regarding research: an investigation of the effects on the outcome of an experiment in which information about the experiment had been leaked to subjects. The study concludes that such leakage is not a significant problem.

Dudley-Marling, C., & Rhodes, L. K. (1989). Reflecting on a close encounter with experimental research. Canadian Journal of English Language Arts. 12 , 24-28.

Researchers, Dudley-Marling and Rhodes, address some problems they met in their experimental approach to a study of reading comprehension. This article discusses the limitations of experimental research, and presents an alternative to experimental or quantitative research.

Edgington, E. S. (1985). Random assignment and experimental research. Educational Administration Quarterly, 21 , N. pag.

Edgington explores ways in which random assignment can be a part of field studies. The author discusses both non-experimental and experimental research and the need for using random assignment.

Eisenberg, J. (1996, Summer). Response to critiques by R. Floden, J. Zeuli, and L. Daniels. Curriculum Inquiry, 26 , 199-201.

A response to critiques of his argument that rational educational research methods are at best suspect and at worst futile. He believes indeterminacy controls this method and worries that chaotic research is failing students.

Eisner, E. (1992, July). Are all causal claims positivistic? A reply to Francis Schrag. Educational Researcher, 21 (5), 8-9.

Eisner responds to Schrag who claimed that critics like Eisner cannot escape a positivistic paradigm whatever attempts they make to do so. Eisner argues that Schrag essentially misses the point for trying to argue for the paradigm solely on the basis of cause and effect without including the rest of positivistic philosophy. This weakens his argument against multiple modal methods, which Eisner argues provides opportunities to apply the appropriate research design where it is most applicable.

Floden, R.E. (1996, Summer). Educational research: limited, but worthwhile and maybe a bargain. (response to J.A. Eisenberg). Curriculum Inquiry, 26 , 193-7.

Responds to John Eisenberg's critique of educational research by asserting the connection between improvement of practice and research results. He places high value on teacher discrepancy and knowledge that research informs practice.

Fortune, J. C., & Hutson, B. A. (1994, March/April). Selecting models for measuring change when true experimental conditions do not exist. Journal of Educational Research, 197-206.

This article reviews methods for minimizing the effects of nonideal experimental conditions by optimally organizing models for the measurement of change.

Fox, R. F. (1980). Treatment of writing apprehension and its effects on composition. Research in the Teaching of English, 14, 39-49.

The main purpose of Fox's study was to investigate the effects of two methods of teaching writing on writing apprehension among entry-level composition students. A conventional teaching procedure was used with a control group, while a workshop method was employed with the treatment group.

Gadamer, H-G. (1976). Philosophical hermeneutics . (D. E. Linge, Trans.). Berkeley, CA: University of California Press.

A collection of essays with the common themes of the mediation of experience through language, the impossibility of objectivity, and the importance of context in interpretation.

Gaise, S. J. (1981). Experimental vs. non-experimental research on classroom second language learning. Bilingual Education Paper Series, 5 , N. pag.

Aims of classroom-centered research on second language learning and teaching are considered and contrasted with the experimental approach.

Giordano, G. (1983). Commentary: Is experimental research snowing us? Journal of Reading, 27 , 5-7.

Do educational research findings actually benefit teachers and students? Giordano states his opinion that research may be helpful to teaching, but is not essential and often is unnecessary.

Goldenson, D. R. (1978, March). An alternative view about the role of the secondary school in political socialization: A field-experimental study of theory and research in social education. Theory and Research in Social Education , 44-72.

This study concludes that when political discussion among experimental groups of secondary school students is led by a teacher, the degree to which the students' views were impacted is proportional to the credibility of the teacher.

Grossman, J., and J. P. Tierney. (1993, October). The fallibility of comparison groups. Evaluation Review , 556-71.

Grossman and Tierney present evidence to suggest that comparison groups are not the same as nontreatment groups.

Harnisch, D. L. (1992). Human judgment and the logic of evidence: A critical examination of research methods in special education transition literature. In D. L. Harnisch et al. (Eds.), Selected readings in transition.

This chapter describes several common types of research studies in special education transition literature and the threats to their validity.

Hawisher, G. E. (1989). Research and recommendations for computers and composition. In G. Hawisher and C. Selfe. (Eds.), Critical Perspectives on Computers and Composition Instruction . (pp. 44-69). New York: Teacher's College Press.

An overview of research in computers and composition to date. Includes a synthesis grid of experimental research.

Hillocks, G. Jr. (1982). The interaction of instruction, teacher comment, and revision in teaching the composing process. Research in the Teaching of English, 16 , 261-278.

Hillocks conducted a study using three treatments: observational or data collecting activities prior to writing, use of revisions or absence of same, and either brief or lengthy teacher comments to identify effective methods of teaching composition to seventh and eighth graders.

Jenkinson, J. C. (1989). Research design in the experimental study of intellectual disability. International Journal of Disability, Development, and Education, 69-84.

This article catalogues the difficulties of conducting experimental research where the subjects are intellectually disabled and suggests alternative research strategies.

Jones, R. A. (1985). Research Methods in the Social and Behavioral Sciences. Sunderland, MA: Sinauer Associates, Inc.

A textbook designed to provide an overview of research strategies in the social sciences, including survey, content analysis, ethnographic approaches, and experimentation. The author emphasizes the importance of applying strategies appropriately and in variety.

Kamil, M. L., Langer, J. A., & Shanahan, T. (1985). Understanding research in reading and writing . Newton, Massachusetts: Allyn and Bacon.

Examines a wide variety of problems in reading and writing, with a broad range of techniques, from different perspectives.

Kennedy, J. L. (1985). An Introduction to the Design and Analysis of Experiments in Behavioral Research . Lanham, MD: University Press of America.

An introductory textbook of psychological and educational research.

Keppel, G. (1991). Design and analysis: a researcher's handbook . Englewood Cliffs, NJ: Prentice Hall.

This updates Keppel's earlier book subtitled "a student's handbook." Focuses on extensive information about analytical research and gives a basic picture of research in psychology. Covers a range of statistical topics. Includes a subject and name index, as well as a glossary.

Knowles, G., Elija, R., & Broadwater, K. (1996, Spring/Summer). Teacher research: enhancing the preparation of teachers? Teaching Education, 8 , 123-31.

Researchers looked at one teacher candidate who participated in a class which designed their own research project correlating to a question they would like answered in the teaching world. The goal of the study was to see if preservice teachers developed reflective practice by researching appropriate classroom contexts.

Lace, J., & De Corte, E. (1986, April 16-20). Research on media in western Europe: A myth of sisyphus? Paper presented at the annual meeting of the American Educational Research Association. San Francisco.

Identifies main trends in media research in western Europe, with emphasis on three successive stages since 1960: tools technology, systems technology, and reflective technology.

Latta, A. (1996, Spring/Summer). Teacher as researcher: selected resources. Teaching Education, 8 , 155-60.

An annotated bibliography on educational research including milestones of thought, practical applications, successful outcomes, seminal works, and immediate practical applications.

Lauer, J. M., & Asher, J. W. (1988). Composition research: Empirical designs. New York: Oxford University Press.

Approaching experimentation from a humanist's perspective, the authors focus on eight major research designs: Case studies, ethnographies, sampling and surveys, quantitative descriptive studies, measurement, true experiments, quasi-experiments, meta-analyses, and program evaluations. The book takes on the challenge of bridging the language of social science with that of the humanist. Includes name and subject indexes, as well as a glossary and a glossary of symbols.

Mishler, E. G. (1979). Meaning in context: Is there any other kind? Harvard Educational Review, 49 , 1-19.

Contextual importance has been largely ignored by traditional research approaches in social/behavioral sciences and in their application to the education field. Developmental and social psychologists have increasingly noted the inadequacies of this approach. Drawing examples for phenomenology, sociolinguistics, and ethnomethodology, the author proposes alternative approaches for studying meaning in context.

Mitroff, I., & Bonoma, T. V. (1978, May). Psychological assumptions, experimentations, and real world problems: A critique and an alternate approach to evaluation. Evaluation Quarterly , 235-60.

The authors advance the notion of dialectic as a means to clarify and examine the underlying assumptions of experimental research methodology, both in highly controlled situations and in social evaluation.

Muller, E. W. (1985). Application of experimental and quasi-experimental research designs to educational software evaluation. Educational Technology, 25 , 27-31.

Muller proposes a set of guidelines for the use of experimental and quasi-experimental methods of research in evaluating educational software. By obtaining empirical evidence of student performance, it is possible to evaluate if programs are making the desired learning effect.

Murray, S., et al. (1979, April 8-12). Technical issues as threats to internal validity of experimental and quasi-experimental designs . San Francisco: University of California.

The article reviews three evaluation models and analyzes the flaws common to them. Remedies are suggested.

Muter, P., & Maurutto, P. (1991). Reading and skimming from computer screens and books: The paperless office revisited? Behavior and Information Technology, 10 (4), 257-66.

The researchers test for reading and skimming effectiveness, defined as accuracy combined with speed, for written text compared to text on a computer monitor. They conclude that, given optimal on-line conditions, both are equally effective.

O'Donnell, A., et al. (1992). The impact of cooperative writing. In J. R. Hayes, et al. (Eds.). Reading empirical research studies: The rhetoric of research. (pp. 371-84). Hillsdale, NJ: Lawrence Erlbaum Associates.

A model of experimental design. The authors investigate the efficacy of cooperative writing strategies, as well as the transferability of skills learned to other, individual writing situations.

Palmer, D. (1988). Looking at philosophy . Mountain View, CA: Mayfield Publishing.

An introductory text with incisive but understandable discussions of the major movements and thinkers in philosophy from the Pre-Socratics through Sartre. With illustrations by the author. Includes a glossary.

Phelps-Gunn, T., & Phelps-Terasaki, D. (1982). Written language instruction: Theory and remediation . London: Aspen Systems Corporation.

The lack of research in written expression is addressed and an application on the Total Writing Process Model is presented.

Poetter, T. (1996, Spring/Summer). From resistance to excitement: becoming qualitative researchers and reflective practitioners. Teaching Education, 8, 109-19.

An education professor reveals his own problematic research when he attempted to institute an educational research component in a teacher preparation program. He encountered dissent from students and cooperating professionals and ultimately was rewarded with excitement towards research and a recognized correlation to practice.

Purves, A. C. (1992). Reflections on research and assessment in written composition. Research in the Teaching of English, 26 .

Three issues concerning research and assessment in writing are discussed: 1) school writing is a matter of products not process, 2) school writing is an ill-defined domain, 3) the quality of school writing is what observers report they see. Purves discusses these issues while looking at data collected in a ten-year study of achievement in written composition in fourteen countries.

Rathus, S. A. (1987). Psychology . (3rd ed.). Poughkeepsie, NY: Holt, Rinehart, and Winston.

An introductory psychology textbook. Includes overviews of the major movements in psychology, discussions of prominent examples of experimental research, and a basic explanation of relevant physiological factors. With chapter summaries.

Reiser, R. A. (1982). Improving the research skills of instructional designers. Educational Technology, 22 , 19-21.

In his paper, Reiser starts by stating the importance of research in advancing the field of education, and points out that graduate students in instructional design lack the proper skills to conduct research. The paper then goes on to outline the practicum in the Instructional Systems Program at Florida State University which includes: 1) Planning and conducting an experimental research study; 2) writing the manuscript describing the study; 3) giving an oral presentation in which they describe their research findings.

Report on education research . (Journal). Washington, DC: Capitol Publication, Education News Services Division.

This is an independent bi-weekly newsletter on research in education and learning. It has been publishing since Sept. 1969.

Rossell, C. H. (1986). Why is bilingual education research so bad?: Critique of the Walsh and Carballo study of Massachusetts bilingual education programs . Boston: Center for Applied Social Science, Boston University. (ERIC Working Paper 86-5).

The Walsh and Carballo evaluation of the effectiveness of transitional bilingual education programs in five Massachusetts communities has five flaws and the five flaws are discussed in detail.

Rubin, D. L., & Greene, K. (1992). Gender-typical style in written language. Research in the Teaching of English, 26.

This study was designed to find out whether the writing styles of men and women differ. Rubin and Greene discuss the presuppositions that women are better writers than men.

Sawin, E. (1992). Reaction: Experimental research in the context of other methods. School of Education Review, 4 , 18-21.

Sawin responds to Gage's article on methodologies and issues in educational research. He agrees with most of the article but suggests the concept of scientific should not be regarded in absolute terms and recommends more emphasis on scientific method. He also questions the value of experiments over other types of research.

Schoonmaker, W. E. (1984). Improving classroom instruction: A model for experimental research. The Technology Teacher, 44, 24-25.

The model outlined in this article tries to bridge the gap between classroom practice and laboratory research, using what Schoonmaker calls active research. Research is conducted in the classroom with the students and is used to determine which of two methods of classroom instruction chosen by the teacher is more effective.

Schrag, F. (1992). In defense of positivist research paradigms. Educational Researcher, 21, (5), 5-8.

The controversial defense of the use of positivistic research methods to evaluate educational strategies; the author takes on Eisner, Erickson, and Popkewitz.

Smith, J. (1997). The stories educational researchers tell about themselves. Educational Researcher, 33 (3), 4-11.

Recapitulates main features of an on-going debate between advocates for using vocabularies of traditional language arts and whole language in educational research. An "impasse" exists where advocates "do not share a theoretical disposition concerning both language instruction and the nature of research," Smith writes (p. 6). He includes a very comprehensive history of the debate of traditional research methodology and qualitative methods and vocabularies. Definitely worth a read by graduates.

Smith, N. L. (1980). The feasibility and desirability of experimental methods in evaluation. Evaluation and Program Planning: An International Journal , 251-55.

Smith identifies the conditions under which experimental research is most desirable. Includes a review of current thinking and controversies.

Stewart, N. R., & Johnson, R. G. (1986, March 16-20). An evaluation of experimental methodology in counseling and counselor education research. Paper presented at the annual meeting of the American Educational Research Association, San Francisco.

The purpose of this study was to evaluate the quality of experimental research in counseling and counselor education published from 1976 through 1984.

Spector, P. E. (1990). Research Designs. Newbury Park, California: Sage Publications.

In this book, Spector introduces the basic principles of experimental and nonexperimental design in the social sciences.

Tait, P. E. (1984). Do-it-yourself evaluation of experimental research. Journal of Visual Impairment and Blindness, 78 , 356-363 .

Tait's goal is to provide the reader who is unfamiliar with experimental research or statistics with the basic skills necessary for the evaluation of research studies.

Walsh, S. M. (1990). The current conflict between case study and experimental research: A breakthrough study derives benefits from both . (ERIC Document Number ED339721).

This paper describes a study that was not experimentally designed, but its major findings were generalizable to the overall population of writers in college freshman composition classes. The study was not a case study, but it provided insights into the attitudes and feelings of small clusters of student writers.

Waters, G. R. (1976). Experimental designs in communication research. Journal of Business Communication, 14 .

The paper presents a series of discussions on the general elements of experimental design and the scientific process and relates these elements to the field of communication.

Welch, W. W. (March 1969). The selection of a national random sample of teachers for experimental curriculum evaluation. Scholastic Science and Math , 210-216.

Members of the evaluation section of Harvard project physics describe what is said to be the first attempt to select a national random sample of teachers, and list 6 steps to do so. Cost and comparison with a volunteer group are also discussed.

Winer, B.J. (1971). Statistical principles in experimental design , (2nd ed.). New York: McGraw-Hill.

Combines theory and application discussions to give readers a better understanding of the logic behind statistical aspects of experimental design. Introduces the broad topic of design, then goes into considerable detail. Not for light reading. Bring your aspirin if you like statistics. Bring morphine if you're a humanist.

Winn, B. (1986, January 16-21). Emerging trends in educational technology research. Paper presented at the Annual Convention of the Association for Educational Communication Technology.

This examination of the topic of research in educational technology addresses four major areas: (1) why research is conducted in this area and the characteristics of that research; (2) the types of research questions that should or should not be addressed; (3) the most appropriate methodologies for finding answers to research questions; and (4) the characteristics of a research report that make it good and ultimately suitable for publication.

Citation Information

Luann Barnes, Jennifer Hauser, Luana Heikes, Anthony J. Hernandez, Paul Tim Richard, Katherine Ross, Guo Hua Yang, and Mike Palmquist. (1994-2024). Experimental and Quasi-Experimental Research. The WAC Clearinghouse. Colorado State University. Available at https://wac.colostate.edu/repository/writing/guides/.

Copyright Information

Copyright © 1994-2024 Colorado State University and/or this site's authors, developers, and contributors. Some material displayed on this site is used with permission.

Correlational Research vs. Experimental Research

What's the Difference?

Correlational research and experimental research are two different approaches used in scientific studies. Correlational research aims to identify relationships or associations between variables without manipulating them. It examines the extent to which changes in one variable are related to changes in another variable. On the other hand, experimental research involves manipulating variables to determine cause-and-effect relationships. It includes the use of control groups, random assignment, and independent and dependent variables. While correlational research can provide valuable insights into relationships between variables, experimental research allows researchers to establish causal relationships and draw more definitive conclusions.

| Attribute | Correlational Research | Experimental Research |
|---|---|---|
| Definition | Examines the relationship between variables without manipulating them. | Manipulates variables to establish cause-and-effect relationships. |
| Research Design | Non-experimental | Experimental |
| Control over Variables | Little to no control over variables | High control over variables |
| Manipulation of Variables | Variables are not manipulated | Variables are manipulated |
| Causality | Cannot establish causality | Can establish causality |
| Random Assignment | Not used | Used to assign participants to groups |
| Independent Variable | Not manipulated | Manipulated by the researcher |
| Dependent Variable | Measured and analyzed for relationships | Measured and analyzed for cause-and-effect |
| Sample Size | Can be large | Usually smaller |

Further Detail

Introduction

Research is a fundamental aspect of advancing knowledge and understanding in various fields. Two common research methods used in scientific studies are correlational research and experimental research. While both methods aim to explore relationships between variables, they differ in their approach, design, and the level of control over variables. This article will compare and contrast the attributes of correlational research and experimental research, highlighting their strengths and limitations.

Correlational Research

Correlational research is a non-experimental method used to examine the relationship between two or more variables. It focuses on measuring the degree of association or correlation between variables without manipulating them. In this type of research, researchers collect data on the variables of interest and analyze the statistical relationship between them. The strength and direction of the relationship are typically expressed through correlation coefficients.
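As a rough illustration of how a correlation coefficient summarizes strength and direction, the sketch below computes Pearson's r by hand. The function name `pearson_r` and the study-hours and exam-score values are made up for this example and do not come from any real study.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around the means.
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Hypothetical measurements: nothing is manipulated, both variables are only observed.
hours_studied = [1, 2, 3, 4, 5, 6]
exam_score = [52, 55, 61, 64, 70, 74]

r = pearson_r(hours_studied, exam_score)
print(f"r = {r:.3f}")  # sign gives the direction, magnitude gives the strength
```

A value near +1 or -1 indicates a strong linear association, and a value near 0 indicates a weak one. The coefficient says nothing about which variable, if either, is the cause.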

One of the key advantages of correlational research is its ability to study naturally occurring phenomena in real-world settings. It allows researchers to observe and analyze relationships between variables that may be difficult or unethical to manipulate in an experimental setting. For example, studying the relationship between smoking and lung cancer would be more feasible using correlational research, as it would be unethical to assign individuals to smoke for an experimental study.

However, correlational research has limitations. It cannot establish causality, and it cannot determine which variable influences the other. While a correlation may exist between two variables, it does not necessarily mean that one variable causes the other. The association could instead be due to a third variable, known as a confounding variable, that influences both variables simultaneously. Additionally, correlational research is susceptible to issues such as selection bias, measurement error, and the inability to control extraneous variables.
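The confounding problem can be made concrete with a small simulation. In the sketch below, which uses entirely simulated data, a hidden variable `z` drives both `x` and `y`, while `x` and `y` have no direct effect on each other. The variable names, the seed, and the use of a first-order partial correlation to adjust for `z` are assumptions made for illustration.

```python
import math
import random

def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

rng = random.Random(42)  # fixed seed so the run is repeatable
n = 5000

# z is the confounder; x and y each depend on z plus independent noise.
z = [rng.gauss(0, 1) for _ in range(n)]
x = [zi + rng.gauss(0, 1) for zi in z]
y = [zi + rng.gauss(0, 1) for zi in z]

r_xy = pearson_r(x, y)
r_xz = pearson_r(x, z)
r_yz = pearson_r(y, z)

# First-order partial correlation of x and y, holding z constant.
partial_xy_given_z = (r_xy - r_xz * r_yz) / math.sqrt(
    (1 - r_xz ** 2) * (1 - r_yz ** 2)
)

print(f"raw correlation of x and y:      {r_xy:.2f}")
print(f"partial correlation given z:     {partial_xy_given_z:.2f}")
```

Under these assumptions the raw correlation is clearly positive, yet it shrinks toward zero once `z` is accounted for. In real correlational studies the confounder is often unmeasured, so this adjustment is not always available.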

Experimental Research

Experimental research, on the other hand, is a method that involves manipulating variables to determine cause-and-effect relationships. In experimental research, researchers carefully design and control the conditions under which the study takes place. They manipulate the independent variable(s) and measure the effects on the dependent variable(s). The goal is to establish a cause-and-effect relationship by systematically varying the independent variable(s) and controlling for potential confounding variables.

One of the main strengths of experimental research is its ability to establish causality. By manipulating variables and controlling extraneous factors, researchers can confidently attribute any observed changes in the dependent variable(s) to the manipulation of the independent variable(s). This allows for a more definitive understanding of the relationship between variables. Experimental research also provides a high level of control, which increases the internal validity of the study.
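To see why random assignment supports causal claims, consider the simulated comparison below. It is a minimal sketch under stated assumptions: the true treatment effect, the `baseline` trait, and the self-selection rule are all invented for the example.

```python
import random
from statistics import mean

rng = random.Random(7)  # fixed seed so the run is repeatable
TRUE_EFFECT = 2.0       # assumed causal effect of the treatment
n = 4000

# A pre-existing trait that strongly influences the outcome.
baseline = [rng.gauss(0, 1) for _ in range(n)]

def outcome(b, treated):
    """Simulated outcome: baseline trait plus treatment effect plus noise."""
    return 3.0 * b + TRUE_EFFECT * treated + rng.gauss(0, 1)

def difference_in_means(assignment):
    """Mean outcome of the treated group minus mean outcome of the control group."""
    treated = [outcome(b, 1) for b, t in zip(baseline, assignment) if t]
    control = [outcome(b, 0) for b, t in zip(baseline, assignment) if not t]
    return mean(treated) - mean(control)

# Experimental approach: shuffle so that chance alone decides who is treated.
random_assignment = [1] * (n // 2) + [0] * (n // 2)
rng.shuffle(random_assignment)
randomized_estimate = difference_in_means(random_assignment)

# Non-experimental approach: people with a high baseline opt into treatment.
self_selected = [1 if b > 0 else 0 for b in baseline]
self_selected_estimate = difference_in_means(self_selected)

print(f"true effect:             {TRUE_EFFECT:.2f}")
print(f"randomized estimate:     {randomized_estimate:.2f}")
print(f"self-selected estimate:  {self_selected_estimate:.2f}")
```

With random assignment the two groups have similar baselines on average, so the difference in means lands close to the assumed effect. With self-selection the groups differ before treatment begins, and the same calculation overstates the effect.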

However, experimental research also has limitations. It may not always be feasible or ethical to manipulate certain variables. For example, it would be unethical to assign individuals to smoke for an experimental study on the effects of smoking. Additionally, experimental research often takes place in controlled laboratory settings, which may limit the generalizability of the findings to real-world situations. The high level of control in experimental research can also lead to artificiality, as it may not fully capture the complexity and variability of natural settings.

Comparison of Attributes

While correlational research and experimental research differ in their approach and design, they share some common attributes. Both methods involve collecting and analyzing data to explore relationships between variables. They also rely on statistical techniques to examine the strength and direction of these relationships. Furthermore, both methods contribute to the advancement of knowledge in their respective fields.

However, the key differences lie in the level of control over variables and the ability to establish causality. Correlational research lacks the ability to manipulate variables and establish cause-and-effect relationships. It focuses on observing and analyzing existing relationships between variables. On the other hand, experimental research allows for the manipulation of variables, providing a higher level of control and the ability to establish causality.

Correlational research is often used in exploratory studies or when it is not feasible or ethical to manipulate variables. It can provide valuable insights into the relationships between variables and generate hypotheses for further investigation. Experimental research, on the other hand, is more suitable for testing specific hypotheses and establishing causal relationships. It allows researchers to control extraneous variables and systematically manipulate independent variables to observe their effects on dependent variables.

Correlational research and experimental research are two distinct methods used in scientific studies. While correlational research focuses on observing and analyzing relationships between variables without manipulation, experimental research involves manipulating variables to establish cause-and-effect relationships. Both methods have their strengths and limitations, and their choice depends on the research question, feasibility, and ethical considerations. By understanding the attributes of correlational research and experimental research, researchers can make informed decisions about the most appropriate method to use in their studies, ultimately contributing to the advancement of knowledge in their respective fields.

Statistics By Jim

Making statistics intuitive

Quasi Experimental Design Overview & Examples

By Jim Frost

What is a Quasi Experimental Design?

A quasi experimental design is a method for identifying causal relationships that does not randomly assign participants to the experimental groups. Instead, researchers use a non-random process. For example, they might use an eligibility cutoff score or preexisting groups to determine who receives the treatment.

Quasi-experimental research is a design that closely resembles experimental research but is different. The term “quasi” means “resembling,” so you can think of it as a cousin to actual experiments. In these studies, researchers can manipulate an independent variable — that is, they change one factor to see what effect it has. However, unlike true experimental research, participants are not randomly assigned to different groups.

Learn more about Experimental Designs: Definition & Types.

When to Use Quasi-Experimental Design

Researchers typically use a quasi-experimental design because they can’t randomize due to practical or ethical concerns. For example:

- Practical Constraints : A school interested in testing a new teaching method can only implement it in preexisting classes and cannot randomly assign students.

- Ethical Concerns : A medical study might not be able to randomly assign participants to a treatment group for an experimental medication when they are already taking a proven drug.

Quasi-experimental designs also come in handy when researchers want to study the effects of naturally occurring events, like policy changes or environmental shifts, where they can’t control who is exposed to the treatment.

Quasi-experimental designs occupy a unique position in the spectrum of research methodologies, sitting between observational studies and true experiments. This middle ground offers a blend of both worlds, addressing some limitations of purely observational studies while navigating the constraints often accompanying true experiments.

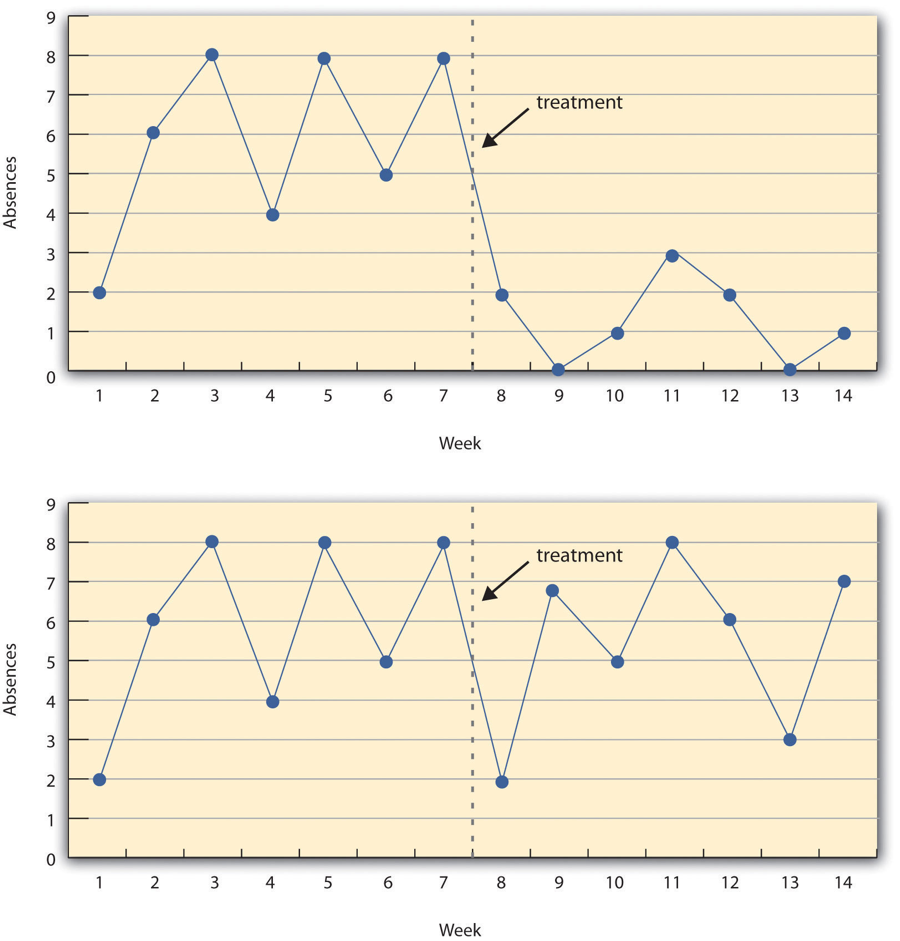

A significant advantage of quasi-experimental research over purely observational studies and correlational research is that it addresses the issue of directionality, determining which variable is the cause and which is the effect. In quasi-experiments, an intervention typically occurs during the investigation, and the researchers record outcomes before and after it, increasing the confidence that it causes the observed changes.

However, it’s crucial to recognize its limitations as well. Controlling confounding variables is a larger concern for a quasi-experimental design than a true experiment because it lacks random assignment.

In sum, quasi-experimental designs offer a valuable research approach when random assignment is not feasible, providing a more structured and controlled framework than observational studies while acknowledging and attempting to address potential confounders.

Types of Quasi-Experimental Designs and Examples

Quasi-experimental studies use various methods, depending on the scenario.

Natural Experiments

This design uses naturally occurring events or changes to create the treatment and control groups. Researchers compare outcomes between those whom the event affected and those it did not affect. Analysts then use statistical controls to account for confounders, which means the researchers must also measure those confounders.

Natural experiments are related to observational studies, but they allow for a clearer causality inference because the external event or policy change provides both a form of quasi-random group assignment and a definite start date for the intervention.

For example, in a natural experiment utilizing a quasi-experimental design, researchers study the impact of a significant economic policy change on small business growth. The policy is implemented in one state but not in neighboring states. This scenario creates an unplanned experimental setup, where the state with the new policy serves as the treatment group, and the neighboring states act as the control group.

Researchers are primarily interested in small business growth rates but need to record various confounders that can impact growth rates. Hence, they record state economic indicators, investment levels, and employment figures. By recording these metrics across the states, they can include them in the model as covariates and control them statistically. This method allows researchers to estimate differences in small business growth due to the policy itself, separate from the various confounders.
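The covariate adjustment described above can be sketched with a small regression on simulated data. Everything here is hypothetical: the "counties" used as units, the single `investment` covariate, the assumed policy effect of 0.5, and the hand-written `ols` helper, which solves the normal equations so the example needs no third-party library.

```python
import random
from statistics import mean

def ols(rows, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [
        [sum(r[i] * r[j] for r in rows) for j in range(k)]
        + [sum(r[i] * t for r, t in zip(rows, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda i: abs(a[i][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for i in range(k):
            if i != col:
                factor = a[i][col] / a[col][col]
                a[i] = [v - factor * w for v, w in zip(a[i], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]

rng = random.Random(3)  # fixed seed so the run is repeatable
POLICY_EFFECT = 0.5     # assumed true effect on growth
n = 2000

# First half: counties in the policy state; second half: neighboring states.
policy = [1] * (n // 2) + [0] * (n // 2)
# Confounder: the policy state also happens to attract more investment.
investment = [rng.gauss(1.0 if p else 0.0, 1) for p in policy]
growth = [
    1.0 + POLICY_EFFECT * p + 0.8 * inv + rng.gauss(0, 0.5)
    for p, inv in zip(policy, investment)
]

# Unadjusted comparison: simple difference in mean growth.
naive = mean(g for g, p in zip(growth, policy) if p) - mean(
    g for g, p in zip(growth, policy) if not p
)

# Adjusted comparison: regress growth on intercept, policy, and investment.
design = [[1.0, p, inv] for p, inv in zip(policy, investment)]
intercept, adjusted, investment_coef = ols(design, growth)

print(f"naive difference:        {naive:.2f}")
print(f"covariate-adjusted:      {adjusted:.2f}")
```

In this simulation the naive difference mixes the policy effect with the investment gap, while the adjusted coefficient sits close to the assumed 0.5. The adjustment only works for confounders that were actually measured and included in the model.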

Nonequivalent Groups Design

This method involves matching existing groups that are similar but not identical. Researchers attempt to find groups that are as equivalent as possible, particularly for factors likely to affect the outcome.

For instance, researchers use a nonequivalent groups quasi-experimental design to evaluate the effectiveness of a new teaching method in improving students’ mathematics performance. A school district considering the teaching method is planning the study. Students are already divided into schools, preventing random assignment.

The researchers matched two schools with similar demographics, baseline academic performance, and resources. The school using the traditional methodology is the control, while the other uses the new approach. Researchers are evaluating differences in educational outcomes between the two methods.

They perform a pretest to identify differences between the schools that might affect the outcome and include them as covariates to control for confounding. They also record outcomes before and after the intervention to have a larger context for the changes they observe.
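One simple way to use the before-and-after measurements is to compare average gains between the two schools, a calculation often called difference-in-differences. The source does not say the researchers use this particular estimator, so treat it as one possible analysis. The scores below are invented for illustration only.

```python
from statistics import mean

# Hypothetical math scores (same students measured before and after).
new_method_pre = [62, 70, 58, 75, 66]
new_method_post = [71, 78, 69, 83, 74]
traditional_pre = [60, 68, 61, 73, 65]
traditional_post = [63, 72, 64, 75, 69]

# Average gain within each school.
gain_new = mean(new_method_post) - mean(new_method_pre)
gain_traditional = mean(traditional_post) - mean(traditional_pre)

# Pretest gap: how different the schools were before the intervention.
pretest_gap = mean(new_method_pre) - mean(traditional_pre)

# Difference-in-differences: extra gain in the school using the new method.
did_estimate = gain_new - gain_traditional

print(f"pretest gap between schools: {pretest_gap:.1f}")
print(f"gain with new method:        {gain_new:.1f}")
print(f"gain with traditional:       {gain_traditional:.1f}")
print(f"difference in gains:         {did_estimate:.1f}")
```

Subtracting the control school's gain removes changes that both schools would have experienced anyway, such as ordinary learning over the term. It does not remove confounders that affect only one school during the study period, which is why matching the schools carefully still matters.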

Regression Discontinuity