- Bipolar Disorder

- Therapy Center

- When To See a Therapist

- Types of Therapy

- Best Online Therapy

- Best Couples Therapy

- Managing Stress

- Sleep and Dreaming

- Understanding Emotions

- Self-Improvement

- Healthy Relationships

- Student Resources

- Personality Types

- Guided Meditations

- Verywell Mind Insights

- 2024 Verywell Mind 25

- Mental Health in the Classroom

- Editorial Process

- Meet Our Review Board

- Crisis Support

Correlation Studies in Psychology Research

Determining the relationship between two or more variables.

Verywell / Brianna Gilmartin

- Characteristics

Potential Pitfalls

Frequently asked questions.

A correlational study is a type of research design that looks at the relationships between two or more variables. Correlational studies are non-experimental, which means that the experimenter does not manipulate or control any of the variables.

A correlation refers to a relationship between two variables. Correlations can be strong or weak and positive or negative. Sometimes, there is no correlation.

There are three possible outcomes of a correlation study: a positive correlation, a negative correlation, or no correlation. Researchers can present the results using a numerical value called the correlation coefficient, a measure of the correlation strength. It can range from –1.00 (negative) to +1.00 (positive). A correlation coefficient of 0 indicates no correlation.

- Positive correlations : Both variables increase or decrease at the same time. A correlation coefficient close to +1.00 indicates a strong positive correlation.

- Negative correlations : As the amount of one variable increases, the other decreases (and vice versa). A correlation coefficient close to -1.00 indicates a strong negative correlation.

- No correlation : There is no relationship between the two variables. A correlation coefficient of 0 indicates no correlation.

Characteristics of a Correlational Study

Correlational studies are often used in psychology, as well as other fields like medicine. Correlational research is a preliminary way to gather information about a topic. The method is also useful if researchers are unable to perform an experiment.

Researchers use correlations to see if a relationship between two or more variables exists, but the variables themselves are not under the control of the researchers.

While correlational research can demonstrate a relationship between variables, it cannot prove that changing one variable will change another. In other words, correlational studies cannot prove cause-and-effect relationships.

When you encounter research that refers to a "link" or an "association" between two things, they are most likely talking about a correlational study.

Types of Correlational Research

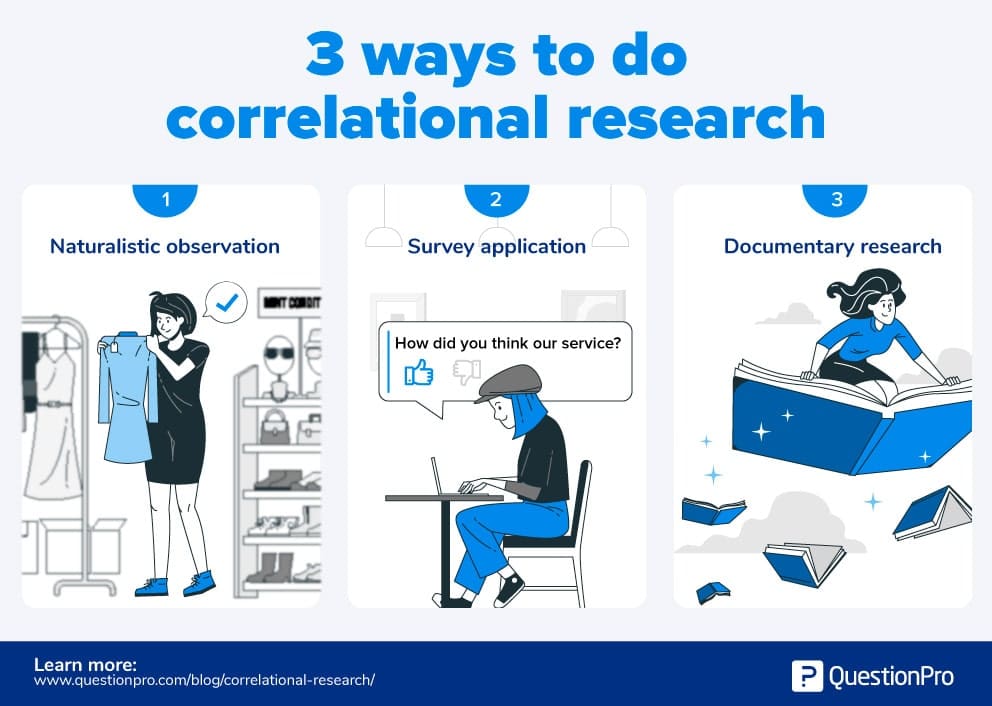

There are three types of correlational research: naturalistic observation, the survey method, and archival research. Each type has its own purpose, as well as its pros and cons.

Naturalistic Observation

The naturalistic observation method involves observing and recording variables of interest in a natural setting without interference or manipulation.

Can inspire ideas for further research

Option if lab experiment not available

Variables are viewed in natural setting

Can be time-consuming and expensive

Extraneous variables can't be controlled

No scientific control of variables

Subjects might behave differently if aware of being observed

This method is well-suited to studies where researchers want to see how variables behave in their natural setting or state. Inspiration can then be drawn from the observations to inform future avenues of research.

In some cases, it might be the only method available to researchers; for example, if lab experimentation would be precluded by access, resources, or ethics. It might be preferable to not being able to conduct research at all, but the method can be costly and usually takes a lot of time.

Naturalistic observation presents several challenges for researchers. For one, it does not allow them to control or influence the variables in any way nor can they change any possible external variables.

However, this does not mean that researchers will get reliable data from watching the variables, or that the information they gather will be free from bias.

For example, study subjects might act differently if they know that they are being watched. The researchers might not be aware that the behavior that they are observing is not necessarily the subject's natural state (i.e., how they would act if they did not know they were being watched).

Researchers also need to be aware of their biases, which can affect the observation and interpretation of a subject's behavior.

Surveys and questionnaires are some of the most common methods used for psychological research. The survey method involves having a random sample of participants complete a survey, test, or questionnaire related to the variables of interest. Random sampling is vital to the generalizability of a survey's results.

Cheap, easy, and fast

Can collect large amounts of data in a short amount of time

Results can be affected by poor survey questions

Results can be affected by unrepresentative sample

Outcomes can be affected by participants

If researchers need to gather a large amount of data in a short period of time, a survey is likely to be the fastest, easiest, and cheapest option.

It's also a flexible method because it lets researchers create data-gathering tools that will help ensure they get the information they need (survey responses) from all the sources they want to use (a random sample of participants taking the survey).

Survey data might be cost-efficient and easy to get, but it has its downsides. For one, the data is not always reliable—particularly if the survey questions are poorly written or the overall design or delivery is weak. Data is also affected by specific faults, such as unrepresented or underrepresented samples .

The use of surveys relies on participants to provide useful data. Researchers need to be aware of the specific factors related to the people taking the survey that will affect its outcome.

For example, some people might struggle to understand the questions. A person might answer a particular way to try to please the researchers or to try to control how the researchers perceive them (such as trying to make themselves "look better").

Sometimes, respondents might not even realize that their answers are incorrect or misleading because of mistaken memories .

Archival Research

Many areas of psychological research benefit from analyzing studies that were conducted long ago by other researchers, as well as reviewing historical records and case studies.

For example, in an experiment known as "The Irritable Heart ," researchers used digitalized records containing information on American Civil War veterans to learn more about post-traumatic stress disorder (PTSD).

Large amount of data

Can be less expensive

Researchers cannot change participant behavior

Can be unreliable

Information might be missing

No control over data collection methods

Using records, databases, and libraries that are publicly accessible or accessible through their institution can help researchers who might not have a lot of money to support their research efforts.

Free and low-cost resources are available to researchers at all levels through academic institutions, museums, and data repositories around the world.

Another potential benefit is that these sources often provide an enormous amount of data that was collected over a very long period of time, which can give researchers a way to view trends, relationships, and outcomes related to their research.

While the inability to change variables can be a disadvantage of some methods, it can be a benefit of archival research. That said, using historical records or information that was collected a long time ago also presents challenges. For one, important information might be missing or incomplete and some aspects of older studies might not be useful to researchers in a modern context.

A primary issue with archival research is reliability. When reviewing old research, little information might be available about who conducted the research, how a study was designed, who participated in the research, as well as how data was collected and interpreted.

Researchers can also be presented with ethical quandaries—for example, should modern researchers use data from studies that were conducted unethically or with questionable ethics?

You've probably heard the phrase, "correlation does not equal causation." This means that while correlational research can suggest that there is a relationship between two variables, it cannot prove that one variable will change another.

For example, researchers might perform a correlational study that suggests there is a relationship between academic success and a person's self-esteem. However, the study cannot show that academic success changes a person's self-esteem.

To determine why the relationship exists, researchers would need to consider and experiment with other variables, such as the subject's social relationships, cognitive abilities, personality, and socioeconomic status.

The difference between a correlational study and an experimental study involves the manipulation of variables. Researchers do not manipulate variables in a correlational study, but they do control and systematically vary the independent variables in an experimental study. Correlational studies allow researchers to detect the presence and strength of a relationship between variables, while experimental studies allow researchers to look for cause and effect relationships.

If the study involves the systematic manipulation of the levels of a variable, it is an experimental study. If researchers are measuring what is already present without actually changing the variables, then is a correlational study.

The variables in a correlational study are what the researcher measures. Once measured, researchers can then use statistical analysis to determine the existence, strength, and direction of the relationship. However, while correlational studies can say that variable X and variable Y have a relationship, it does not mean that X causes Y.

The goal of correlational research is often to look for relationships, describe these relationships, and then make predictions. Such research can also often serve as a jumping off point for future experimental research.

Heath W. Psychology Research Methods . Cambridge University Press; 2018:134-156.

Schneider FW. Applied Social Psychology . 2nd ed. SAGE; 2012:50-53.

Curtis EA, Comiskey C, Dempsey O. Importance and use of correlational research . Nurse Researcher . 2016;23(6):20-25. doi:10.7748/nr.2016.e1382

Carpenter S. Visualizing Psychology . 3rd ed. John Wiley & Sons; 2012:14-30.

Pizarro J, Silver RC, Prause J. Physical and mental health costs of traumatic war experiences among civil war veterans . Arch Gen Psychiatry . 2006;63(2):193. doi:10.1001/archpsyc.63.2.193

Post SG. The echo of Nuremberg: Nazi data and ethics . J Med Ethics . 1991;17(1):42-44. doi:10.1136/jme.17.1.42

Lau F. Chapter 12 Methods for Correlational Studies . In: Lau F, Kuziemsky C, eds. Handbook of eHealth Evaluation: An Evidence-based Approach . University of Victoria.

Akoglu H. User's guide to correlation coefficients . Turk J Emerg Med . 2018;18(3):91-93. doi:10.1016/j.tjem.2018.08.001

Price PC. Research Methods in Psychology . California State University.

By Kendra Cherry, MSEd Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."

- Privacy Policy

Home » Correlational Research – Methods, Types and Examples

Correlational Research – Methods, Types and Examples

Table of Contents

Correlational Research

Correlational Research is a type of research that examines the statistical relationship between two or more variables without manipulating them. It is a non-experimental research design that seeks to establish the degree of association or correlation between two or more variables.

Types of Correlational Research

There are three types of correlational research:

Positive Correlation

A positive correlation occurs when two variables increase or decrease together. This means that as one variable increases, the other variable also tends to increase. Similarly, as one variable decreases, the other variable also tends to decrease. For example, there is a positive correlation between the amount of time spent studying and academic performance. The more time a student spends studying, the higher their academic performance is likely to be. Similarly, there is a positive correlation between a person’s age and their income level. As a person gets older, they tend to earn more money.

Negative Correlation

A negative correlation occurs when one variable increases while the other decreases. This means that as one variable increases, the other variable tends to decrease. Similarly, as one variable decreases, the other variable tends to increase. For example, there is a negative correlation between the number of hours spent watching TV and physical activity level. The more time a person spends watching TV, the less physically active they are likely to be. Similarly, there is a negative correlation between the amount of stress a person experiences and their overall happiness. As stress levels increase, happiness levels tend to decrease.

Zero Correlation

A zero correlation occurs when there is no relationship between two variables. This means that the variables are unrelated and do not affect each other. For example, there is zero correlation between a person’s shoe size and their IQ score. The size of a person’s feet has no relationship to their level of intelligence. Similarly, there is zero correlation between a person’s height and their favorite color. The two variables are unrelated to each other.

Correlational Research Methods

Correlational research can be conducted using different methods, including:

Surveys are a common method used in correlational research. Researchers collect data by asking participants to complete questionnaires or surveys that measure different variables of interest. Surveys are useful for exploring the relationships between variables such as personality traits, attitudes, and behaviors.

Observational Studies

Observational studies involve observing and recording the behavior of participants in natural settings. Researchers can use observational studies to examine the relationships between variables such as social interactions, group dynamics, and communication patterns.

Archival Data

Archival data involves using existing data sources such as historical records, census data, or medical records to explore the relationships between variables. Archival data is useful for investigating the relationships between variables that cannot be manipulated or controlled.

Experimental Design

While correlational research does not involve manipulating variables, researchers can use experimental design to establish cause-and-effect relationships between variables. Experimental design involves manipulating one variable while holding other variables constant to determine the effect on the dependent variable.

Meta-Analysis

Meta-analysis involves combining and analyzing the results of multiple studies to explore the relationships between variables across different contexts and populations. Meta-analysis is useful for identifying patterns and inconsistencies in the literature and can provide insights into the strength and direction of relationships between variables.

Data Analysis Methods

Correlational research data analysis methods depend on the type of data collected and the research questions being investigated. Here are some common data analysis methods used in correlational research:

Correlation Coefficient

A correlation coefficient is a statistical measure that quantifies the strength and direction of the relationship between two variables. The correlation coefficient ranges from -1 to +1, with -1 indicating a perfect negative correlation, +1 indicating a perfect positive correlation, and 0 indicating no correlation. Researchers use correlation coefficients to determine the degree to which two variables are related.

Scatterplots

A scatterplot is a graphical representation of the relationship between two variables. Each data point on the plot represents a single observation. The x-axis represents one variable, and the y-axis represents the other variable. The pattern of data points on the plot can provide insights into the strength and direction of the relationship between the two variables.

Regression Analysis

Regression analysis is a statistical method used to model the relationship between two or more variables. Researchers use regression analysis to predict the value of one variable based on the value of another variable. Regression analysis can help identify the strength and direction of the relationship between variables, as well as the degree to which one variable can be used to predict the other.

Factor Analysis

Factor analysis is a statistical method used to identify patterns among variables. Researchers use factor analysis to group variables into factors that are related to each other. Factor analysis can help identify underlying factors that influence the relationship between two variables.

Path Analysis

Path analysis is a statistical method used to model the relationship between multiple variables. Researchers use path analysis to test causal models and identify direct and indirect effects between variables.

Applications of Correlational Research

Correlational research has many practical applications in various fields, including:

- Psychology : Correlational research is commonly used in psychology to explore the relationships between variables such as personality traits, behaviors, and mental health outcomes. For example, researchers may use correlational research to examine the relationship between anxiety and depression, or the relationship between self-esteem and academic achievement.

- Education : Correlational research is useful in educational research to explore the relationships between variables such as teaching methods, student motivation, and academic performance. For example, researchers may use correlational research to examine the relationship between student engagement and academic success, or the relationship between teacher feedback and student learning outcomes.

- Business : Correlational research can be used in business to explore the relationships between variables such as consumer behavior, marketing strategies, and sales outcomes. For example, marketers may use correlational research to examine the relationship between advertising spending and sales revenue, or the relationship between customer satisfaction and brand loyalty.

- Medicine : Correlational research is useful in medical research to explore the relationships between variables such as risk factors, disease outcomes, and treatment effectiveness. For example, researchers may use correlational research to examine the relationship between smoking and lung cancer, or the relationship between exercise and heart health.

- Social Science : Correlational research is commonly used in social science research to explore the relationships between variables such as socioeconomic status, cultural factors, and social behavior. For example, researchers may use correlational research to examine the relationship between income and voting behavior, or the relationship between cultural values and attitudes towards immigration.

Examples of Correlational Research

- Psychology : Researchers might be interested in exploring the relationship between two variables, such as parental attachment and anxiety levels in young adults. The study could involve measuring levels of attachment and anxiety using established scales or questionnaires, and then analyzing the data to determine if there is a correlation between the two variables. This information could be useful in identifying potential risk factors for anxiety in young adults, and in developing interventions that could help improve attachment and reduce anxiety.

- Education : In a correlational study in education, researchers might investigate the relationship between two variables, such as teacher engagement and student motivation in a classroom setting. The study could involve measuring levels of teacher engagement and student motivation using established scales or questionnaires, and then analyzing the data to determine if there is a correlation between the two variables. This information could be useful in identifying strategies that teachers could use to improve student motivation and engagement in the classroom.

- Business : Researchers might explore the relationship between two variables, such as employee satisfaction and productivity levels in a company. The study could involve measuring levels of employee satisfaction and productivity using established scales or questionnaires, and then analyzing the data to determine if there is a correlation between the two variables. This information could be useful in identifying factors that could help increase productivity and improve job satisfaction among employees.

- Medicine : Researchers might examine the relationship between two variables, such as smoking and the risk of developing lung cancer. The study could involve collecting data on smoking habits and lung cancer diagnoses, and then analyzing the data to determine if there is a correlation between the two variables. This information could be useful in identifying risk factors for lung cancer and in developing interventions that could help reduce smoking rates.

- Sociology : Researchers might investigate the relationship between two variables, such as income levels and political attitudes. The study could involve measuring income levels and political attitudes using established scales or questionnaires, and then analyzing the data to determine if there is a correlation between the two variables. This information could be useful in understanding how socioeconomic factors can influence political beliefs and attitudes.

How to Conduct Correlational Research

Here are the general steps to conduct correlational research:

- Identify the Research Question : Start by identifying the research question that you want to explore. It should involve two or more variables that you want to investigate for a correlation.

- Choose the research method: Decide on the research method that will be most appropriate for your research question. The most common methods for correlational research are surveys, archival research, and naturalistic observation.

- Choose the Sample: Select the participants or data sources that you will use in your study. Your sample should be representative of the population you want to generalize the results to.

- Measure the variables: Choose the measures that will be used to assess the variables of interest. Ensure that the measures are reliable and valid.

- Collect the Data: Collect the data from your sample using the chosen research method. Be sure to maintain ethical standards and obtain informed consent from your participants.

- Analyze the data: Use statistical software to analyze the data and compute the correlation coefficient. This will help you determine the strength and direction of the correlation between the variables.

- Interpret the results: Interpret the results and draw conclusions based on the findings. Consider any limitations or alternative explanations for the results.

- Report the findings: Report the findings of your study in a research report or manuscript. Be sure to include the research question, methods, results, and conclusions.

Purpose of Correlational Research

The purpose of correlational research is to examine the relationship between two or more variables. Correlational research allows researchers to identify whether there is a relationship between variables, and if so, the strength and direction of that relationship. This information can be useful for predicting and explaining behavior, and for identifying potential risk factors or areas for intervention.

Correlational research can be used in a variety of fields, including psychology, education, medicine, business, and sociology. For example, in psychology, correlational research can be used to explore the relationship between personality traits and behavior, or between early life experiences and later mental health outcomes. In education, correlational research can be used to examine the relationship between teaching practices and student achievement. In medicine, correlational research can be used to investigate the relationship between lifestyle factors and disease outcomes.

Overall, the purpose of correlational research is to provide insight into the relationship between variables, which can be used to inform further research, interventions, or policy decisions.

When to use Correlational Research

Here are some situations when correlational research can be particularly useful:

- When experimental research is not possible or ethical: In some situations, it may not be possible or ethical to manipulate variables in an experimental design. In these cases, correlational research can be used to explore the relationship between variables without manipulating them.

- When exploring new areas of research: Correlational research can be useful when exploring new areas of research or when researchers are unsure of the direction of the relationship between variables. Correlational research can help identify potential areas for further investigation.

- When testing theories: Correlational research can be useful for testing theories about the relationship between variables. Researchers can use correlational research to examine the relationship between variables predicted by a theory, and to determine whether the theory is supported by the data.

- When making predictions: Correlational research can be used to make predictions about future behavior or outcomes. For example, if there is a strong positive correlation between education level and income, one could predict that individuals with higher levels of education will have higher incomes.

- When identifying risk factors: Correlational research can be useful for identifying potential risk factors for negative outcomes. For example, a study might find a positive correlation between drug use and depression, indicating that drug use could be a risk factor for depression.

Characteristics of Correlational Research

Here are some common characteristics of correlational research:

- Examines the relationship between two or more variables: Correlational research is designed to examine the relationship between two or more variables. It seeks to determine if there is a relationship between the variables, and if so, the strength and direction of that relationship.

- Non-experimental design: Correlational research is typically non-experimental in design, meaning that the researcher does not manipulate any variables. Instead, the researcher observes and measures the variables as they naturally occur.

- Cannot establish causation : Correlational research cannot establish causation, meaning that it cannot determine whether one variable causes changes in another variable. Instead, it only provides information about the relationship between the variables.

- Uses statistical analysis: Correlational research relies on statistical analysis to determine the strength and direction of the relationship between variables. This may include calculating correlation coefficients, regression analysis, or other statistical tests.

- Observes real-world phenomena : Correlational research is often used to observe real-world phenomena, such as the relationship between education and income or the relationship between stress and physical health.

- Can be conducted in a variety of fields : Correlational research can be conducted in a variety of fields, including psychology, sociology, education, and medicine.

- Can be conducted using different methods: Correlational research can be conducted using a variety of methods, including surveys, observational studies, and archival studies.

Advantages of Correlational Research

There are several advantages of using correlational research in a study:

- Allows for the exploration of relationships: Correlational research allows researchers to explore the relationships between variables in a natural setting without manipulating any variables. This can help identify possible relationships between variables that may not have been previously considered.

- Useful for predicting behavior: Correlational research can be useful for predicting future behavior. If a strong correlation is found between two variables, researchers can use this information to predict how changes in one variable may affect the other.

- Can be conducted in real-world settings: Correlational research can be conducted in real-world settings, which allows for the collection of data that is representative of real-world phenomena.

- Can be less expensive and time-consuming than experimental research: Correlational research is often less expensive and time-consuming than experimental research, as it does not involve manipulating variables or creating controlled conditions.

- Useful in identifying risk factors: Correlational research can be used to identify potential risk factors for negative outcomes. By identifying variables that are correlated with negative outcomes, researchers can develop interventions or policies to reduce the risk of negative outcomes.

- Useful in exploring new areas of research: Correlational research can be useful in exploring new areas of research, particularly when researchers are unsure of the direction of the relationship between variables. By conducting correlational research, researchers can identify potential areas for further investigation.

Limitation of Correlational Research

Correlational research also has several limitations that should be taken into account:

- Cannot establish causation: Correlational research cannot establish causation, meaning that it cannot determine whether one variable causes changes in another variable. This is because it is not possible to control all possible confounding variables that could affect the relationship between the variables being studied.

- Directionality problem: The directionality problem refers to the difficulty of determining which variable is influencing the other. For example, a correlation may exist between happiness and social support, but it is not clear whether social support causes happiness, or whether happy people are more likely to have social support.

- Third variable problem: The third variable problem refers to the possibility that a third variable, not included in the study, is responsible for the observed relationship between the two variables being studied.

- Limited generalizability: Correlational research is often limited in terms of its generalizability to other populations or settings. This is because the sample studied may not be representative of the larger population, or because the variables studied may behave differently in different contexts.

- Relies on self-reported data: Correlational research often relies on self-reported data, which can be subject to social desirability bias or other forms of response bias.

- Limited in explaining complex behaviors: Correlational research is limited in explaining complex behaviors that are influenced by multiple factors, such as personality traits, situational factors, and social context.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Triangulation in Research – Types, Methods and...

Qualitative Research Methods

Quantitative Research – Methods, Types and...

Exploratory Research – Types, Methods and...

Quasi-Experimental Research Design – Types...

Research Methods – Types, Examples and Guide

Want to create or adapt books like this? Learn more about how Pressbooks supports open publishing practices.

7.2 Correlational Research

Learning objectives.

- Define correlational research and give several examples.

- Explain why a researcher might choose to conduct correlational research rather than experimental research or another type of nonexperimental research.

What Is Correlational Research?

Correlational research is a type of nonexperimental research in which the researcher measures two variables and assesses the statistical relationship (i.e., the correlation) between them with little or no effort to control extraneous variables. There are essentially two reasons that researchers interested in statistical relationships between variables would choose to conduct a correlational study rather than an experiment. The first is that they do not believe that the statistical relationship is a causal one. For example, a researcher might evaluate the validity of a brief extraversion test by administering it to a large group of participants along with a longer extraversion test that has already been shown to be valid. This researcher might then check to see whether participants’ scores on the brief test are strongly correlated with their scores on the longer one. Neither test score is thought to cause the other, so there is no independent variable to manipulate. In fact, the terms independent variable and dependent variable do not apply to this kind of research.

The other reason that researchers would choose to use a correlational study rather than an experiment is that the statistical relationship of interest is thought to be causal, but the researcher cannot manipulate the independent variable because it is impossible, impractical, or unethical. For example, Allen Kanner and his colleagues thought that the number of “daily hassles” (e.g., rude salespeople, heavy traffic) that people experience affects the number of physical and psychological symptoms they have (Kanner, Coyne, Schaefer, & Lazarus, 1981). But because they could not manipulate the number of daily hassles their participants experienced, they had to settle for measuring the number of daily hassles—along with the number of symptoms—using self-report questionnaires. Although the strong positive relationship they found between these two variables is consistent with their idea that hassles cause symptoms, it is also consistent with the idea that symptoms cause hassles or that some third variable (e.g., neuroticism) causes both.

A common misconception among beginning researchers is that correlational research must involve two quantitative variables, such as scores on two extraversion tests or the number of hassles and number of symptoms people have experienced. However, the defining feature of correlational research is that the two variables are measured—neither one is manipulated—and this is true regardless of whether the variables are quantitative or categorical. Imagine, for example, that a researcher administers the Rosenberg Self-Esteem Scale to 50 American college students and 50 Japanese college students. Although this “feels” like a between-subjects experiment, it is a correlational study because the researcher did not manipulate the students’ nationalities. The same is true of the study by Cacioppo and Petty comparing college faculty and factory workers in terms of their need for cognition. It is a correlational study because the researchers did not manipulate the participants’ occupations.

Figure 7.2 “Results of a Hypothetical Study on Whether People Who Make Daily To-Do Lists Experience Less Stress Than People Who Do Not Make Such Lists” shows data from a hypothetical study on the relationship between whether people make a daily list of things to do (a “to-do list”) and stress. Notice that it is unclear whether this is an experiment or a correlational study because it is unclear whether the independent variable was manipulated. If the researcher randomly assigned some participants to make daily to-do lists and others not to, then it is an experiment. If the researcher simply asked participants whether they made daily to-do lists, then it is a correlational study. The distinction is important because if the study was an experiment, then it could be concluded that making the daily to-do lists reduced participants’ stress. But if it was a correlational study, it could only be concluded that these variables are statistically related. Perhaps being stressed has a negative effect on people’s ability to plan ahead (the directionality problem). Or perhaps people who are more conscientious are more likely to make to-do lists and less likely to be stressed (the third-variable problem). The crucial point is that what defines a study as experimental or correlational is not the variables being studied, nor whether the variables are quantitative or categorical, nor the type of graph or statistics used to analyze the data. It is how the study is conducted.

Figure 7.2 Results of a Hypothetical Study on Whether People Who Make Daily To-Do Lists Experience Less Stress Than People Who Do Not Make Such Lists

Data Collection in Correlational Research

Again, the defining feature of correlational research is that neither variable is manipulated. It does not matter how or where the variables are measured. A researcher could have participants come to a laboratory to complete a computerized backward digit span task and a computerized risky decision-making task and then assess the relationship between participants’ scores on the two tasks. Or a researcher could go to a shopping mall to ask people about their attitudes toward the environment and their shopping habits and then assess the relationship between these two variables. Both of these studies would be correlational because no independent variable is manipulated. However, because some approaches to data collection are strongly associated with correlational research, it makes sense to discuss them here. The two we will focus on are naturalistic observation and archival data. A third, survey research, is discussed in its own chapter.

Naturalistic Observation

Naturalistic observation is an approach to data collection that involves observing people’s behavior in the environment in which it typically occurs. Thus naturalistic observation is a type of field research (as opposed to a type of laboratory research). It could involve observing shoppers in a grocery store, children on a school playground, or psychiatric inpatients in their wards. Researchers engaged in naturalistic observation usually make their observations as unobtrusively as possible so that participants are often not aware that they are being studied. Ethically, this is considered to be acceptable if the participants remain anonymous and the behavior occurs in a public setting where people would not normally have an expectation of privacy. Grocery shoppers putting items into their shopping carts, for example, are engaged in public behavior that is easily observable by store employees and other shoppers. For this reason, most researchers would consider it ethically acceptable to observe them for a study. On the other hand, one of the arguments against the ethicality of the naturalistic observation of “bathroom behavior” discussed earlier in the book is that people have a reasonable expectation of privacy even in a public restroom and that this expectation was violated.

Researchers Robert Levine and Ara Norenzayan used naturalistic observation to study differences in the “pace of life” across countries (Levine & Norenzayan, 1999). One of their measures involved observing pedestrians in a large city to see how long it took them to walk 60 feet. They found that people in some countries walked reliably faster than people in other countries. For example, people in the United States and Japan covered 60 feet in about 12 seconds on average, while people in Brazil and Romania took close to 17 seconds.

Because naturalistic observation takes place in the complex and even chaotic “real world,” there are two closely related issues that researchers must deal with before collecting data. The first is sampling. When, where, and under what conditions will the observations be made, and who exactly will be observed? Levine and Norenzayan described their sampling process as follows:

Male and female walking speed over a distance of 60 feet was measured in at least two locations in main downtown areas in each city. Measurements were taken during main business hours on clear summer days. All locations were flat, unobstructed, had broad sidewalks, and were sufficiently uncrowded to allow pedestrians to move at potentially maximum speeds. To control for the effects of socializing, only pedestrians walking alone were used. Children, individuals with obvious physical handicaps, and window-shoppers were not timed. Thirty-five men and 35 women were timed in most cities. (p. 186)

Precise specification of the sampling process in this way makes data collection manageable for the observers, and it also provides some control over important extraneous variables. For example, by making their observations on clear summer days in all countries, Levine and Norenzayan controlled for effects of the weather on people’s walking speeds.

The second issue is measurement. What specific behaviors will be observed? In Levine and Norenzayan’s study, measurement was relatively straightforward. They simply measured out a 60-foot distance along a city sidewalk and then used a stopwatch to time participants as they walked over that distance. Often, however, the behaviors of interest are not so obvious or objective. For example, researchers Robert Kraut and Robert Johnston wanted to study bowlers’ reactions to their shots, both when they were facing the pins and then when they turned toward their companions (Kraut & Johnston, 1979). But what “reactions” should they observe? Based on previous research and their own pilot testing, Kraut and Johnston created a list of reactions that included “closed smile,” “open smile,” “laugh,” “neutral face,” “look down,” “look away,” and “face cover” (covering one’s face with one’s hands). The observers committed this list to memory and then practiced by coding the reactions of bowlers who had been videotaped. During the actual study, the observers spoke into an audio recorder, describing the reactions they observed. Among the most interesting results of this study was that bowlers rarely smiled while they still faced the pins. They were much more likely to smile after they turned toward their companions, suggesting that smiling is not purely an expression of happiness but also a form of social communication.

Naturalistic observation has revealed that bowlers tend to smile when they turn away from the pins and toward their companions, suggesting that smiling is not purely an expression of happiness but also a form of social communication.

sieneke toering – bowling big lebowski style – CC BY-NC-ND 2.0.

When the observations require a judgment on the part of the observers—as in Kraut and Johnston’s study—this process is often described as coding . Coding generally requires clearly defining a set of target behaviors. The observers then categorize participants individually in terms of which behavior they have engaged in and the number of times they engaged in each behavior. The observers might even record the duration of each behavior. The target behaviors must be defined in such a way that different observers code them in the same way. This is the issue of interrater reliability. Researchers are expected to demonstrate the interrater reliability of their coding procedure by having multiple raters code the same behaviors independently and then showing that the different observers are in close agreement. Kraut and Johnston, for example, video recorded a subset of their participants’ reactions and had two observers independently code them. The two observers showed that they agreed on the reactions that were exhibited 97% of the time, indicating good interrater reliability.

Archival Data

Another approach to correlational research is the use of archival data , which are data that have already been collected for some other purpose. An example is a study by Brett Pelham and his colleagues on “implicit egotism”—the tendency for people to prefer people, places, and things that are similar to themselves (Pelham, Carvallo, & Jones, 2005). In one study, they examined Social Security records to show that women with the names Virginia, Georgia, Louise, and Florence were especially likely to have moved to the states of Virginia, Georgia, Louisiana, and Florida, respectively.

As with naturalistic observation, measurement can be more or less straightforward when working with archival data. For example, counting the number of people named Virginia who live in various states based on Social Security records is relatively straightforward. But consider a study by Christopher Peterson and his colleagues on the relationship between optimism and health using data that had been collected many years before for a study on adult development (Peterson, Seligman, & Vaillant, 1988). In the 1940s, healthy male college students had completed an open-ended questionnaire about difficult wartime experiences. In the late 1980s, Peterson and his colleagues reviewed the men’s questionnaire responses to obtain a measure of explanatory style—their habitual ways of explaining bad events that happen to them. More pessimistic people tend to blame themselves and expect long-term negative consequences that affect many aspects of their lives, while more optimistic people tend to blame outside forces and expect limited negative consequences. To obtain a measure of explanatory style for each participant, the researchers used a procedure in which all negative events mentioned in the questionnaire responses, and any causal explanations for them, were identified and written on index cards. These were given to a separate group of raters who rated each explanation in terms of three separate dimensions of optimism-pessimism. These ratings were then averaged to produce an explanatory style score for each participant. The researchers then assessed the statistical relationship between the men’s explanatory style as college students and archival measures of their health at approximately 60 years of age. The primary result was that the more optimistic the men were as college students, the healthier they were as older men. Pearson’s r was +.25.

This is an example of content analysis —a family of systematic approaches to measurement using complex archival data. Just as naturalistic observation requires specifying the behaviors of interest and then noting them as they occur, content analysis requires specifying keywords, phrases, or ideas and then finding all occurrences of them in the data. These occurrences can then be counted, timed (e.g., the amount of time devoted to entertainment topics on the nightly news show), or analyzed in a variety of other ways.

Key Takeaways

- Correlational research involves measuring two variables and assessing the relationship between them, with no manipulation of an independent variable.

- Correlational research is not defined by where or how the data are collected. However, some approaches to data collection are strongly associated with correlational research. These include naturalistic observation (in which researchers observe people’s behavior in the context in which it normally occurs) and the use of archival data that were already collected for some other purpose.

Discussion: For each of the following, decide whether it is most likely that the study described is experimental or correlational and explain why.

- An educational researcher compares the academic performance of students from the “rich” side of town with that of students from the “poor” side of town.

- A cognitive psychologist compares the ability of people to recall words that they were instructed to “read” with their ability to recall words that they were instructed to “imagine.”

- A manager studies the correlation between new employees’ college grade point averages and their first-year performance reports.

- An automotive engineer installs different stick shifts in a new car prototype, each time asking several people to rate how comfortable the stick shift feels.

- A food scientist studies the relationship between the temperature inside people’s refrigerators and the amount of bacteria on their food.

- A social psychologist tells some research participants that they need to hurry over to the next building to complete a study. She tells others that they can take their time. Then she observes whether they stop to help a research assistant who is pretending to be hurt.

Kanner, A. D., Coyne, J. C., Schaefer, C., & Lazarus, R. S. (1981). Comparison of two modes of stress measurement: Daily hassles and uplifts versus major life events. Journal of Behavioral Medicine, 4 , 1–39.

Kraut, R. E., & Johnston, R. E. (1979). Social and emotional messages of smiling: An ethological approach. Journal of Personality and Social Psychology, 37 , 1539–1553.

Levine, R. V., & Norenzayan, A. (1999). The pace of life in 31 countries. Journal of Cross-Cultural Psychology, 30 , 178–205.

Pelham, B. W., Carvallo, M., & Jones, J. T. (2005). Implicit egotism. Current Directions in Psychological Science, 14 , 106–110.

Peterson, C., Seligman, M. E. P., & Vaillant, G. E. (1988). Pessimistic explanatory style is a risk factor for physical illness: A thirty-five year longitudinal study. Journal of Personality and Social Psychology, 55 , 23–27.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

- Skip to secondary menu

- Skip to main content

- Skip to primary sidebar

Statistics By Jim

Making statistics intuitive

Correlational Study Overview & Examples

By Jim Frost 2 Comments

What is a Correlational Study?

A correlational study is an experimental design that evaluates only the correlation between variables. The researchers record measurements but do not control or manipulate the variables. Correlational research is a form of observational study .

A correlation indicates that as the value of one variable increases, the other tends to change in a specific direction:

- Positive correlation : Two variables increase or decrease together (as height increases, weight tends to increase).

- Negative correlation : As one variable increases, the other tends to decrease (as school absences increase, grades tend to fall).

- No correlation : No relationship exists between the two variables. As one increases, the other does not change in a specific direction (as absences increase, height doesn’t tend to increase or decrease).

For example, researchers conducting correlational research explored the relationship between social media usage and levels of anxiety in young adults. Participants reported their demographic information and daily time on various social media platforms and completed a standardized anxiety assessment tool.

The correlational study looked for relationships between social media usage and anxiety. Is increased social media usage associated with higher anxiety? Is it worse for particular demographics?

Learn more about Interpreting Correlation .

Using Correlational Research

Correlational research design is crucial in various disciplines, notably psychology and medicine. This type of design is generally cheaper, easier, and quicker to conduct than an experiment because the researchers don’t control any variables or conditions. Consequently, these studies often serve as an initial assessment, especially when random assignment and controlling variables for a true experiment are not feasible or unethical.

However, an unfortunate aspect of a correlational study is its limitation in establishing causation. While these studies can reveal connections between variables, they cannot prove that altering one variable will cause changes in another. Hence, correlational research can determine whether relationships exist but cannot confirm causality.

Remember, correlation doesn’t necessarily imply causation !

Correlational Study vs Experiment

The difference between the two designs is simple.

In a correlational study, the researchers don’t systematically control any variables. They’re simply observing events and do not want to influence outcomes.

In an experiment, researchers manipulate variables and explicitly hope to affect the outcomes. For example, they might control the treatment condition by giving a medication or placebo to each subject. They also randomly assign subjects to the control and treatment groups, which helps establish causality.

Learn more about Randomized Controlled Trials (RCTs) , which statisticians consider to be true experiments.

Types of Correlation Studies and Examples

Researchers divide these studies into three broad types.

Secondary Data Sources

One approach to correlational research is to utilize pre-existing data, which may include official records, public polls, or data from earlier studies. This method can be cost-effective and time-efficient because other researchers have already gathered the data. These existing data sources can provide large sample sizes and longitudinal data , thereby showing relationship trends.

However, it also comes with potential drawbacks. The data may be incomplete or irrelevant to the new research question. Additionally, as a researcher, you won’t have control over the original data collection methods, potentially impacting the data’s reliability and validity .

Using existing data makes this approach a retrospective study .

Surveys in Correlation Research

Surveys are a great way to collect data for correlational studies while using a consistent instrument across all respondents. You can use various formats, such as in-person, online, and by phone. And you can ask the questions necessary to obtain the particular variables you need for your project. In short, it’s easy to customize surveys to match your study’s requirements.

However, you’ll need to carefully word all the questions to be clear and not introduce bias in the results. This process can take multiple iterations and pilot studies to produce the finished survey.

For example, you can use a survey to find correlations between various demographic variables and political opinions.

Naturalistic Observation

Naturalistic observation is a method of collecting field data for a correlational study. Researchers observe and measure variables in a natural environment. The process can include counting events, categorizing behavior, and describing outcomes without interfering with the activities.

For example, researchers might observe and record children’s behavior after watching television. Does a relationship exist between the type of television program and behaviors?

Naturalistic observations occur in a prospective study .

Analyzing Data from a Correlational Study

Statistical analysis of correlational research frequently involves correlation and regression analysis .

A correlation coefficient describes the strength and direction of the relationship between two variables with a single number.

Regression analysis can evaluate how multiple variables relate to a single outcome. For example, in the social media correlational study example, how do the demographic variables and daily social media usage collectively correlate with anxiety?

Curtis EA, Comiskey C, Dempsey O. Importance and use of correlational research . Nurse Researcher . 2016;23(6):20-25. doi:10.7748/nr.2016.e1382

Share this:

Reader Interactions

January 14, 2024 at 4:34 pm

Hi Jim. Have you written a blog note dedicated to clinical trials? If not, besides the note on hypothesis testing, are there other blogs ypo have written that touch on clinical trials?

January 14, 2024 at 5:49 pm

Hi Stan, I haven’t written a blog post specifically about clinical trials, but I have the following related posts:

Randomized Controlled Trials Clinical Trial about a COVID vaccine Clinical Trials about flu vaccines

Comments and Questions Cancel reply

Have a language expert improve your writing

Run a free plagiarism check in 10 minutes, automatically generate references for free.

- Knowledge Base

- Methodology

- Correlational Research | Guide, Design & Examples

Correlational Research | Guide, Design & Examples

Published on 5 May 2022 by Pritha Bhandari . Revised on 5 December 2022.

A correlational research design investigates relationships between variables without the researcher controlling or manipulating any of them.

A correlation reflects the strength and/or direction of the relationship between two (or more) variables. The direction of a correlation can be either positive or negative.

| Positive correlation | Both variables change in the same direction | As height increases, weight also increases |

|---|---|---|

| Negative correlation | The variables change in opposite directions | As coffee consumption increases, tiredness decreases |

| Zero correlation | There is no relationship between the variables | Coffee consumption is not correlated with height |

Table of contents

Correlational vs experimental research, when to use correlational research, how to collect correlational data, how to analyse correlational data, correlation and causation, frequently asked questions about correlational research.

Correlational and experimental research both use quantitative methods to investigate relationships between variables. But there are important differences in how data is collected and the types of conclusions you can draw.

| Correlational research | Experimental research | |

|---|---|---|

| Purpose | Used to test strength of association between variables | Used to test cause-and-effect relationships between variables |

| Variables | Variables are only observed with no manipulation or intervention by researchers | An is manipulated and a dependent variable is observed |

| Control | Limited is used, so other variables may play a role in the relationship | are controlled so that they can’t impact your variables of interest |

| Validity | High : you can confidently generalise your conclusions to other populations or settings | High : you can confidently draw conclusions about causation |

Prevent plagiarism, run a free check.

Correlational research is ideal for gathering data quickly from natural settings. That helps you generalise your findings to real-life situations in an externally valid way.

There are a few situations where correlational research is an appropriate choice.

To investigate non-causal relationships

You want to find out if there is an association between two variables, but you don’t expect to find a causal relationship between them.

Correlational research can provide insights into complex real-world relationships, helping researchers develop theories and make predictions.

To explore causal relationships between variables

You think there is a causal relationship between two variables, but it is impractical, unethical, or too costly to conduct experimental research that manipulates one of the variables.

Correlational research can provide initial indications or additional support for theories about causal relationships.

To test new measurement tools

You have developed a new instrument for measuring your variable, and you need to test its reliability or validity .

Correlational research can be used to assess whether a tool consistently or accurately captures the concept it aims to measure.

There are many different methods you can use in correlational research. In the social and behavioural sciences, the most common data collection methods for this type of research include surveys, observations, and secondary data.

It’s important to carefully choose and plan your methods to ensure the reliability and validity of your results. You should carefully select a representative sample so that your data reflects the population you’re interested in without bias .

In survey research , you can use questionnaires to measure your variables of interest. You can conduct surveys online, by post, by phone, or in person.

Surveys are a quick, flexible way to collect standardised data from many participants, but it’s important to ensure that your questions are worded in an unbiased way and capture relevant insights.

Naturalistic observation

Naturalistic observation is a type of field research where you gather data about a behaviour or phenomenon in its natural environment.

This method often involves recording, counting, describing, and categorising actions and events. Naturalistic observation can include both qualitative and quantitative elements, but to assess correlation, you collect data that can be analysed quantitatively (e.g., frequencies, durations, scales, and amounts).

Naturalistic observation lets you easily generalise your results to real-world contexts, and you can study experiences that aren’t replicable in lab settings. But data analysis can be time-consuming and unpredictable, and researcher bias may skew the interpretations.

Secondary data

Instead of collecting original data, you can also use data that has already been collected for a different purpose, such as official records, polls, or previous studies.

Using secondary data is inexpensive and fast, because data collection is complete. However, the data may be unreliable, incomplete, or not entirely relevant, and you have no control over the reliability or validity of the data collection procedures.

After collecting data, you can statistically analyse the relationship between variables using correlation or regression analyses, or both. You can also visualise the relationships between variables with a scatterplot.

Different types of correlation coefficients and regression analyses are appropriate for your data based on their levels of measurement and distributions .

Correlation analysis

Using a correlation analysis, you can summarise the relationship between variables into a correlation coefficient : a single number that describes the strength and direction of the relationship between variables. With this number, you’ll quantify the degree of the relationship between variables.

The Pearson product-moment correlation coefficient, also known as Pearson’s r , is commonly used for assessing a linear relationship between two quantitative variables.

Correlation coefficients are usually found for two variables at a time, but you can use a multiple correlation coefficient for three or more variables.

Regression analysis

With a regression analysis , you can predict how much a change in one variable will be associated with a change in the other variable. The result is a regression equation that describes the line on a graph of your variables.

You can use this equation to predict the value of one variable based on the given value(s) of the other variable(s). It’s best to perform a regression analysis after testing for a correlation between your variables.

It’s important to remember that correlation does not imply causation . Just because you find a correlation between two things doesn’t mean you can conclude one of them causes the other, for a few reasons.

Directionality problem

If two variables are correlated, it could be because one of them is a cause and the other is an effect. But the correlational research design doesn’t allow you to infer which is which. To err on the side of caution, researchers don’t conclude causality from correlational studies.

Third variable problem

A confounding variable is a third variable that influences other variables to make them seem causally related even though they are not. Instead, there are separate causal links between the confounder and each variable.

In correlational research, there’s limited or no researcher control over extraneous variables . Even if you statistically control for some potential confounders, there may still be other hidden variables that disguise the relationship between your study variables.

Although a correlational study can’t demonstrate causation on its own, it can help you develop a causal hypothesis that’s tested in controlled experiments.

A correlation reflects the strength and/or direction of the association between two or more variables.

- A positive correlation means that both variables change in the same direction.

- A negative correlation means that the variables change in opposite directions.

- A zero correlation means there’s no relationship between the variables.

A correlational research design investigates relationships between two variables (or more) without the researcher controlling or manipulating any of them. It’s a non-experimental type of quantitative research .

Controlled experiments establish causality, whereas correlational studies only show associations between variables.

- In an experimental design , you manipulate an independent variable and measure its effect on a dependent variable. Other variables are controlled so they can’t impact the results.

- In a correlational design , you measure variables without manipulating any of them. You can test whether your variables change together, but you can’t be sure that one variable caused a change in another.

In general, correlational research is high in external validity while experimental research is high in internal validity .

A correlation is usually tested for two variables at a time, but you can test correlations between three or more variables.

A correlation coefficient is a single number that describes the strength and direction of the relationship between your variables.

Different types of correlation coefficients might be appropriate for your data based on their levels of measurement and distributions . The Pearson product-moment correlation coefficient (Pearson’s r ) is commonly used to assess a linear relationship between two quantitative variables.

Cite this Scribbr article

If you want to cite this source, you can copy and paste the citation or click the ‘Cite this Scribbr article’ button to automatically add the citation to our free Reference Generator.

Bhandari, P. (2022, December 05). Correlational Research | Guide, Design & Examples. Scribbr. Retrieved 30 September 2024, from https://www.scribbr.co.uk/research-methods/correlational-research-design/

Is this article helpful?

Pritha Bhandari

Other students also liked, a quick guide to experimental design | 5 steps & examples, quasi-experimental design | definition, types & examples, qualitative vs quantitative research | examples & methods.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

The PMC website is updating on October 15, 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- J Vasc Bras

- v.17(4); Oct-Dec 2018

Correlation analysis in clinical and experimental studies

Hélio amante miot.

1 Universidade Estadual Paulista – UNESP, Faculdade de Medicina de Botucatu, Departamento de Dermatologia e Radioterapia, Botucatu, SP, Brasil.

It is common for researchers conducting clinical or biomedical studies to be interested in investigating whether the values of two or more quantitative variables change in conjunction in a given individual or object of study. In other words, whether when the value of one variable increases, the value of another tends to increase/ or, inversely, reduce, progressively. There are many different statistical tests that explore the intensity and direction of this mutual behavior of variables, known as correlation tests. 1 , 2

The first step in analyzing correlations between two quantitative variables should be to look at a scatter plot, in order to discern whether there is a gradual variability between the sets of variables, whether this variation is monotonic (predominantly increasing or decreasing), if it follows a proportional tendency (linear), and whether the underlying distribution of the data is normal. 2 - 4 Different combinations of these premises indicate a need for different techniques for correlation analysis.

Figure 1 illustrates the distribution of values of four hypothetical variables (V1, V2, V3, and V4), which exhibit data that follow a normal distribution (Shapiro-Wilk, p > 0.32).

Variables V1 and V2 exhibit simultaneously increasing values, which are distributed around an underlying imaginary (ideal) straight line, which describes the trajectory of the data. It can be stated that there is a positive linear correlation between V1 and V2. For example, Rossi et al. identified a strong positive correlation (ρ = 0.82; p < 0.01) between cores on the Venous Symptoms Clinical Severity Scale and pain in chronic venous disease. 5

In contrast, variables V1 and V3 exhibit antagonistic behavior: when the values of one increase, the values of the other reduce. It can be stated that there is a negative linear correlation between V1 and V3, just as Ohki and Bellen identified a moderate negative correlation (ρ = -0.65; p < 0.01) between average regional temperature and the incidence of venous thrombosis. 6

It can also be observed that the values for the correlation between V1 and V3 are closer to the imaginary straight line than the values for the correlation between V1 and V2. This invites the conclusion that the relationship between the values of the variables V1 and V3 is stronger than the relationship between V1 and V2, even though the directions are opposite.

Comparisons of the data for V4, whether with V1, V2, or V3, do not reveal gradually increasing or decreasing behavior. This leads to the conclusion that V4 does not exhibit a correlation with the other variables.

The most widely-used technique for evaluating the correlation between two quantitative variables is Pearson’s product-moment correlation coefficient, or Pearson’s r , which requires that both samples follow a normal distribution and that the relationship between the two variables is linear. 2 , 7 Failure to adhere to these prerequisites leads to erroneous conclusions, even when working with large sample sizes.

However, it is very common that samples of clinical and demographic data do not follow a normal distribution (for example, the distributions of income, quality of life indexes, disease severity indexes, years of study, and number of children). The most widely used options for investigating correlations between variables that do not exhibit normal distributions are the Spearman rank order correlation and the Kendall rank correlation coefficient ( Tau -b), which substitute the original data for their ordered ranks. 2 , 7 , 8 These methods are also used in cases in which at least one of the variables has ordinal characteristics (for example, functional class, educational level, cancer staging, social class).

Another advantage of using the Spearman and Kendall nonparametric tests is that they are not restricted to linear correlations, as long as they exhibit monotonic behavior. In other words, they must exhibit a gradual relationship in the same direction (rising or falling) for the whole domain of the data studied.

In Figure 2 , it can be observed that there is no direct proportionality (linear) between the data for V1 and V5; rather there is an increase that is apparently exponential. Since the variation is monotonic (the data for V1 increase as a function of V5), the Spearman and Kendall coefficients can be used to estimate the correlation. In this example, to use the Pearson’s coefficient, it will be necessary to log transform the data to achieve a certain linearity of correlation ( Figure 2 : V1 x V6). It should be noted that the ρ and τ coefficients give the same resultant values for the correlations V1 vs. V5 and V1 vs. V6, since V6 is a transformation of V5 into monotonic data.

In biomedical sciences, the Spearman coefficient (ρ or rho) is the most widely used for evaluating the correlation between two quantitative variables, probably because it is similar to the Pearson method, once the data have been substituted for their ordered ranks. However, care should be taken when generalizing conclusions of interpretations of the correlation between the values of the ranks of data from the variables and the original data.

In contrast, the Kendall Tau-b coefficient (τ or t b ), has mathematical properties that make it more robust to extreme data (outliers), give it a greater capacity for populational inference and a smaller estimation error. While significance (p-value) and direction (+ or -) are similar to those of the Spearman method, the coefficient returns less extreme values and interpretation is different, since it signifies the percentage of observed pairs that take the same direction in the sample (agreement) minus the pairs that do not agree. For example, a τ coefficient of 0.60 signifies that 80% of pairs agree, while 20% disagree (τ = 0.80 - 0.20 = 0.60). 9

Transformation of data (for example, logarithmic, square root, 1/x) in order to obtain a normal distribution to enable Pearson’s coefficient to be tested is a valid option for samples with asymmetrical data distributions ( Figure 2 : V1 x V6). However, it should be borne in mind that, in common with techniques that employ ordered ranks, transformation of data alters the scale between measures and impacts on direct interpretation of the measures of effect. 7

The magnitude of the effect of the correlation between two or more variables is represented by correlation coefficients, which take values from -1 to +1, passing through zero (absence of correlation). Positive coefficients ( r > 0) indicate a direct relationship ( Figure 1 : V1 x V2) between variables; while negative coefficients ( r < 0) indicate an inverse correlation ( Figure 1 : V1 x V3 and V2 x V3).

Each correlation test has its own coefficient, demanding its own interpretation. In general, for the coefficients Pearson’s r and Spearman’s ρ, values from 0 to 0.3 (or 0 to -0.3) are biologically negligible; those from 0.31 to 0.5 (or -0.31 to -0.5) are weak; from 0.51 to 0.7 (or -0.51 and -0.7) are moderate; from 0.71 to 0.9 (or -0.71 to 0.9) are strong correlations; and correlations > 0.9 (or < -0.9) are considered very strong. 8

One peculiarity of Pearson’s r coefficient is that the square of its value provides an estimate of the percentage of variability in the values of one variable that is explained by the variability in the other. For example, a coefficient of r = 0.7 indicates that 49% of the variability of one variable can be explained by, or is followed by, the variation in the values of the other, in the sample tested.

In clinical and biomedical studies, the majority of coefficients with biological significance fall in the range of 0.5 to 0.8 (or -0.5 to -0.8). This is the result of errors of measurement, laboratory techniques, or variation of instruments, which affect the precision of measurements, and also, and primarily, because biological phenomena are affected by multifactorial influences and complex interactions, in which the variation of a single variable cannot totally explain the behavior of another. 2

Tests of the significance of a correlation between quantitative variables are based on the null hypothesis that there is no correlation between the variables ( r = 0), which makes the p-value subject to influence both from the dimension of the effect and from the sample size. This means that caution is necessary when interpreting coefficients that result in a weak correlation (r < 0.3), but have highly significant p-values, caused by overly-large sample sizes. Calculation of sample sizes for analysis of correlations has been explored in an earlier edition of this periodical. 10

Correlation coefficients have inferential properties and, in scientific texts, should preferably be expressed with their 95% confidence intervals and significance (p-value), for example: ρ = 0.76 (95%CI 0.61-0.91), p < 0.01. 11 , 12 In the case of multiple comparisons, coefficients can be shown on their own, in the form of a matrix, and with their significance indicated to facilitate interpretation of the data, as Brianezi et al. presented the 28 correlations between seven histological parameters in a single table. 13 Special cases involving hundreds or thousands of correlations may demand graphical representation techniques, such as the color heatmaps often used in genome studies, just as Hsu et al. represented 4,930 correlations between (85x58) genomic and metabolomic variables. 14