Evaluating Research – Process, Examples and Methods

Evaluating Research

Definition:

Evaluating Research refers to the process of assessing the quality, credibility, and relevance of a research study or project. This involves examining the methods, data, and results of the research in order to determine its validity, reliability, and usefulness. Evaluating research can be done by both experts and non-experts in the field, and involves critical thinking, analysis, and interpretation of the research findings.

Research Evaluation Process

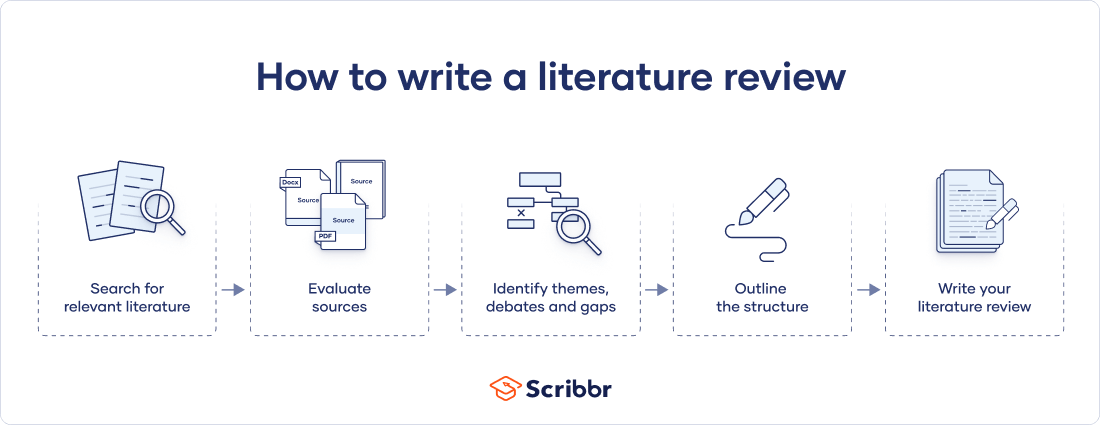

The process of evaluating research typically involves the following steps:

Identify the Research Question

The first step in evaluating research is to identify the research question or problem that the study is addressing. This will help you to determine whether the study is relevant to your needs.

Assess the Study Design

The study design refers to the methodology used to conduct the research. You should assess whether the study design is appropriate for the research question and whether it is likely to produce reliable and valid results.

Evaluate the Sample

The sample refers to the group of participants or subjects who are included in the study. You should evaluate whether the sample size is adequate and whether the participants are representative of the population under study.
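As a rough illustration of what "adequate" sample size can mean, the sketch below computes the approximate 95% margin of error for a survey proportion. It assumes a simple random sample and uses the normal approximation; the function name and the example sizes are hypothetical and do not come from any particular study.

```python
import math

def margin_of_error(sample_size, proportion=0.5, z=1.96):
    """Approximate margin of error for a proportion.

    Assumes a simple random sample and the normal approximation.
    proportion=0.5 is the most conservative (widest) case; z=1.96
    corresponds to a 95% confidence level.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)

# Hypothetical sample sizes, for illustration only
for n in (100, 500, 2000):
    print(n, round(margin_of_error(n), 3))
```

A larger sample narrows the margin of error, but this calculation says nothing about whether participants are representative of the population; that depends on how they were selected.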

Review the Data Collection Methods

You should review the data collection methods used in the study to ensure that they are valid and reliable. This includes assessing the measures used to collect data and the procedures used to collect data.

Examine the Statistical Analysis

Statistical analysis refers to the methods used to analyze the data. You should examine whether the statistical analysis is appropriate for the research question and whether it is likely to produce valid and reliable results.

Assess the Conclusions

You should evaluate whether the data support the conclusions drawn from the study and whether they are relevant to the research question.

Consider the Limitations

Finally, you should consider the limitations of the study, including any potential biases or confounding factors that may have influenced the results.

Evaluating Research Methods

Common methods for evaluating research are as follows:

- Peer review: Peer review is a process where experts in the field review a study before it is published. This helps ensure that the study is accurate, valid, and relevant to the field.

- Critical appraisal: Critical appraisal involves systematically evaluating a study based on specific criteria. This helps assess the quality of the study and the reliability of the findings.

- Replication: Replication involves repeating a study to test the validity and reliability of the findings. This can help identify any errors or biases in the original study.

- Meta-analysis: Meta-analysis is a statistical method that combines the results of multiple studies to provide a more comprehensive understanding of a particular topic. This can help identify patterns or inconsistencies across studies.

- Consultation with experts: Consulting with experts in the field can provide valuable insights into the quality and relevance of a study. Experts can also help identify potential limitations or biases in the study.

- Review of funding sources: Examining the funding sources of a study can help identify any potential conflicts of interest or biases that may have influenced the study design or interpretation of results.
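To make the idea of combining results in a meta-analysis concrete, here is a minimal sketch of one common approach, fixed-effect inverse-variance pooling. The text above does not prescribe a particular pooling method, so this choice is an assumption, and the effect estimates and standard errors are made up for illustration.

```python
import math

def pool_fixed_effect(estimates, standard_errors):
    """Inverse-variance weighted average of study effect estimates.

    Each study is weighted by 1 / SE^2, so more precise studies
    contribute more to the pooled estimate.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se

# Hypothetical effect estimates and standard errors from three studies
estimates = [0.30, 0.10, 0.20]
standard_errors = [0.10, 0.20, 0.10]

pooled, pooled_se = pool_fixed_effect(estimates, standard_errors)
print(round(pooled, 3), round(pooled_se, 3))
```

A fixed-effect model assumes all studies estimate the same underlying effect; when studies differ substantially, a random-effects model is usually considered instead.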

Example of Evaluating Research

A sample research evaluation for students:

Title of the Study: The Effects of Social Media Use on Mental Health among College Students

Sample Size: 500 college students

Sampling Technique : Convenience sampling

- Sample Size: A sample of 500 college students is moderate in size and gives reasonable statistical precision, but size alone does not make a sample representative. Representativeness depends mainly on how participants were selected, so a random sampling technique would do more to support generalization than simply recruiting more participants.

- Sampling Technique : Convenience sampling is a non-probability sampling technique, which means that the sample may not be representative of the population. This technique may introduce bias into the study since the participants are self-selected and may not be representative of the entire college student population. Therefore, the results of this study may not be generalizable to other populations.

- Participant Characteristics: The study does not provide any information about the demographic characteristics of the participants, such as age, gender, race, or socioeconomic status. This information is important because social media use and mental health may vary among different demographic groups.

- Data Collection Method: The study used a self-administered survey to collect data. Self-administered surveys may be subject to response bias and may not accurately reflect participants’ actual behaviors and experiences.

- Data Analysis: The study used descriptive statistics and regression analysis to analyze the data. Descriptive statistics provide a summary of the data, while regression analysis is used to examine the relationship between two or more variables. However, the study did not provide information about the statistical significance of the results or the effect sizes.

Overall, while the study provides some insights into the relationship between social media use and mental health among college students, the use of a convenience sampling technique and the lack of information about participant characteristics limit the generalizability of the findings. In addition, the use of self-administered surveys may introduce bias into the study, and the lack of information about the statistical significance of the results limits the interpretation of the findings.
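The evaluation above notes that the study reported neither effect sizes nor statistical significance. The sketch below shows one way such figures could be reported for a relationship between two variables: Pearson's r as the effect size and a permutation test for the p-value. The data are entirely hypothetical, and the permutation test is a stand-in chosen because it needs only the standard library; it is not the analysis used in the example study.

```python
import math
import random

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def permutation_p_value(xs, ys, n_permutations=2000, seed=0):
    """Two-sided permutation p-value for the correlation.

    Shuffles ys repeatedly and counts how often the shuffled |r|
    is at least as large as the observed |r|.
    """
    rng = random.Random(seed)
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        if abs(pearson_r(xs, shuffled)) >= observed:
            extreme += 1
    return (extreme + 1) / (n_permutations + 1)

# Hypothetical data: daily hours of social media use and a distress score
hours = [1, 2, 2, 3, 4, 5, 5, 6, 7, 8]
distress = [10, 12, 11, 14, 13, 17, 16, 18, 20, 19]

r = pearson_r(hours, distress)
p = permutation_p_value(hours, distress)
print(f"r = {r:.3f}, r^2 = {r * r:.3f}, permutation p = {p:.4f}")
```

Reporting both numbers matters: the p-value speaks to whether the association could plausibly be chance, while r (or r squared) indicates how strong the association is.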

Note: The example above is only a sample for students. Do not copy and paste it directly into your assignment; do your own research for academic purposes.

Applications of Evaluating Research

Here are some of the applications of evaluating research:

- Identifying reliable sources : By evaluating research, researchers, students, and other professionals can identify the most reliable sources of information to use in their work. They can determine the quality of research studies, including the methodology, sample size, data analysis, and conclusions.

- Validating findings: Evaluating research can help to validate findings from previous studies. By examining the methodology and results of a study, researchers can determine if the findings are reliable and if they can be used to inform future research.

- Identifying knowledge gaps: Evaluating research can also help to identify gaps in current knowledge. By examining the existing literature on a topic, researchers can determine areas where more research is needed, and they can design studies to address these gaps.

- Improving research quality : Evaluating research can help to improve the quality of future research. By examining the strengths and weaknesses of previous studies, researchers can design better studies and avoid common pitfalls.

- Informing policy and decision-making : Evaluating research is crucial in informing policy and decision-making in many fields. By examining the evidence base for a particular issue, policymakers can make informed decisions that are supported by the best available evidence.

- Enhancing education : Evaluating research is essential in enhancing education. Educators can use research findings to improve teaching methods, curriculum development, and student outcomes.

Purpose of Evaluating Research

Here are some of the key purposes of evaluating research:

- Determine the reliability and validity of research findings : By evaluating research, researchers can determine the quality of the study design, data collection, and analysis. They can determine whether the findings are reliable, valid, and generalizable to other populations.

- Identify the strengths and weaknesses of research studies: Evaluating research helps to identify the strengths and weaknesses of research studies, including potential biases, confounding factors, and limitations. This information can help researchers to design better studies in the future.

- Inform evidence-based decision-making: Evaluating research is crucial in informing evidence-based decision-making in many fields, including healthcare, education, and public policy. Policymakers, educators, and clinicians rely on research evidence to make informed decisions.

- Identify research gaps : By evaluating research, researchers can identify gaps in the existing literature and design studies to address these gaps. This process can help to advance knowledge and improve the quality of research in a particular field.

- Ensure research ethics and integrity : Evaluating research helps to ensure that research studies are conducted ethically and with integrity. Researchers must adhere to ethical guidelines to protect the welfare and rights of study participants and to maintain the trust of the public.

Characteristics of Evaluating Research

The characteristics to assess when evaluating research are as follows:

- Research question/hypothesis: A good research question or hypothesis should be clear, concise, and well-defined. It should address a significant problem or issue in the field and be grounded in relevant theory or prior research.

- Study design: The research design should be appropriate for answering the research question and be clearly described in the study. The study design should also minimize bias and confounding variables.

- Sampling : The sample should be representative of the population of interest and the sampling method should be appropriate for the research question and study design.

- Data collection : The data collection methods should be reliable and valid, and the data should be accurately recorded and analyzed.

- Results : The results should be presented clearly and accurately, and the statistical analysis should be appropriate for the research question and study design.

- Interpretation of results : The interpretation of the results should be based on the data and not influenced by personal biases or preconceptions.

- Generalizability: The study findings should be generalizable to the population of interest and relevant to other settings or contexts.

- Contribution to the field : The study should make a significant contribution to the field and advance our understanding of the research question or issue.

Advantages of Evaluating Research

Evaluating research has several advantages, including:

- Ensuring accuracy and validity : By evaluating research, we can ensure that the research is accurate, valid, and reliable. This ensures that the findings are trustworthy and can be used to inform decision-making.

- Identifying gaps in knowledge : Evaluating research can help identify gaps in knowledge and areas where further research is needed. This can guide future research and help build a stronger evidence base.

- Promoting critical thinking: Evaluating research requires critical thinking skills, which can be applied in other areas of life. By evaluating research, individuals can develop their critical thinking skills and become more discerning consumers of information.

- Improving the quality of research : Evaluating research can help improve the quality of research by identifying areas where improvements can be made. This can lead to more rigorous research methods and better-quality research.

- Informing decision-making: By evaluating research, we can make informed decisions based on the evidence. This is particularly important in fields such as medicine and public health, where decisions can have significant consequences.

- Advancing the field : Evaluating research can help advance the field by identifying new research questions and areas of inquiry. This can lead to the development of new theories and the refinement of existing ones.

Limitations of Evaluating Research

Limitations of Evaluating Research are as follows:

- Time-consuming: Evaluating research can be time-consuming, particularly if the study is complex or requires specialized knowledge. This can be a barrier for individuals who are not experts in the field or who have limited time.

- Subjectivity : Evaluating research can be subjective, as different individuals may have different interpretations of the same study. This can lead to inconsistencies in the evaluation process and make it difficult to compare studies.

- Limited generalizability: The findings of a study may not be generalizable to other populations or contexts. This limits the usefulness of the study and may make it difficult to apply the findings to other settings.

- Publication bias: Research that does not find significant results may be less likely to be published, which can create a bias in the published literature. This can limit the amount of information available for evaluation.

- Lack of transparency: Some studies may not provide enough detail about their methods or results, making it difficult to evaluate their quality or validity.

- Funding bias : Research funded by particular organizations or industries may be biased towards the interests of the funder. This can influence the study design, methods, and interpretation of results.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

How to Evaluate a Research Paper

Last Updated: July 4, 2023 Fact Checked

This article was co-authored by Matthew Snipp, PhD. C. Matthew Snipp is the Burnet C. and Mildred Finley Wohlford Professor of Humanities and Sciences in the Department of Sociology at Stanford University. He is also the Director for the Institute for Research in the Social Sciences’ Secure Data Center. He has been a Research Fellow at the U.S. Bureau of the Census and a Fellow at the Center for Advanced Study in the Behavioral Sciences. He has published 3 books and over 70 articles and book chapters on demography, economic development, poverty and unemployment. He is also currently serving on the National Institute of Child Health and Development’s Population Science Subcommittee. He holds a Ph.D. in Sociology from the University of Wisconsin—Madison. There are 14 references cited in this article, which can be found at the bottom of the page. This article has been fact-checked, ensuring the accuracy of any cited facts and confirming the authority of its sources. This article has been viewed 57,265 times.

While writing a research paper can be tough, it can be even tougher to evaluate the strength of one. Whether you’re giving feedback to a fellow student or learning how to grade, you can judge a paper’s content and formatting to determine its excellence. By looking at the key attributes of successful research papers in the humanities and sciences, you can evaluate a given work against high standards.

Examining a Humanities Research Paper

- A strong thesis statement might be: “Single-sex education helps girls develop more self-confidence than co-education. Using scholarly research, I will illustrate how young girls develop greater self-confidence in single-sex schools, why their self-confidence becomes more developed in this setting, and what this means for the future of education in America.”

- A poor thesis statement might be: “Single-sex education is a schooling choice that separates the sexes.” This is a description of single-sex education rather than an opinion that can be supported with research.

- In the example outlined above, one could theoretically create a valid argument for single-sex education negatively impacting the self-esteem of girls. This depth makes the topic worthy of examination.

- Ask yourself if the thesis feels obvious. If it does, it is probably not a strong choice.

- The paragraphs should each start with a topic sentence so you feel introduced to the research at hand.

- A typical 5-paragraph essay will have 3 supporting points of 1 paragraph each. Most good research papers are longer than 5 paragraphs, though, and may have multiple paragraphs about just one point of many that support the thesis.

- The more points and correlating research that support the thesis statement, the better.

- A paper with little supporting research to bolster its points likely falls short of adequately illustrating its thesis.

- Quotations longer than 4 lines should be set off in block format for readability.

- For example, suppose the thesis statement is that cats are smarter than dogs. A good supporting point might be that cats are better hunters than dogs. The author could introduce a source well in support of this by saying, “In animal expert Linda Smith’s book Cats are King, Smith describes cats’ superior hunting abilities. On page 8 she says, ‘Cats are the most developed hunters in the civilized world.’ Because hunting requires incredible mental focus and skill, this statement supports my view that cats are smarter than dogs.”

- Any quotations that are used to summarize a text are likely not pulling their weight in the research paper. All quotations should serve as direct supports.

- Doing this is a mark of a strong research paper and helps convince the reader that the author’s thesis is good and valid.

- A good research paper shows that the thesis is important beyond just the narrow context of its question.

Evaluating a Sciences Research Paper

- An effective abstract should also include a brief interpretation of those results, which will be explored further later.

- The author should note any overall trends or major revelations discovered in their research.

- The author of a successful scientific research paper should note any boundaries that limit their research.

- For example: If the author conducted a study of pregnant women, but only women over 35 responded to a call for participants, the author should mention it. An element like this could affect the conclusions the author draws from their research.

- An effective methodology section should be written in the past tense, as the author has already decided and executed their research.

- A good results section shouldn’t include raw data from the research; it should explain the findings of the research.

- A strong discussion section could present new solutions to the original problem or suggest further areas of study given the results.

- A good discussion moves beyond interpreting findings and offers some subjective analysis.

- The author might think about the potential real-world consequences of ignoring the research results, for example.

Checking the Formatting of a Research Paper

- For example: “Growing up Stronger: Why Girls’ Schools Create More Confident Women” is more interesting and concise than “Single-Sex Schools are Better than Co-Ed Schools at Developing Self Confidence for Girls.”

- The author should clearly identify any ideas that are not their own and reference other works that make up the research landscape for context.

- Common style guides for research papers include the APA style guide, the Chicago Manual of Style, the MLA Handbook, and the Turabian citation guide.

References

- ↑ https://writingcenter.unc.edu/tips-and-tools/thesis-statements/

- ↑ https://owl.purdue.edu/owl/general_writing/academic_writing/establishing_arguments/index.html

- ↑ https://owl.english.purdue.edu/owl/resource/658/02

- ↑ https://advice.writing.utoronto.ca/planning/thesis-statements/

- ↑ https://writingcenter.unc.edu/tips-and-tools/quotations/

- ↑ https://wts.indiana.edu/writing-guides/writing-conclusions.html

- ↑ https://libguides.usc.edu/writingguide/abstract

- ↑ https://libguides.usc.edu/writingguide/introduction

- ↑ https://libguides.usc.edu/writingguide/methodology

- ↑ https://libguides.usc.edu/writingguide/results

- ↑ https://libguides.usc.edu/writingguide/discussion

- ↑ https://libguides.usc.edu/writingguide/conclusion

- ↑ https://writing.umn.edu/sws/assets/pdf/quicktips/academicessaystructures.pdf

- ↑ https://subjectguides.library.american.edu/citation

Evaluating Research Articles

Imagine for a moment that you are trying to answer a clinical (PICO) question regarding one of your patients/clients. Do you know how to determine if a research study is of high quality? Can you tell if it is applicable to your question? In evidence based practice, there are many things to look for in an article that will reveal its quality and relevance. This guide is a collection of resources and activities that will help you learn how to evaluate articles efficiently and accurately.

Is health research new to you? Or perhaps you're a little out of practice with reading it? The following questions will help illuminate an article's strengths or shortcomings. Ask them of yourself as you are reading an article:

- Is the article peer reviewed?

- Are there any conflicts of interest based on the author's affiliation or the funding source of the research?

- Are the research questions or objectives clearly defined?

- Is the study a systematic review or meta analysis?

- Is the study design appropriate for the research question?

- Is the sample size justified? Do the authors explain how it is representative of the wider population?

- Do the researchers describe the setting of data collection?

- Does the paper clearly describe the measurements used?

- Did the researchers use appropriate statistical measures?

- Are the research questions or objectives answered?

- Did the researchers account for confounding factors?

- Have the researchers only drawn conclusions about the groups represented in the research?

- Have the authors declared any conflicts of interest?

If the answers to these questions about an article you are reading are mostly YESes, then it's likely that the article is of decent quality. If the answers are mostly NOs, then it may be a good idea to move on to another article. If the YESes and NOs are roughly even, you'll have to decide for yourself whether the article is of good enough quality for you. Some factors, like a poor literature review, are not as important as the researchers neglecting to describe the measurements they used. As you read more research, you'll be able to more easily identify research that is well done vs. that which is not well done.
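The tallying rule described above can be sketched in a few lines. This is only an illustration: the checklist items are paraphrased so that "yes" is always the favourable answer, and the `margin` cutoff for "roughly even" is a hypothetical value, since the guide gives no number.

```python
def summarize_checklist(answers, margin=2):
    """Tally yes/no answers and return (yes, no, verdict).

    `margin` is a hypothetical threshold: if the yes and no counts
    differ by no more than this, the result is treated as mixed.
    """
    yes = sum(1 for value in answers.values() if value)
    no = len(answers) - yes
    if abs(yes - no) <= margin:
        verdict = "mixed: use your own judgment"
    elif yes > no:
        verdict = "likely decent quality"
    else:
        verdict = "consider another article"
    return yes, no, verdict

# Hypothetical answers for one article (True means a favourable answer)
answers = {
    "peer reviewed": True,
    "conflicts of interest declared": True,
    "objectives clearly defined": True,
    "design fits the question": True,
    "sample size justified": False,
    "measurements described": True,
    "appropriate statistics": False,
    "confounders addressed": True,
}

print(summarize_checklist(answers))
```

An unweighted tally treats every question as equally important, which the guide itself cautions against; a missing description of measurements, for instance, should count for more than a weak literature review.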

North Carolina State University Libraries has a short video that describes how to evaluate sources for credibility.

Determining if a research study has used appropriate statistical measures is one of the most critical and difficult steps in evaluating an article. The following links are great, quick resources for helping to better understand how to use statistics in health research.

- How to read a paper: Statistics for the non-statistician. II: “Significant” relations and their pitfalls. This article continues the checklist of questions that will help you to appraise the statistical validity of a paper. Citation: Greenhalgh, Trisha. How to read a paper: Statistics for the non-statistician. II: “Significant” relations and their pitfalls. BMJ 1997;315:422. *On the PMC PDF, you need to scroll past the first article to get to this one.*

- A consumer's guide to subgroup analysis The extent to which a clinician should believe and act on the results of subgroup analyses of data from randomized trials or meta-analyses is controversial. Guidelines are provided in this paper for making these decisions.

Statistical Versus Clinical Significance

When appraising studies, it's important to consider both the clinical and statistical significance of the research. This video offers a quick explanation of why.

If you have a little more time, this video explores statistical and clinical significance in more detail, including examples of how to calculate an effect size.

- Statistical vs. Clinical Significance Transcript Transcript document for the Statistical vs. Clinical Significance video.

- Effect Size Transcript Transcript document for the Effect Size video.

- P Values, Statistical Significance & Clinical Significance This handout also explains clinical and statistical significance.

- Absolute versus relative risk – making sense of media stories Understanding the difference between relative and absolute risk is essential to understanding statistical tests commonly found in research articles.
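The difference between relative and absolute risk is easiest to see with numbers. The sketch below uses invented trial counts to show how a large relative reduction can correspond to a very small absolute change; the function name and the data are hypothetical.

```python
def risk_summary(events_treated, n_treated, events_control, n_control):
    """Compute relative and absolute risk measures from event counts."""
    risk_treated = events_treated / n_treated
    risk_control = events_control / n_control
    if risk_control == 0:
        raise ValueError("control risk is zero; relative risk is undefined")
    absolute_reduction = risk_control - risk_treated
    return {
        "relative_risk": risk_treated / risk_control,
        "relative_risk_reduction": absolute_reduction / risk_control,
        "absolute_risk_reduction": absolute_reduction,
        # Number needed to treat is only meaningful when the treatment helps
        "number_needed_to_treat": (
            1 / absolute_reduction if absolute_reduction > 0 else None
        ),
    }

# Hypothetical trial: 1 event per 1000 treated vs. 2 events per 1000 controls
summary = risk_summary(1, 1000, 2, 1000)
for name, value in summary.items():
    print(name, value)
```

In this made-up case the treatment halves the risk in relative terms, yet the absolute reduction is one event per thousand people, which is the kind of gap between statistical headline and clinical importance that the resources above discuss.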

Critical appraisal is the process of systematically evaluating research using established and transparent methods. In critical appraisal, health professionals use validated checklists/worksheets as tools to guide their assessment of the research. It is a more advanced way of evaluating research than the more basic method explained above. To learn more about critical appraisal or to access critical appraisal tools, visit the websites below.

- Last Updated: Sep 19, 2024 8:04 PM

- URL: https://libguides.massgeneral.org/evaluatingarticles

- Perspect Clin Res

- v.12(2); Apr-Jun 2021

Critical appraisal of published research papers – A reinforcing tool for research methodology: Questionnaire-based study

Snehalata Gajbhiye

Department of Pharmacology and Therapeutics, Seth GS Medical College and KEM Hospital, Mumbai, Maharashtra, India

Raakhi Tripathi

Urwashi Parmar, Nishtha Khatri, Anirudha Potey

1 Department of Clinical Trials, Serum Institute of India, Pune, Maharashtra, India

Background and Objectives:

Critical appraisal of published research papers is routinely conducted as a journal club (JC) activity in pharmacology departments of various medical colleges across Maharashtra, and it forms an important part of their postgraduate curriculum. The objective of this study was to evaluate the perception of pharmacology postgraduate students and teachers toward use of critical appraisal as a reinforcing tool for research methodology. Evaluation of performance of the in-house pharmacology postgraduate students in the critical appraisal activity constituted secondary objective of the study.

Materials and Methods:

The study was conducted in two parts. In Part I, a cross-sectional questionnaire-based evaluation on perception toward critical appraisal activity was carried out among pharmacology postgraduate students and teachers. In Part II of the study, JC score sheets of 2nd- and 3rd-year pharmacology students over the past 4 years were evaluated.

Results:

One hundred and twenty-seven postgraduate students and 32 teachers participated in Part I of the study. A total of 118 (92.9%) students and 28 (87.5%) faculty members considered the critical appraisal activity to be beneficial for the students. JC score sheet assessments suggested that there was a statistically significant improvement in overall scores obtained by postgraduate students (n = 25) in their last JC as compared to the first JC.

Conclusion:

Journal article criticism is a crucial tool to develop a research attitude among postgraduate students. Participation in the JC activity led to the improvement in the skill of critical appraisal of published research articles, but this improvement was not educationally relevant.

INTRODUCTION

Critical appraisal of a research paper is defined as “The process of carefully and systematically examining research to judge its trustworthiness, value and relevance in a particular context.”[1] Since scientific literature is rapidly expanding with more than 12,000 articles being added to the MEDLINE database per week,[2] critical appraisal is very important to distinguish scientifically useful and well-written articles from imprecise articles.

Educational authorities like the Medical Council of India (MCI) and Maharashtra University of Health Sciences (MUHS) have stated in the pharmacology postgraduate curriculum that students must critically appraise research papers. To impart training in these skills, MCI and MUHS have emphasized the introduction of journal club (JC) activity for postgraduate (PG) students, wherein students review a published original research paper and state the merits and demerits of the paper. Abiding by this, pharmacology departments across various medical colleges in Maharashtra organize JC at frequent intervals[3,4] and students discuss varied aspects of the article with the teaching faculty of the department.[5] Moreover, this activity carries a significant weightage of marks in the pharmacology university examination. As postgraduate students attend this activity throughout their 3-year tenure, the authors perceived that critical appraisal of research papers could emerge as a tool for reinforcing the knowledge of research methodology. Hence, a questionnaire-based study was designed to find out the perceptions of PG students and teachers.

There have been studies that have laid emphasis on the procedure of conducting critical appraisal of research papers and its application into clinical practice.[6,7] However, there are no studies that have evaluated how well students are able to critically appraise a research paper. The Department of Pharmacology and Therapeutics at Seth GS Medical College has developed an evaluation method to score the PG students on this skill and this tool has been implemented for the last 5 years. Since there are no research data available on the performance of PG Pharmacology students in JC, capturing the critical appraisal activity evaluation scores of in-house PG students was chosen as another objective of the study.

MATERIALS AND METHODS

Description of the journal club activity

JC is conducted in the Department of Pharmacology and Therapeutics at Seth GS Medical College once every 2 weeks. During the JC activity, postgraduate students critically appraise published original research articles on their completeness and aptness in terms of the following: study title, rationale, objectives, study design, methodology-study population, inclusion/exclusion criteria, duration, intervention and safety/efficacy variables, randomization, blinding, statistical analysis, results, discussion, conclusion, references, and abstract. All postgraduate students attend this activity, while one of them, who receives the research paper from a faculty member 5 days before the day of JC, critically appraises the article. Other faculty members also attend these sessions and facilitate the discussions. As the student comments on various sections of the paper, the same predecided faculty member who gave the article (single assessor) evaluates the student on a total score of 100, which is split per section as follows: Introduction – 20 marks, Methodology – 20 marks, Discussion – 20 marks, Results and Conclusion – 20 marks, References – 10 marks, and Title, Abstract, and Keywords – 10 marks. However, there are no standard operating procedures to assess the performance of students at JC.
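The section-wise mark split described above can be written down as a small rubric. The sketch below encodes the maximum marks from the text, checks that they add up to 100, and totals one set of awarded marks; the awarded marks and the `total_score` helper are hypothetical and not taken from the study's score sheets.

```python
# Maximum marks per section, as listed in the description of the JC activity
MAX_MARKS = {
    "Introduction": 20,
    "Methodology": 20,
    "Discussion": 20,
    "Results and Conclusion": 20,
    "References": 10,
    "Title, Abstract, and Keywords": 10,
}

# The section maxima should account for the full score of 100
assert sum(MAX_MARKS.values()) == 100

def total_score(awarded):
    """Validate awarded marks against the rubric and return the total."""
    for section, marks in awarded.items():
        if section not in MAX_MARKS:
            raise KeyError(f"unknown section: {section}")
        if not 0 <= marks <= MAX_MARKS[section]:
            raise ValueError(f"marks out of range for {section}: {marks}")
    return sum(awarded.values())

# Hypothetical marks for one student's appraisal
awarded = {
    "Introduction": 15,
    "Methodology": 12,
    "Discussion": 14,
    "Results and Conclusion": 16,
    "References": 7,
    "Title, Abstract, and Keywords": 8,
}

print(total_score(awarded))
```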

Methodology

After seeking permission from the Institutional Ethics Committee, the study was conducted in two parts. Part I consisted of a cross-sectional questionnaire-based survey that was conducted from October 2016 to September 2017. A questionnaire to evaluate perception of critical appraisal of published papers as a tool for reinforcing research methodology was developed by the study investigators. The questionnaire consisted of 20 questions: 14 questions [refer Figure 1] graded on a 3-point Likert scale (agree, neutral, and disagree), 1 multiple choice selection question, 2 dichotomous questions, 1 semi-open-ended question, and 2 open-ended questions. Content validation for this questionnaire was carried out with the help of eight pharmacology teachers. The content validity ratio (CVR) was calculated per item, and each item in the questionnaire had a CVR of >0.75.[8] The perception questionnaire was either E-mailed or sent through WhatsApp to PG pharmacology students and teaching faculty in pharmacology departments at various medical colleges across Maharashtra. Informed consent was obtained by E-mail from all the participants.
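The formula used for the CVR is not reproduced in the text. As a minimal sketch, assuming the widely used Lawshe definition, CVR = (n_e - N/2) / (N/2), where n_e is the number of panelists rating an item essential and N is the panel size, the snippet below tabulates the values an eight-member panel can produce. The function name is hypothetical and chosen only for illustration.

```python
def content_validity_ratio(n_essential: int, n_panelists: int) -> float:
    """Lawshe-style CVR (assumed formula): (n_e - N/2) / (N/2)."""
    if n_panelists <= 0 or not 0 <= n_essential <= n_panelists:
        raise ValueError("need 0 <= n_essential <= n_panelists and n_panelists > 0")
    half = n_panelists / 2
    return (n_essential - half) / half


# Possible CVR values for a panel of eight validators.
for n_e in range(4, 9):
    print(n_e, content_validity_ratio(n_e, 8))
```

Under this assumed formula, seven of eight "essential" ratings give exactly 0.75, so a CVR strictly above 0.75 would require all eight validators to rate the item essential.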

Figure 1: Graphical representation of the percentage of students/teachers who agreed that critical appraisal of research helped them improve their knowledge on various aspects of research, perceived that faculty participation is important in this activity, and considered critical appraisal activity beneficial for students. The numbers adjacent to the bar diagrams indicate the raw number of students/faculty who agreed, while brackets indicate percentages

Part II of the study consisted of evaluating the performance of postgraduate students in critically appraising published papers. For this purpose, marks obtained by 2nd- and 3rd-year residents during JC sessions conducted over a period of 4 years from October 2013 to September 2017 were recorded and analyzed. No data on personal identifiers of the students were captured.

Statistical analysis

Marks obtained by postgraduate students in their first and last JC were compared using the Wilcoxon signed-rank test, while marks obtained by 2nd- and 3rd-year postgraduate students were compared using the Mann–Whitney test, since the data were nonparametric. These statistical analyses were performed using GraphPad Prism statistical software, San Diego, California, USA, Version 7.0d. Data obtained from the perception questionnaire were entered in a Microsoft Excel sheet and were expressed as frequencies (percentages) using descriptive statistics.
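To make the two rank-based tests concrete, here is a minimal sketch of how their test statistics are formed. It is not the GraphPad Prism procedure used in the study: it computes only the statistics (no P values), and the scores in the usage lines are hypothetical.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(first, last):
    """Return (W_plus, W_minus) for paired scores, dropping zero differences."""
    diffs = [b - a for a, b in zip(first, last) if b != a]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for d, r in zip(diffs, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(diffs, ranks) if d < 0)
    return w_plus, w_minus


def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent groups of scores."""
    n_a, n_b = len(group_a), len(group_b)
    ranks = average_ranks(list(group_a) + list(group_b))
    u_a = sum(ranks[:n_a]) - n_a * (n_a + 1) / 2
    return u_a, n_a * n_b - u_a


# Hypothetical scores, for illustration only.
print(wilcoxon_signed_rank([60, 65, 70, 72, 68], [64, 65, 75, 70, 74]))
print(mann_whitney_u([70, 72, 68], [71, 75, 74, 66]))
```

The paired test ranks the within-student differences, which suits the first-versus-last JC comparison. The Mann–Whitney statistic ranks the pooled scores, which suits the comparison of two separate year groups.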

RESULTS

Participants who answered all items of the questionnaire were considered complete responders, and only completed questionnaires were analyzed. The questionnaire was sent through E-mail to 100 students and through WhatsApp to 68 students. Of the 100 students who received the questionnaire through E-mail, 79 responded completely, 8 responded incompletely, and 13 did not respond. Of the 68 students who received the questionnaire through WhatsApp, 48 responded completely, 6 responded incompletely, and 14 did not respond. Hence, of the 168 postgraduate students who received the questionnaire, 127 responded completely (student response rate for analysis = 75.6%). The questionnaire was E-mailed to 33 faculty members and sent through WhatsApp to 25 faculty members. Of the 33 faculty members who received the questionnaire through E-mail, 19 responded completely, 5 responded incompletely, and 9 did not respond. Of the 25 faculty members who received the questionnaire through WhatsApp, 13 responded completely, 3 responded incompletely, and 9 did not respond. Hence, of a total of 58 faculty members who were contacted, 32 responded completely (faculty response rate for analysis = 55%). For Part I of the study, responses on the perception questionnaire from 127 postgraduate students and 32 postgraduate teachers were recorded and analyzed. None of the faculty who participated in the validation of the questionnaire participated in the survey. The region-wise numbers of responses (Mumbai region and rest of Maharashtra region) are shown in Table 1.
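The response rates quoted above can be rechecked from the per-channel counts. The following is a small sketch; the `Channel` class and `response_rate` function are hypothetical names introduced only for this check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """Counts for one delivery channel (E-mail or WhatsApp)."""
    complete: int
    incomplete: int
    no_response: int

    @property
    def sent(self) -> int:
        return self.complete + self.incomplete + self.no_response


def response_rate(channels) -> float:
    """Complete responders as a percentage of all questionnaires sent."""
    sent = sum(c.sent for c in channels)
    complete = sum(c.complete for c in channels)
    return 100 * complete / sent


# Counts taken from the paragraph above: (complete, incomplete, no response).
students = [Channel(79, 8, 13), Channel(48, 6, 14)]  # E-mail, WhatsApp
faculty = [Channel(19, 5, 9), Channel(13, 3, 9)]     # E-mail, WhatsApp

print(round(response_rate(students), 1))  # 75.6
print(round(response_rate(faculty), 1))   # 55.2
```

Only complete responders count toward the numerator, which matches the rule that incomplete questionnaires were excluded from analysis.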

Table 1: Region-wise distribution of responses

| Region | Students (n=127) | Faculty (n=32) |
|---|---|---|
| Mumbai colleges | 58 (45.7) | 18 (56.3) |
| Rest of Maharashtra colleges | 69 (54.3) | 14 (43.7) |

Number of responses obtained from students/faculty belonging to Mumbai colleges and rest of Maharashtra colleges. Brackets indicate percentages

As per the data obtained on the Likert scale questions, 102 (80.3%) students and 29 (90.6%) teachers agreed that critical appraisal trains the students in doing a review of literature before selecting a particular research topic. The majority of the participants, i.e., 104 (81.9%) students and 29 (90.6%) teachers, also believed that the activity increases students' knowledge regarding various experimental evaluation techniques. Moreover, 112 (88.2%) students and 27 (84.4%) faculty considered that critical appraisal activity results in improved skills of writing and understanding the methodology section of research articles in terms of inclusion/exclusion criteria, endpoints, and safety/efficacy variables. In addition, 103 (81.1%) students and 24 (75%) teachers perceived that this activity results in refinement of the student's research work, and 118 (92.9%) students and 28 (87.5%) faculty considered the critical appraisal activity to be beneficial for the students. Responses to the 14 individual Likert scale items of the questionnaire are shown in Figure 1.

With respect to the multiple choice selection question, 66 (52%) students and 16 (50%) teachers opined that faculty should select the paper, 53 (41.7%) students and 9 (28.1%) teachers stated that the papers should be selected by the presenting student himself/herself, while 8 (6.3%) students and 7 (21.9%) teachers expressed that some other student should select the paper to be presented at the JC.

The responses to dichotomous questions were as follows: the majority of the students, that is, 109 (85.8%), and 23 (71.9%) teachers perceived that a standard checklist for article review should be given to the students before critical appraisal of a journal article. Open-ended questions of the questionnaire invited suggestions from the participants regarding ways of getting trained on critical appraisal skills and of improving JC activity. Some of the suggestions given by faculty were as follows: increasing the frequency of JC activity, discussion of cited articles and new guidelines related to them, selecting all types of articles for criticism rather than only randomized controlled trials, and regular yearly exams on article criticism. Students stated that regular and frequent article criticism activity, the practice of writing a letter to the editor after criticism, active participation by peers and faculty, increasing the weightage of marks for critical appraisal of papers in university examinations (at present marks are 50 out of 400), and formal training in research criticism from the 1st year of postgraduation could improve the critical appraisal program.

In Part II of this study, performance of the students on the skill of critical appraisal of papers was evaluated. Complete data of the first and last JC scores of a total of 25 students of the department were available, and when these scores were compared, there was a statistically significant improvement in the overall scores (P = 0.04), as well as in the scores obtained in the methodology (P = 0.03) and results (P = 0.02) sections. This is shown in Table 2. Although statistically significant, the differences in scores in the methodology section, results section, and overall scores were 1.28/20, 1.28/20, and 4.36/100, respectively, amounting to 6.4%, 6.4%, and 4.36% higher scores in the last JC, which may not be considered educationally relevant (practically significant). The quantum of difference that would be considered practically significant was not decided a priori.

Table 2: Comparison of marks obtained by pharmacology residents in their first and last journal club (n=25)

| Section | First JC, mean±SD | First JC, median (IQR) | Last JC, mean±SD | Last JC, median (IQR) | Wilcoxon signed-rank test, P value |
|---|---|---|---|---|---|
| Introduction (maximum: 20 marks) | 13.48±2.52 | 14 (12-16) | 14.28±2.32 | 14 (13-16) | 0.22 |
| Methodology (maximum: 20 marks) | 13.36±3.11 | 14 (12-16) | 14.64±2.40 | 14 (14-16.5) | 0.03* |
| Results and conclusion (maximum: 20 marks) | 13.60±2.42 | 14 (12-15.5) | 14.88±2.64 | 15 (13.5-16.5) | 0.02* |
| Discussion (maximum: 20 marks) | 13.44±3.20 | 14 (11-16) | 14.16±2.78 | 14 (12.5-16) | 0.12 |
| References (maximum: 10 marks) | 7.12±1.20 | 7 (6.5-8) | 7.06±1.28 | 7 (6-8) | 0.80 |
| Title, abstract, and keywords (maximum: 10 marks) | 7.44±0.92 | 7 (7-8) | 7.78±1.12 | 8 (7-9) | 0.17 |
| Overall score (maximum: 100 marks) | 68.44±11.39 | 72 (64-76) | 72.80±11.32 | 71 (68-82.5) | 0.04* |

Marks have been represented as mean±SD. The maximum marks that can be obtained in each section have been stated as maximum. *Indicates statistically significant ( P <0.05). IQR=Interquartile range, SD=Standard deviation
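As a quick arithmetic check on the size of the significant changes, the sketch below recomputes each gain in mean score as a percentage of the maximum marks for that section, using only the means reported in the table. The function name is a hypothetical helper.

```python
# (first JC mean, last JC mean, maximum marks) for the significant rows.
table2_means = {
    "Methodology": (13.36, 14.64, 20),
    "Results and conclusion": (13.60, 14.88, 20),
    "Overall score": (68.44, 72.80, 100),
}


def gain_as_percent_of_max(first: float, last: float, max_marks: int) -> float:
    """Difference in means expressed as a percentage of the maximum marks."""
    return 100 * (last - first) / max_marks


for section, (first, last, max_marks) in table2_means.items():
    gain = last - first
    pct = gain_as_percent_of_max(first, last, max_marks)
    print(f"{section}: +{gain:.2f}/{max_marks} = {pct:.2f}% of maximum")
```

On these means, each 20-mark section gains 1.28 marks (6.4% of the maximum) and the overall score gains 4.36 marks (4.36% of the maximum).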

Scores of two groups, one consisting of 2nd-year postgraduate students (n = 44) and the other of 3rd-year postgraduate students (n = 32), were compared and revealed no statistically significant difference in overall score (P = 0.84). This is shown in Table 3. Since the difference in the overall mean scores was a meager 0.84/100 (0.84%), it cannot be considered practically significant.

Table 3: Comparison of marks obtained by 2nd- and 3rd-year pharmacology residents in the activity of critical appraisal of research articles

| Section | 2nd-year students (n=44), mean±SD | 2nd-year students, median (IQR) | 3rd-year students (n=32), mean±SD | 3rd-year students, median (IQR) | Mann-Whitney test, P value |
|---|---|---|---|---|---|
| Introduction (maximum: 20 marks) | 14.09±2.41 | 14 (13-16) | 14.28±2.14 | 14 (13-16) | 0.7527 |
| Methodology (maximum: 20 marks) | 14.30±2.90 | 14.5 (13-16) | 14.41±2.24 | 14 (13-16) | 0.8385 |
| Results and conclusion (maximum: 20 marks) | 14.09±2.44 | 14 (12.5-16) | 14.59±2.61 | 14.5 (13-16) | 0.4757 |
| Discussion (maximum: 20 marks) | 13.86±2.73 | 14 (12-16) | 14.16±2.71 | 14.5 (12.5-16) | 0.5924 |
| References (maximum: 10 marks) | 7.34±1.16 | 8 (7-8) | 7.05±1.40 | 7 (6-8) | 0.2551 |
| Title, abstract, and keywords (maximum: 10 marks) | 7.82±0.90 | 8 (7-8.5) | 7.83±1.11 | 8 (7-8.5) | 0.9642 |
| Overall score (maximum: 100 marks) | 71.50±10.71 | 71.5 (66.5-79.5) | 72.34±10.85 | 73 (66-79.5) | 0.8404 |

Marks have been represented as mean±SD. The maximum marks that can be obtained in each section have been stated as maximum. P <0.05 was considered to be statistically significant. IQR=Interquartile range, SD=Standard deviation

DISCUSSION

The present study gauged the perception of pharmacology postgraduate students and teachers toward the use of critical appraisal activity as a reinforcing tool for research methodology. Both students and faculty (>50%) believed that critical appraisal activity increases students' knowledge of principles of ethics, experimental evaluation techniques, CONSORT guidelines, statistical analysis, the concept of conflict of interest, and current trends and recent advances in Pharmacology, trains them in doing a review of literature, and improves skills in protocol writing and referencing. In the study conducted by Crank-Patton et al., a survey of 278 general surgery program directors was carried out and more than 50% indicated that JC was important to their training program.[9]

The grading template used in Part II of the study was based on the IMRaD structure. Hence, equal weightage was given to the Introduction, Methodology, Results, and Discussion sections and lesser weightage was given to the references and title, abstract, and keywords sections.[10] While evaluating the scores obtained by 25 students in their first and last JC, it was seen that there was a statistically significant improvement in the overall scores of the students in their last JC. However, the meager improvement in scores cannot be considered educationally relevant, as the authors expected the students to score >90% for the improvement to be considered educationally impactful. The above findings suggest that even though participation in the JC activity led to an increase in students' performance (~4%), the increment was not as expected. In addition, the students did not show an excellent performance (>90%), with average scores being around 72% even in the last JC. This can probably be explained by the fact that students perform this activity in a routine setting and not in an examination setting. Unlike the scenario in an examination, students were aware that even if they performed at a mediocre level, there would be no repercussions.

A separate comparison of scores obtained by 44 students in their 2nd year and 32 students in their 3rd year of postgraduation was also done. The number of student evaluation sheets reviewed for this analysis was greater than the number reviewed to compare first and last JC scores. This can be explained by the fact that many students were still in their 2nd year when this analysis was done and the score data for their last JC, which would take place in their 3rd year, were not available. In addition, a few students were asked to present at JC multiple times during the 2nd/3rd year of their postgraduation.

While evaluating the critical appraisal scores obtained by 2nd- and 3rd-year postgraduate students, it was found that although the 3rd-year students had a mean overall score greater than the 2nd-year students, this difference was not statistically significant. During the 1st year of the MD Pharmacology course, students at the study center attend JC once every 2 weeks. Even though the 1st-year students do not themselves present in JC, they listen to and observe the criticism points stated by senior peers presenting at the JC, and thereby acquire a substantial amount of the knowledge required to critically appraise papers. By the time they become 2nd-year students, they are already well versed with the program, and this could have led to similar overall mean scores between the 2nd-year students (71.50 ± 10.71) and 3rd-year students (72.34 ± 10.85). This finding suggests that attentive listening is as important as active participation in the JC. Moreover, although students are well acquainted with the process of criticism when they are in their 3rd year, there is certainly scope for improvement in terms of the mean overall scores.

Similar results were obtained in a study conducted by Stern et al., in which 62 students in the internal medicine program at the New England Medical Center were asked to respond to a questionnaire, evaluate a sample article, and complete a self-assessment of competence in evaluation of research. Twenty-eight residents returned the questionnaire, and the composite score for the residents' objective assessment was not significantly correlated with the postgraduate year or self-assessed critical appraisal skill.[11]

Article criticism activity provides the students with practical experience of techniques taught in the research methodology workshop. However, this should be supplemented with activities that assess improvement in designing and presenting studies, such as protocol and paper writing. Thus, critical appraisal plays a significant role in reinforcing good research practices among the new generation of physicians. Moreover, critical appraisal is an integral part of PG assessment, and although the current format of conducting JCs did not show a clinically meaningful improvement, the authors believe that it is important to continue this activity with certain modifications suggested by students who participated in this study. Students suggested that an increase in the frequency of critical appraisal activity, accompanied by active participation by peers and faculty, could help improve this activity. This should be brought to the attention of the faculty, as students seem to be interested in learning. Critical appraisal should be a two-way teaching–learning process between the students and faculty and not merely a requirement for satisfying the students' eligibility criteria for postgraduate university examinations. This activity is not only for the trainee doctors but is also a part of the overall faculty development program.[12]

In the present era, JCs have been used as a tool not only to teach critical appraisal skills but also to teach other necessary aspects such as research design, medical statistics, clinical epidemiology, and clinical decision-making.[13, 14] A study conducted by Khan in 2013 suggested that the success of a JC program can be ensured if institutes define a JC objective for developing the learning capability of students and also cultivate more skilled faculty.[15] A good JC is believed to facilitate relevant, meaningful scientific discussion and evaluation of research updates that will eventually benefit patient care.[12]

Although there is a lot of literature emphasizing the importance of JC, there is a lack of studies that have evaluated the outcome of such activity. One such study conducted by Ibrahim et al. assessed the importance of critical appraisal as an activity in surgical trainees in Nigeria. They reported that 92.42% of trainees considered the activity to be important or very important and 48% of trainees stated that the activity helped in improving literature search.[16]

This study is unique since it is the first of its kind to evaluate how well students are able to critically appraise a research paper. Moreover, the study has taken into consideration the opinions of both students and faculty, unlike the previous literature, which has emphasized only students' perception. A limitation of this study is that the sample size for faculty was smaller than that for students, as it was not possible to convince distant faculty in other cities to fill in the survey. Besides, there may be variation in the manner in which the critical appraisal activity is conducted in pharmacology departments across the various medical colleges in the country. Another limitation of this study was that a single assessor graded a single student during one particular JC. Nevertheless, each student presented at multiple JCs and thereby came across multiple assessors. Since the articles addressed at different JCs were disparate, interobserver variability was not taken into account in this study. Furthermore, the authors did not make an a priori decision on the quantum of increase in scores that would be considered educationally meaningful.

CONCLUSION

Pharmacology students and teachers acknowledge the role of critical appraisal in improving the ability to understand the crucial concepts of research methodology and research conduct. In our institute, participation in the JC activity led to an improvement in the skill of critical appraisal of published research articles among the pharmacology postgraduate students. However, this improvement was not educationally relevant. The scores obtained by final-year postgraduate students in this activity were nearly 72%, indicating that there is still scope for improvement in this skill.

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.

Acknowledgments

We would like to acknowledge the support rendered by the entire Department of Pharmacology and Therapeutics at Seth GS Medical College.

Research Paper: A step-by-step guide: 7. Evaluating Sources

Evaluation Criteria

It's very important to evaluate the materials you find to make sure they are appropriate for a research paper. It's not enough that the information is relevant; it must also be credible. You will want to find more than enough resources, so that you can pick and choose the best for your paper. Here are some helpful criteria you can apply to the information you find:

Currency:

- When was the information published?

- Is the source out-of-date for the topic?

- Are there new discoveries or important events since the publication date?

Relevancy:

- How is the information related to your argument?

- Is the information too advanced or too simple?

- Is the audience focus appropriate for a research paper?

- Are there better sources elsewhere?

Authority:

- Who is the author?

- What are the author's credentials in the related field?

- Is the publisher well-known in the field?

- Did the information go through the peer-review process or some kind of fact-checking?

Accuracy:

- Can the information be verified?

- Are sources cited?

- Is the information factual or opinion based?

- Is the information biased?

- Is the information free of grammatical or spelling errors?

Purpose:

- What is the motive of providing the information: to inform? to sell? to persuade? to entertain?

- Does the author or publisher make their intentions clear? Who is the intended audience?
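One way to apply these criteria consistently is to record a yes/no judgment per question and then list the criteria that still raise concerns. The sketch below is only an illustration: the class and method names are hypothetical, the questions about motive and intended audience are grouped under a "purpose" label as an assumption, and a criterion with no recorded answers is treated as an open concern.

```python
from dataclasses import dataclass, field

# Criterion labels; "purpose" is an assumed label for the motive questions.
CRITERIA = ("currency", "relevancy", "authority", "accuracy", "purpose")


@dataclass
class SourceReview:
    title: str
    # criterion -> list of booleans, True meaning "this check raised no concern"
    answers: dict = field(default_factory=dict)

    def record(self, criterion: str, passed: bool) -> None:
        if criterion not in CRITERIA:
            raise ValueError(f"unknown criterion: {criterion}")
        self.answers.setdefault(criterion, []).append(passed)

    def concerns(self) -> list:
        """Criteria with a failed check, or with no checks recorded yet."""
        return [
            c for c in CRITERIA
            if not self.answers.get(c) or not all(self.answers[c])
        ]


# Hypothetical review of a single source.
review = SourceReview("Example blog post (hypothetical)")
review.record("currency", True)    # published recently enough for the topic
review.record("authority", False)  # author credentials could not be found
review.record("accuracy", True)    # claims are cited and verifiable
print(review.concerns())  # ['relevancy', 'authority', 'purpose']
```

A simple list of open concerns is used instead of a numeric score because the criteria are not equally important for every topic.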

Evaluating Web Sources

Most web pages are not fact-checked or anything like that, so it's especially important to evaluate information you find on the web. Many articles on websites are fine for information, and many others are distorted or made up. Check out our media evaluation guide for tips on evaluating what you see on social media, news sites, blogs, and so on.

This three-part video series, in which university students, historians, and pro fact-checkers go head-to-head in checking out online information, is also helpful.

- Last Updated: Apr 18, 2023 12:12 PM

- URL: https://butte.libguides.com/ResearchPaper

Useful Sources

- How to Critically Evaluate the Quality of a Research Article?

- How to Evaluate Journal Articles

Evaluating Articles

Not all articles are created equal. Evaluating sources for relevancy and usefulness is one of the most important steps in research. This helps researchers in the STEM disciplines to gather the information they need. There are several tools to use when evaluating an article.

- Purposes can include persuasion, informing, or proving something to the reader. Depending on the topic of your paper, you will want to evaluate the article's purpose to support your own position.

Publication

- Most sources for college papers should come from scholarly journals. Scholarly journals are journals that are peer-reviewed before publication. This means that experts in the field read and approve the material in the article before it is published.

- The date of publication is especially relevant to those in the STEM disciplines. Research in science, technology, engineering, and mathematics moves very quickly, so an article that was published recently will be more useful.

- Many universities operate their own press, Indiana University included! Books and articles that are published by a reputable institution are generally accepted as valuable information. Other notable presses include Harvard University Press and The MIT Press.

- Good sources of information come from experts in the field. This is called authority. Many times these individuals will be employed at research institutions such as universities, labs, or founded associations.

- Many times, authors with authority have written more than one article about a topic in their field. Not only does this support their reputation, but their other work can also be a great source of further articles.

- Citation is a great indicator of the effectiveness of the article. If other experts are citing the article, it is a good sign this source is trusted and relevant.

Bibliography

- Scholarly works will always contain a bibliography of the sources used. Trusted articles will have sources that are also scholarly in nature and authored by individuals with authority in their field.

- Much like evaluating the publication of an article, the bibliography of the source should also contain sources that are up-to-date.

- Last Updated: Sep 12, 2024 2:44 PM

- URL: https://guides.libraries.indiana.edu/STEM

A simplified approach to critically appraising research evidence

Affiliation.

- 1 School of Health and Life Sciences, Teesside University, Middlesbrough, England.

- PMID: 33660465

- DOI: 10.7748/nr.2021.e1760

Background: Evidence-based practice is embedded in all aspects of nursing and care. Understanding research evidence and being able to identify the strengths, weaknesses and limitations of published primary research is an essential skill of the evidence-based practitioner. However, it can be daunting and seem overly complex.

Aim: To provide a single framework that researchers can use when reading, understanding and critically assessing published research.

Discussion: To make sense of published research papers, it is helpful to understand some key concepts and how they relate to either quantitative or qualitative designs. Internal and external validity, reliability and trustworthiness are discussed. An illustration of how to apply these concepts in a practical way using a standardised framework to systematically assess a paper is provided.

Conclusion: The ability to understand and evaluate research builds strong evidence-based practitioners, who are essential to nursing practice.

Implications for practice: This framework should help readers to identify the strengths, potential weaknesses and limitations of a paper to judge its quality and potential usefulness.

Keywords: literature review; qualitative research; quantitative research; research; systematic review.

©2021 RCN Publishing Company Ltd. All rights reserved. Not to be copied, transmitted or recorded in any way, in whole or part, without prior permission of the publishers.

Conflict of interest statement

None declared

Critically Evaluating Research

Some research reports or assessments will require you to critically evaluate a journal article or piece of research. Below is a guide with examples of how to critically evaluate research and how to communicate your ideas in writing.

To develop the skill of critical evaluation, read research articles in psychology with an open mind and read actively. Ask questions as you go and see if the answers are provided. Initially skim through the article to gain an overview of the problem, the design, methods, and conclusions. Then read for details and consider the questions provided below for each section of a journal article.

Title

- Did the title describe the study?

- Did the key words of the title serve as key elements of the article?

- Was the title concise, i.e., free of distracting or extraneous phrases?

Abstract

- Was the abstract concise and to the point?

- Did the abstract summarise the study’s purpose/research problem, the independent and dependent variables under study, methods, main findings, and conclusions?

- Did the abstract provide you with sufficient information to determine what the study is about and whether you would be interested in reading the entire article?

Introduction

Introduction

- Was the research problem clearly identified?

- Is the problem significant enough to warrant the study that was conducted?

- Did the authors present an appropriate theoretical rationale for the study?

- Is the literature review informative and comprehensive or are there gaps?

- Are the variables adequately explained and operationalised?

- Are hypotheses and research questions clearly stated? Are they directional? Do the authors’ hypotheses and/or research questions seem logical in light of the conceptual framework and research problem?

- Overall, does the literature review lead logically into the Method section?

Method

- Is the sample clearly described in terms of size, relevant characteristics (gender, age, SES, etc.), selection and assignment procedures, and whether any inducements were used to solicit subjects (payment, subject credit, free therapy, etc.)?

- What population do the subjects represent (external validity)?

- Are there sufficient subjects to produce adequate power (statistical validity)?

- Have the variables and measurement techniques been clearly operationalised?

- Do the measures/instruments seem appropriate as measures of the variables under study (construct validity)?

- Have the authors included sufficient information about the psychometric properties (e.g., reliability and validity) of the instruments?

- Are the materials used in conducting the study or in collecting data clearly described?

- Are the study’s scientific procedures thoroughly described in chronological order?

- Is the design of the study identified (or made evident)?

- Do the design and procedures seem appropriate in light of the research problem, conceptual framework, and research questions/hypotheses?

- Are there other factors that might explain the differences between groups (internal validity)?

- Were subjects randomly assigned to groups so there was no systematic bias in favour of one group? Was there a differential drop-out rate from groups so that bias was introduced (internal validity and attrition)?

- Were all the necessary control groups used? Were participants in each group treated identically except for the administration of the independent variable?

- Were steps taken to prevent subject bias and/or experimenter bias, e.g., blind or double-blind procedures?

- Were steps taken to control for other possible confounds such as regression to the mean, history effects, order effects, etc. (internal validity)?

- Were ethical considerations adhered to, e.g., debriefing, anonymity, informed consent, voluntary participation?

- Overall, does the method section provide sufficient information to replicate the study?

Results

- Are the findings complete, clearly presented, comprehensible, and well organised?

- Are data coding and analysis appropriate in light of the study’s design and hypotheses? Are the statistics reported correctly and fully, e.g., are degrees of freedom and p values given?

- Have the assumptions of the statistical analyses been met, e.g., does one group have a very different variance from the others?

- Are salient results connected directly to hypotheses? Are there superfluous results presented that are not relevant to the hypotheses or research question?

- Are tables and figures clearly labelled? Well-organised? Necessary (non-duplicative of text)?

- If a significant result is obtained, consider effect size. Is the finding meaningful? If a non-significant result is found, could low power be an issue? Were there sufficient levels of the IV?

- If necessary, have appropriate post-hoc analyses been performed? Were any transformations performed; if so, were there valid reasons? Were data collapsed over any IVs; if so, were there valid reasons? If any data were eliminated, were valid reasons given?
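
Two of the Results questions above (whether a significant finding is meaningful, and whether one group has a very different variance from the others) can be checked with simple arithmetic when a paper reports raw scores or group summaries. The following is a minimal Python sketch, not a prescribed method: the function names `cohens_d` and `variance_ratio` and the scores are hypothetical, invented for illustration. It assumes two independent groups and uses the pooled standard deviation for the effect size.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Standardised mean difference, using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


def variance_ratio(group_a, group_b):
    """Larger sample variance divided by the smaller (1.0 means equal spread)."""
    var_a, var_b = variance(group_a), variance(group_b)
    return max(var_a, var_b) / min(var_a, var_b)


# Hypothetical scores, invented purely for illustration.
treatment = [14, 15, 15, 16, 17, 18, 19]
control = [11, 12, 13, 13, 14, 15, 16]

d = cohens_d(treatment, control)
ratio = variance_ratio(treatment, control)
print(f"Cohen's d = {d:.2f}, variance ratio = {ratio:.2f}")
```

As a rough convention, values of d around 0.2, 0.5 and 0.8 are often described as small, medium and large, but what counts as meaningful depends on the research area. A variance ratio far from 1 suggests checking whether the authors addressed the homogeneity-of-variance assumption.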

Discussion and Conclusion

- Are findings adequately interpreted and discussed in terms of the stated research problem, conceptual framework, and hypotheses?

- Is the interpretation adequate, i.e., does it go too far given what was actually done, or not far enough? Are non-significant findings interpreted inappropriately?

- Is the discussion biased? Are the limitations of the study delineated?

- Are implications for future research and/or practical application identified?

- Are the overall conclusions warranted by the data and any limitations in the study? Are the conclusions restricted to the population under study or are they generalised too widely?

References

- Is the reference list sufficiently specific to the topic under investigation and current?

- Are citations used appropriately in the text?

General Evaluation

- Is the article objective, well written and organised?

- Does the information provided allow you to replicate the study in all its details?

- Was the study worth doing? Does the study provide an answer to a practical or important problem? Does it have theoretical importance? Does it represent a methodological or technical advance? Does it demonstrate a previously undocumented phenomenon? Does it explore the conditions under which a phenomenon occurs?

How to turn your critical evaluation into writing

Example from a journal article.


1 Important points to consider when critically evaluating published research papers

Simple review articles (also referred to as ‘narrative’ or ‘selective’ reviews), systematic reviews and meta-analyses provide rapid overviews and ‘snapshots’ of progress made within a field, summarising a given topic or research area. They can serve as useful guides, or as current and comprehensive ‘sources’ of information, and can act as a point of reference to relevant primary research studies within a given scientific area. Narrative or systematic reviews are often used as a first step towards a more detailed investigation of a topic or a specific enquiry (a hypothesis or research question), or to establish critical awareness of a rapidly moving field (you will be required to demonstrate this as part of an assignment, an essay or a dissertation at postgraduate level).

The majority of primary ‘empirical’ research papers follow essentially the same structure (abbreviated here as IMRAD): an Introduction, followed by the Methods, then the Results (which include figures and tables showing the data described in the paper), and a Discussion. The paper typically ends with a Conclusion, followed by References and Acknowledgements sections.

The Title of the paper provides a concise first impression. The Abstract follows the basic structure of the extended article and provides an ‘accessible’ and concise summary of the aims, methods, results and conclusions, presenting the purpose, scope and major findings. The Introduction provides useful background information and context, and typically outlines the aims and objectives of the study. However, reading the abstract alone is not a substitute for critically reading the whole article. To gain a good understanding of a research study and to be able to evaluate it critically, it is necessary to read on.

While most research papers follow the above format, variations do exist. For example, the results and discussion sections may be combined. In some journals the materials and methods may follow the discussion, and in two of the most widely read journals, Science and Nature, the format does vary from the above due to restrictions on the length of articles. In addition, there may be supporting documents that accompany a paper, including supplementary materials such as supporting data, tables, figures, videos and so on. There may also be commentaries or editorials associated with a topical research paper, which provide an overview or critique of the study being presented.

Box 1 Key questions to ask when appraising a research paper

- Is the study’s research question relevant?

- Does the study add anything new to current knowledge and understanding?

- Does the study test a stated hypothesis?

- Is the design of the study appropriate to the research question?

- Do the study methods address key potential sources of bias?

- Were suitable ‘controls’ included in the study?

- Were the statistical analyses appropriate and applied correctly?

- Is there a clear statement of findings?

- Does the data support the authors’ conclusions?

- Are there any conflicts of interest or ethical concerns?

There are various strategies used in reading a scientific research paper, and one of these is to start with the title and the abstract, then look at the figures and tables, and move on to the introduction, before turning to the results and discussion, and finally, interrogating the methods.

Another strategy (outlined below) is to begin with the abstract and then the discussion, take a look at the methods, and then the results section (including any relevant tables and figures), before moving on to look more closely at the discussion and, finally, the conclusion. You should choose a strategy that works best for you. However, asking the ‘right’ questions is a central feature of critical appraisal, as with any enquiry, so where should you begin? Here are some critical questions to consider when evaluating a research paper.

Look at the Abstract and then the Discussion: Are these accessible and of general relevance or are they detailed, with far-reaching conclusions? Is it clear why the study was undertaken? Why are the conclusions important? Does the study add anything new to current knowledge and understanding? The reasons why a particular study design or statistical method was chosen should also be clear from reading a research paper. What is the research question being asked? Does the study test a stated hypothesis? Is the design of the study appropriate to the research question? Have the authors considered the limitations of their study and have they discussed these in context?

Take a look at the Methods: Were there any practical difficulties that could have compromised the study or its implementation? Were these considered in the protocol? Were there any missing values and, if so, was the number of missing values too large to permit meaningful analysis? Was the number of samples (cases or participants) too small to establish meaningful significance? Do the study methods address key potential sources of bias? Were suitable ‘controls’ included in the study? If controls are missing or not appropriate to the study design, we cannot be confident that the results really show what is happening in an experiment. Were the statistical analyses appropriate and applied correctly? Do the authors point out the limitations of methods or tests used? Were the methods referenced and described in sufficient detail for others to repeat or extend the study?
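
The questions about sample size and missing values can be made concrete with a quick calculation. The sketch below is illustrative only and rests on stated assumptions: it treats the study as a two-sided comparison of two equal-sized independent groups, approximates power with the normal distribution (so small-sample figures are slightly optimistic), and uses hypothetical function names (`approx_power`, `missing_fraction`) and invented data.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-group comparison of means.

    Normal approximation that ignores the negligible opposite tail,
    so treat the result as a rough guide rather than an exact figure.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    noncentrality = effect_size * sqrt(n_per_group / 2)
    return z.cdf(noncentrality - z_crit)


def missing_fraction(values):
    """Share of entries recorded as None (i.e. missing)."""
    return sum(v is None for v in values) / len(values)


# Assumed standardised effect size of 0.5, with two candidate sample sizes.
for n in (20, 64):
    print(f"n per group = {n}: approximate power = {approx_power(0.5, n):.2f}")

# Hypothetical questionnaire responses; None marks a missing value.
responses = [4, None, 5, 3, None, 4, 5, 2]
print(f"missing: {missing_fraction(responses):.0%}")
```

Under these assumptions, a study with 20 participants per group would detect a medium-sized effect well under half the time, whereas roughly 64 per group brings power close to the commonly used 80% target. A non-significant result from the smaller study would therefore say little about whether an effect exists.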

Take a look at the Results section and relevant tables and figures: Is there a clear statement of findings? Were the results expected? Do they make sense? What data supports them? Do the tables and figures clearly describe the data (highlighting trends etc.)? Try to distinguish between what the data show and what the authors say they show (i.e. their interpretation).

Moving on to look in greater depth at the Discussion and Conclusion: Are the results discussed in relation to similar (previous) studies? Do the authors indulge in excessive speculation? Are limitations of the study adequately addressed? Were the objectives of the study met and the hypothesis supported or refuted (and is a clear explanation provided)? Does the data support the authors’ conclusions? Maybe there is only one experiment to support a point. More often, several different experiments or approaches combine to support a particular conclusion. A rule of thumb here is that if multiple approaches and multiple lines of evidence from different directions are presented, and all point to the same conclusion, then the conclusions are more credible. But do question all assumptions. Identify any implicit or hidden assumptions that the authors may have used when interpreting their data. Be wary of data that is mixed up with interpretation and speculation! Remember, just because it is published, does not mean that it is right.

Other points you should consider when evaluating a research paper: Are there any financial, ethical or other conflicts of interest associated with the study, its authors and sponsors? Are there ethical concerns with the study itself? Looking at the references, consider whether the authors have preferentially (i.e. needlessly) cited their own previous publications, and whether the list of references is recent (ensuring that the analysis is up to date). Finally, from a practical perspective, you should move beyond the text of a research paper: talk to your peers about it, and consult available commentaries, online links to references and other external sources to help clarify any aspects you don’t understand.