13. Study design and choosing a statistical test

Sample size.

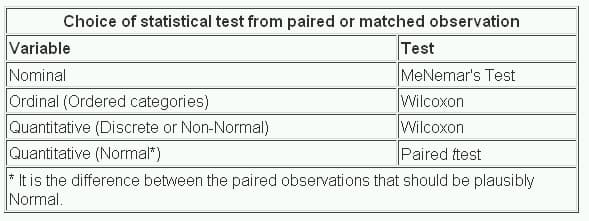

Choice of test

3.1 Study selection

3.2 Tools specific for prevalence studies

| Instrument (name of the instrument or author, year) | Context of development (clinical condition) | Process of development | Structure | Summary and reporting of results |

|---|---|---|---|---|

| Al-Jader et al., 2002 [ ] | Genetic disorders. | First version of the tool; pilot test with multidisciplinary assessors to evaluate reproducibility and feasibility; final version of the tool and test for inter-rater agreement. | Seven questions, with different answer options; each answer option with an associated score. | Maximum score: 100 points. No cutoff point defined. |

| Boyle, 1998 [ ] | Psychiatric disorders on general population settings. | NR | Ten questions, split in three sections. No predefined answer options. | No overall summary. Descriptive reporting of results. |

| Giannakopoulos et al., 2012 [ ] | Any clinical condition. | Search for criteria to define a high-quality study of prevalence; development of the first version; pilot tests to determine inter-rater agreement and reliability; the final version of the tool. | Eleven questions, split in three sections, plus a question about ethics. Each question with two or three answer options; each answer option with an associated score. | Maximum score: 19 points. Studies are classified in accordance with their total score as poor (0–4), moderate (5–9), good (10–14), or outstanding (15–19). |

| Hoy et al., 2012 [ ] | Any clinical condition. | Search for instruments; definition of important criteria to be assessed and creation of the draft tool; pilot tests with professionals; assessment of inter-rater agreement, ease of use, timeliness; the final version of the tool. | Ten questions with two standard answer options ( ). | Question for overall appraisal with three answer options ( ), based on rater's judgment. |

| Loney et al., 1998 [ ] | Any clinical condition. | Review of important criteria; development of the tool; pilot test in prevalence studies of dementia. | Eight statements, one point for each criterion achieved. | Maximum score: eight points. No cutoff point defined. |

| MORE, 2010 [ ] | Chronic conditions. | Systematic search for instruments to assess prevalence and incidence studies; selection of important criteria; development of the first version; pilot test with experts to assess face validity, inter-rater agreement, and reliability; the final version of the tool. | Thirty-two questions, with different answer options; each answer option is classified as ‘minor flaw’, ‘major flaw’, or ‘poor reporting’. | No overall summary. Descriptive reporting of results. |

| Silva et al., 2001 [ ] | Risk factors of chronic diseases. | NR | Nineteen questions, split in three sections. Two or three answer options, with an associated score. | Maximum score: 100 points. No cutoff point defined. |

| The Joanna Briggs Institute Prevalence Critical Appraisal Tool, 2014 [ , ] | Any clinical condition. | Systematic search for instruments to assess prevalence studies; review and selection of applicable criteria; development of the draft tool; pilot tests with professionals to assess face validity, applicability, acceptability, timeliness, and ease of use; the final version of the tool. | Nine questions with four standard answer options ( / ). | Question for overall appraisal with three answer options ( ), based on rater's judgment. |
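The score bands reported in the table for the 19-point instrument (poor 0–4, moderate 5–9, good 10–14, outstanding 15–19) can be written as a small lookup. This is only an illustrative sketch: the function name `classify_total_score` is hypothetical and is not part of any published tool.

```python
def classify_total_score(score: int) -> str:
    """Map a total score (0-19) to the quality band listed in the table.

    Hypothetical helper for illustration; the bands are copied from the
    'Summary and reporting of results' column of the 19-point instrument.
    """
    if not 0 <= score <= 19:
        raise ValueError("total score must be between 0 and 19")
    if score <= 4:
        return "poor"
    if score <= 9:
        return "moderate"
    if score <= 14:
        return "good"
    return "outstanding"
```

Instruments in the table that define no cutoff point (for example those with a 100-point maximum) cannot be banded this way; their totals can only be reported as raw scores.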


| Instrument (name of the instrument or author, year) | Domain: Population and setting | Domain: Condition measurement | Domain: Statistics | Domain: Other |

|---|---|---|---|---|

| Al-Jader et al., 2002 [ ] | Representative sample, ethnic characteristics of population source, appropriate size of population source | – | Precision of estimates | Description of condition of interest, reporting of year of conduction of studies, reporting of size of population source |

| Boyle, 1998 [ ] | Representative sample, appropriate sampling | Reliable and valid measurement of condition | Precision of estimates, data analysis considering sampling | Description of target population, standard data collection |

| Giannakopoulos et al., 2012 [ ] | Appropriate sampling and response rate | Reliable, standard, and valid measurement of condition | Precision of estimates, data analysis considering response rate and special features | Description of target population, ethics |

| Hoy et al., 2012 [ ] | Representative sample, appropriate sampling, and appropriate response rate | Appropriate definition of condition, reliable, standard, and valid measurement of condition, and appropriate length of prevalence period | Appropriate numerator and denominator parameters | Appropriate data collection |

| Loney et al., 1998 [ ] | Appropriate sampling and appropriate response rate | Appropriate, standard, and unbiased measurement of condition | Appropriate sample size, precision of estimates | Appropriate study design, description of participants, setting, and nonresponders |

| MORE, 2010 [ ] | Appropriate sampling and appropriate response rate | Appropriate, reliable, standard, and valid measurement of condition, assessment of disease severity and frequency of symptoms, type of prevalence estimate (point or period), and appropriate length of prevalence period | Precision of estimates, appropriate exclusion from analysis, data analysis considering sampling, subgroup analysis, and adjustment of estimates | Reporting of study design, description of study objectives, reporting of inclusion flowchart, description and role of funding, reporting of conflict of interest, and ethics |

| Silva et al., 2001 [ ] | Appropriate sample source and appropriate sampling | Appropriate definition of condition, standard and valid measurement of condition | Precision of estimates, subgroup analysis, data analysis considering sampling | Description of study objectives and sampling frame, quality control of data, applicability and generalizability of results |

| The Joanna Briggs Institute Prevalence Critical Appraisal Tool, 2014 [ , ] | Representative sample, appropriate sampling, and appropriate response rate | Reliable, standard, and valid measurement of condition | Appropriate sample size, appropriate statistical analysis, data analysis considering response rate | Description of participants and setting, objective criteria for subgroup definitions |

3.3 Tools adapted for prevalence studies

| Population and setting ( = 12) | Condition measurement ( = 16) | Statistics ( = 14) |

|---|---|---|

| (seven tools) (one tool) (one tool) (two tools) (one tool) (one tool) | (one tool) (one tool) (two tools) (two tools) (one tool) (one tool) (one tool) | (10 tools) (one tool) (three tools) (two tools) |

| Manuscript writing and reporting ( = 48) | Study protocol and methods ( = 12) | Nonclassified ( = 17) |

|---|---|---|

| (one tool) (one tool) (one tool) (one tool) (one tool) (one tool) (one tool) (eight tools) (three tools) (seven tools) (three tools) (10 tools) (three tools) (two tools) (one tool) (two tools) (one tool) (two tools) (two tools) (one tool) (three tools) (one tool) (one tool) (two tools) (five tools) (one tool) (three tools) (one tool) (two tools) (10 tools) (one tool) (one tool) (two tools) | (two tools) (one tool) (one tool) (two tools) (three tools) (one tool) (one tool) | (three tools) (one tool) (one tool) (one tool) (three tools) (one tool) (two tools) (one tool) (one tool) (two tools) (one tool) (three tools) (one tool) |

4 Discussion


Journal of Clinical Epidemiology

Original article: Quality assessment of prevalence studies: a systematic review


- Published: 26 April 2020

How are systematic reviews of prevalence conducted? A methodological study

Celina Borges Migliavaca, Cinara Stein, Verônica Colpani, Timothy Hugh Barker, Zachary Munn & Maicon Falavigna

on behalf of the Prevalence Estimates Reviews – Systematic Review Methodology Group (PERSyst)

BMC Medical Research Methodology volume 20, Article number: 96 (2020)


There is a notable lack of methodological and reporting guidance for systematic reviews of prevalence data. This information void has the potential to result in reviews that are inconsistent and inadequate to inform healthcare policy and decision making. The aim of this meta-epidemiological study is to describe the methodology of recently published prevalence systematic reviews.

We searched MEDLINE (via PubMed) from February 2017 to February 2018 for systematic reviews of prevalence studies. We included systematic reviews assessing the prevalence of any clinical condition using patients as the unit of measurement and we summarized data related to reporting and methodology of the reviews.

A total of 235 systematic reviews of prevalence were analyzed. The median number of authors was 5 (interquartile range [IQR] 4–7), the median number of databases searched was 4 (IQR 3–6) and the median number of studies included in each review was 24 (IQR 15–41.5). Search strategies were presented for 68% of reviews. Forty-five percent of reviews received external funding, and 24% did not provide funding information. Twenty-three percent of included reviews had published or registered the systematic review protocol. Reporting guidelines were used in 72% of reviews. The quality of included studies was assessed in 80% of reviews. Nine reviews assessed the overall quality of evidence (4 using GRADE). Meta-analysis was conducted in 65% of reviews; 1% used Bayesian methods. Random effects meta-analysis was used in 94% of reviews; among them, 75% did not report the variance estimator used. Among the reviews with meta-analysis, 70% did not report how data were transformed; 59% conducted subgroup analysis, 38% conducted meta-regression and 2% estimated prediction intervals; I² was estimated in 95% of analyses. Publication bias was examined in 48%. The most common software used was STATA (55%).

Conclusions

Our results indicate that there are significant inconsistencies regarding how these reviews are conducted. Many of these differences arose in the assessment of methodological quality and the formal synthesis of comparable data. This variability indicates the need for clearer reporting standards and consensus on methodological guidance for systematic reviews of prevalence data.


Prevalence, the proportion of a population currently suffering from a disease or particular condition of interest, is an important metric [ 1 ] that allows researchers to assess disease burden, that is, who among the population is experiencing a certain disease at a specific point in time; it is typically measured using a cross-sectional study design [ 2 ]. The synthesis of this information in a rigorously conducted and transparently reported systematic review has significant potential to help social and healthcare professionals, policy makers and consumers manage and plan for this disease burden [ 3 ].

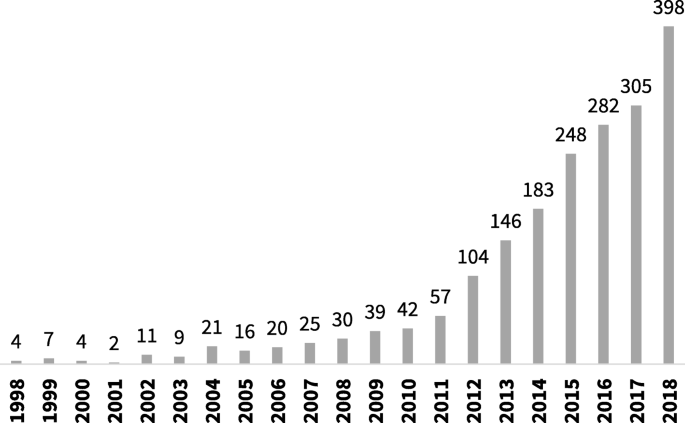

The number of systematic reviews of prevalence data has increased steadily over the last decade [ 4 ]. As can be seen in Fig. 1 , a search of PubMed, conducted in October 2019 using the terms “systematic review” and “prevalence” in the title, identified a more than ten-fold increase in the number of reviews published from 2007 to 2017.

Fig. 1 Number of systematic reviews of prevalence indexed in PubMed between 1998 and 2018

Despite this increased interest in the scientific community in conducting systematic reviews of prevalence data, there remains little discourse on how these reviews should be conducted and reported. Although methodological guidance from the Joanna Briggs Institute (JBI) exists for this review type [ 5 ], there currently appears to be little other discussion on how these reviews should be prepared, performed (including when and how results can be pooled using meta-analytical methods) and reported. This is an important consideration, as standards of reporting and conduct for traditional systematic reviews (i.e. systematic reviews of interventions) [ 6 ] are commonplace, and even endorsed by journal editors. Although such standards and guidelines could be adapted for systematic reviews of prevalence, they do not address issues specific to this type of study, and have not been as readily adopted. This is despite general acceptance that different types of evidence require different systematic review approaches [ 6 , 7 , 8 ].

This lack of accepted, readily adopted and easy to implement methodological and reporting guidance for systematic reviews of prevalence data has the potential to result in inconsistent, varied, inadequate and potentially biased research conduct. Due to the particular importance of this kind of data in enabling health researchers and policy makers to quantify disease amongst populations, such research conducted without appropriate guidance is likely to have far-reaching and complicated consequences for the wider public community.

As such, the objective of this research project was to conduct a meta-epidemiological review of a sample of systematic reviews that were published in peer-reviewed journals and addressed a question regarding the prevalence of a certain disease, symptom or condition. This project allowed us to investigate how these reviews are conducted and to provide an overview of the methods used by systematic review authors asking a question of prevalence. The results of this project can potentially inform the development of future guidance for this review type.

Search strategy

To retrieve potentially relevant reviews, we searched MEDLINE (via PubMed) using the terms ‘systematic review’ and ‘prevalence’ in the title ( prevalence [TI] AND “systematic review”[TI] AND (“2017/02/01”[PDAT]: “2018/02/01”[PDAT]) ). The search was limited to studies published between February 1st 2017 and February 1st 2018.
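As an illustration of how the title-restricted search above could be reproduced programmatically, the sketch below assembles the same query string and a request URL for the NCBI E-utilities `esearch` endpoint. The source does not say the search was run this way, so the use of E-utilities, the helper names `build_title_query` and `build_esearch_url`, and the `retmax` value are all assumptions made for the example. The code only builds the URL and does not send a request.

```python
from urllib.parse import urlencode

# Public NCBI E-utilities search endpoint (an assumption: the authors may
# simply have used the PubMed web interface).
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_title_query(start: str, end: str) -> str:
    """Build the title-restricted query with a publication-date range."""
    return (
        'prevalence[TI] AND "systematic review"[TI] '
        f'AND ("{start}"[PDAT] : "{end}"[PDAT])'
    )


def build_esearch_url(term: str, retmax: int = 500) -> str:
    """Return an esearch URL for PubMed; retmax=500 is an arbitrary choice."""
    params = {"db": "pubmed", "term": term, "retmax": retmax, "retmode": "json"}
    return f"{ESEARCH_URL}?{urlencode(params)}"


query = build_title_query("2017/02/01", "2018/02/01")
url = build_esearch_url(query)
```

Keeping the query in one function makes the date window explicit and easy to change if the search is later updated.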

Study selection and eligibility criteria

The selection of studies was conducted in two phases by two independent reviewers. First, we screened titles and abstracts. Then, we retrieved the full-text of potentially relevant studies to identify studies meeting our inclusion criteria.

We included systematic reviews of prevalence of any clinical condition, including diseases or symptoms. We excluded primary studies, letters, narrative reviews, systematic reviews of interventions or diagnostic accuracy, systematic reviews assessing the association between variables and systematic reviews of prevalence that did not use patients as the unit of measurement, as well as studies not published in English.

Data abstraction

Using a standard and piloted form, one author extracted relevant data from each review and another author independently checked all data. Discrepancies were discussed and solved by consensus or by a third reviewer.

We abstracted the following data from individual studies: general information about the paper (number of authors, journal and year of publication), reporting of funding, reporting of search strategy, number and description of databases consulted, number of reviewers involved in each step of the review (study screening, selection, inclusion and data extraction), number of studies included in the review, methods for risk of bias appraisal and quality of evidence assessment, synthesis of results, assessment of publication bias and details of meta-analytic processes if meta-analysis was conducted (including variance estimator, transformation of data, heterogeneity assessment, and software used).

Data analysis

Results are presented using descriptive statistics. Quantitative variables are presented as means and standard deviations or median and interquartile range, as appropriate; and qualitative variables are presented in absolute and relative frequencies.
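The descriptive summaries described above (median with interquartile range, and absolute with relative frequencies) can be sketched as follows. The helper names and the example list are hypothetical; the source does not state which quantile convention or software was used for these summaries, so the `inclusive` method here is an assumption, and other conventions can give slightly different quartiles.

```python
import statistics


def median_iqr(values):
    """Return (median, Q1, Q3) using the 'inclusive' quartile convention."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, q1, q3


def frequency(count, total):
    """Format an absolute count with its relative frequency in percent."""
    return f"{count} ({100 * count / total:.1f}%)"


# Hypothetical numbers of databases searched in five reviews.
databases_searched = [1, 2, 3, 4, 5]
median, q1, q3 = median_iqr(databases_searched)

# Counts taken from the results: 152 of 235 reviews performed meta-analysis.
meta_analysis_share = frequency(152, 235)
```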

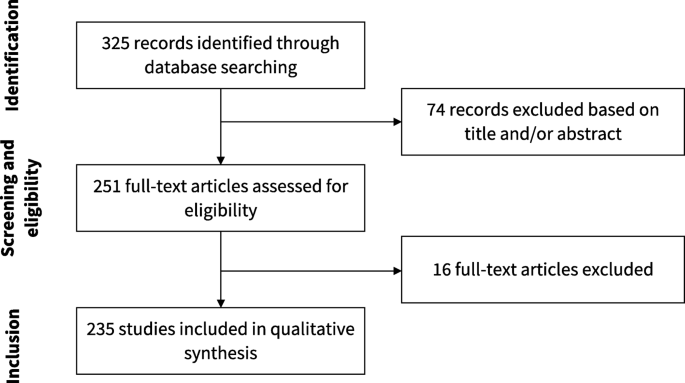

Our search resulted in 325 articles. We assessed the full text of 251 and included 235 of them in our analysis. Figure 2 presents the flowchart of study selection. The main characteristics of the included studies are described in Table 1 . The complete list of included studies is presented in Additional file 1 and the list of full text excluded with reasons is presented in Additional file 2 . The complete data extraction table is presented in Additional file 3 .

Fig. 2 Flowchart of study selection

Of 235 studies included, 83 (35.3%) presented narrative synthesis, without quantitative synthesis, while 152 (64.7%) performed meta-analysis. These 235 articles were published in 85 different journals. The leading publication journals (with 5 or more published reviews) were PLoS One, BMJ Open, Lancet Global Health and Medicine. The complete list of journals where included reviews were published is presented in Additional file 4 .

Fifty-three studies (22.6%) published or registered a protocol, and 170 (72.3%) used a reporting guideline, including PRISMA ( n = 161, 68.5%), MOOSE ( n = 27, 11.5%) and GATHER ( n = 2, 0.9%). In 56 studies (23.8%) there was no reporting of funding.

The median number of databases searched in each review was 4 (IQR 3–6); 231 studies (98.3%) used PubMed, 146 (62.1%) used Embase, 93 (39.6%) used Web of Science, and 70 (29.8%) used Cochrane CENTRAL, even though this is a database focused on interventional studies and systematic reviews. Most reviews ( n = 228, 97.0%) reported the search strategy used, although 69 (29.4%) reported it incompletely (presented the terms used, but not how the search strategy was designed). The median number of included original studies in each systematic review was 24 (IQR 15–41.5).

One hundred and eighty-eight reviews (80.0%) assessed the methodological quality of included studies. One hundred and five (44.7%) reported that this assessment was performed by at least two reviewers. There was great variability in the instruments used to critically appraise included studies. Twenty-four studies (10.2%) developed new tools, and 24 (10.2%) used non-validated tools from previous similar systematic reviews. Among validated and specific tools to assess prevalence studies, the JBI prevalence critical appraisal tool was the most used ( n = 21, 8.9%). Fifteen reviews (6.4%) used STROBE, a reporting guideline, to assess the methodological quality of included studies. Thirty-two of the 188 reviews that assessed quality (17.0%) used the assessment of methodological quality as an inclusion criterion for the review.

Nine studies (3.8%) assessed the quality of the body of evidence, with GRADE being the most frequently used approach ( n = 4, 1.7%). Not all methods used to assess the quality of evidence were validated and appropriate. For instance, one study summarized the quality of evidence as the mean STROBE score of included studies.

Statistical methods for meta-analysis

One hundred and fifty-two studies (64.7%) conducted meta-analysis to summarize prevalence estimates. The methods used by these reviews are summarized in Table 2 .

The vast majority of studies (n = 151, 99.3%) used classic methods instead of Bayesian approaches to pool prevalence estimates. The majority of studies pooled estimates using a random effects model ( n = 141, 93.4%), and 7 studies (4.6%) utilized the fixed effect model. Two studies (1.3%) used the ‘quality model’, where the weight of each study was calculated based on a quality assessment. However, both reviews used non-validated methods to critically appraise included studies.

In relation to variance estimation in the reviews that conducted random effects meta-analysis, the DerSimonian and Laird method was used in 30 reviews (21.3%). However, 106 studies (75.2%) did not report the variance estimator used. Forty-five studies (29.6%) reported how they transformed the prevalence estimates, and the most used methods were Freeman-Tukey double arcsine ( n = 32, 21.1%), logit ( n = 5, 3.3%) and log ( n = 4, 2.6%). Heterogeneity among studies was assessed with the I² statistic in 144 studies (94.7%) and with meta-regression in 57 studies (37.5%); subgroup analysis was performed in 89 studies (58.6%). Most analyses ( n = 105, 76.1%) had an I² estimate of 90% or more. Publication bias was assessed with funnel plots ( n = 56, 36.8%) and Egger’s test ( n = 54, 35.5%). Prediction intervals were estimated in 3 reviews (2.0%).
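To make the reported choices concrete, the following is a minimal sketch of a random effects meta-analysis of prevalence using the logit transformation, the DerSimonian and Laird estimator of between-study variance, and the I² statistic. It is not the method of any particular included review; the function names and the three example studies are hypothetical, and the logit variance used is the usual large-sample approximation, which fails when a study has zero events or zero non-events.

```python
import math


def logit_prevalence(events, n):
    """Logit-transformed prevalence and its approximate variance."""
    p = events / n
    return math.log(p / (1 - p)), 1 / events + 1 / (n - events)


def dersimonian_laird(effects, variances):
    """Pool effects with the DerSimonian-Laird random effects estimator.

    Returns (pooled effect, standard error, tau^2, I^2 in percent).
    """
    k = len(effects)
    w = [1 / v for v in variances]  # inverse-variance (fixed effect) weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)  # between-study variance, truncated at 0
    w_star = [1 / (v + tau2) for v in variances]  # random effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return pooled, se, tau2, i2


def inv_logit(x):
    """Back-transform a logit value to a proportion."""
    return 1 / (1 + math.exp(-x))


# Hypothetical studies as (events, sample size); not data from the review.
studies = [(30, 200), (45, 150), (12, 180)]
transformed = [logit_prevalence(e, n) for e, n in studies]
effects = [t[0] for t in transformed]
variances = [t[1] for t in transformed]

pooled, se, tau2, i2 = dersimonian_laird(effects, variances)
pooled_prevalence = inv_logit(pooled)
ci_low = inv_logit(pooled - 1.96 * se)
ci_high = inv_logit(pooled + 1.96 * se)
```

Reporting `tau2` alongside the pooled estimate, as well as naming the estimator and the transformation, covers exactly the items that most of the reviews described above left unreported.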

This meta-epidemiological study identified 235 systematic reviews addressing a question related to the prevalence of any clinical condition. We found that, across the included systematic reviews of prevalence, there are significant and important discrepancies in how these reviews approach searching, risk of bias and data synthesis. In line with our results, a recently published study assessed a random sample of 215 systematic reviews of prevalence and cumulative incidence, and also found great heterogeneity among the methods used to conduct these reviews [ 7 ]. In our view, the study conducted by Hoffmann et al. and our study are complementary. For instance, in the former, the authors included reviews published in any year and compared the characteristics of reviews published before or after 2015 and with or without meta-analysis; in our study, we assessed other methodological characteristics of the included reviews, especially those related to the conduct of meta-analysis.

One area for potential guidance to inform future systematic reviews of prevalence data is the risk of bias or critical appraisal stage. As can be seen from the results of our review, a number of checklists and tools have been used. Some of the tools utilized were not appropriate for this assessment, such as the Newcastle Ottawa Scale (designed for cohort and case-control studies) [ 8 ] or STROBE (a reporting standard) [ 9 ]. Interestingly, some of the tools identified were designed specifically for studies reporting prevalence information, whilst other tools were adapted for this purpose, with the most adaptations to any one tool ( n = 13, 5.5%) being made to the Newcastle Ottawa Scale. This is particularly concerning when reviews have used results of quality assessment to determine inclusion in the review, as was the case in 17% of the included studies. The combination of (a) using inadequate or inappropriate critical appraisal tools and (b) using the results of these tools to decide upon inclusion in a systematic review could lead to the inappropriate exclusion of relevant studies, which can alter the final results and produce misleading estimates. As such, there is an urgent need for the development and validation of a tool for assessing prevalence studies, along with endorsement and acceptance by the community to assist with standardization in this field. In the meantime, we urge reviewers to refer to the JBI critical appraisal tool [ 4 ], which has been formally evaluated and is increasingly used across these types of reviews.

Encouragingly, 72.3% of the included reviews adhered to or cited a reporting guideline. The main reporting guideline cited was the PRISMA statement [ 6 ]. However, this reporting guideline was designed particularly for reviews of therapeutic interventions. There have been multiple extensions to the original PRISMA statement for various review types [ 10 , 11 ], but no extension has yet been considered for systematic reviews of prevalence data. To ensure there is a reporting standard for use in prevalence systematic reviews, an extension or a broader version of the PRISMA statement including items important for this review type is recommended.

It was encouraging to see that multiple databases were often searched during the systematic review process, which is a recommendation for all review types. It is also important in systematic reviews that all the evidence is identified, and in the case of prevalence information, it may be particularly useful to search for data in unpublished sources, such as clinical registries, government reports, census data, and national administrative datasets [ 12 , 13 ]. However, there are no clearly established procedures on how to deal with this kind of information. Further guidance on searching for evidence in prevalence reviews is required.

In our review, we found that 64.7% of reviews conducted meta-analysis. There has been debate regarding the appropriateness of meta-analysis within systematic reviews of prevalence [ 14 , 15 ] [ 16 , 17 , 18 ], largely surrounding whether synthesizing across different populations is appropriate, as we reasonably expect prevalence rates to vary across contexts and where different diagnostic criteria may be employed. However, meta-analysis, when done appropriately and using correct methodology, can provide important information regarding the burden of disease, including differences amongst populations and regions and changes over time, and can provide a summarized estimate for use when calculating baseline risk, such as in GRADE summary of findings tables.

The vast majority of meta-analyses used classic methods and a random effects model, which is appropriate in these types of reviews [ 17 ] [ 18 ]. Although the common use of random effects across the reviews is encouraging, this is where the consistency ends, as we once again see considerable variability in the choice of methods to transform prevalence estimates for proportional meta-analysis. The most widely used approach was the Freeman-Tukey double arcsine transformation. This has been recommended as the preferred method for transformation [ 15 , 18 ], although more recently it has come under question [ 19 ]. As such, further guidance and investigation into meta-analytical techniques is urgently required.
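For readers unfamiliar with the transformation named above, here is a minimal sketch of the Freeman-Tukey double arcsine transformation in one of its common forms (the sum of two arcsines, with approximate variance 1/(n + 0.5)). Some software uses half of this sum instead, with a correspondingly smaller variance, so the scaling is an assumption of this example. The function names are hypothetical, and the back-transformation shown is the simplest possible one, included only to show the scale of the transformed values; it is not presented as a recommended method.

```python
import math


def freeman_tukey(events, n):
    """Double arcsine transform of a proportion and its approximate variance.

    Unlike the logit, the transform stays finite when events is 0 or n.
    """
    t = math.asin(math.sqrt(events / (n + 1))) + math.asin(
        math.sqrt((events + 1) / (n + 1))
    )
    return t, 1 / (n + 0.5)


def naive_back_transform(t):
    """Simple inverse, sin^2(t / 2); an approximation that ignores sample size."""
    return math.sin(t / 2) ** 2


# Hypothetical study: 50 cases among 100 participants.
t, var = freeman_tukey(50, 100)
approx_prevalence = naive_back_transform(t)

# A study with zero events still yields a finite transformed value.
t_zero, var_zero = freeman_tukey(0, 10)
```

The transformed values and variances can then be pooled with the same inverse-variance machinery used for any other effect measure, which is why the choice of transformation and of back-transformation needs to be reported explicitly.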

In reviews including meta-analysis, heterogeneity was assessed with I² in 94.7% of the included studies. Although I² provides a useful indication of statistical heterogeneity amongst studies included in a meta-analysis, it can be misleading in cases where studies provide large datasets with precise confidence intervals (such as in prevalence reviews) [ 20 ]. Other assessments of heterogeneity, such as τ² and prediction intervals, may be more appropriate in these types of reviews [ 20 , 21 ]. Prediction intervals include the expected range of true effects in similar studies [ 22 ]. This is a more conservative way to incorporate uncertainty in the analysis when true heterogeneity is expected; however, it is still underused in meta-analysis of prevalence. Further guidance on assessing heterogeneity in these types of reviews is required.
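The contrast drawn above can be made explicit with the standard formulas. The notation is introduced here for illustration and does not come from the source: Q is Cochran's heterogeneity statistic, k the number of studies, τ̂² the estimated between-study variance, s² a typical within-study variance, μ̂ the pooled estimate on the analysis scale and SE(μ̂) its standard error.

$$
I^2 = \max\!\left(0,\ \frac{Q-(k-1)}{Q}\right)\times 100\% \;\approx\; \frac{\hat\tau^2}{\hat\tau^2 + s^2}\times 100\%
$$

$$
\text{95\% prediction interval:}\quad \hat\mu \;\pm\; t_{k-2,\,0.975}\,\sqrt{\hat\tau^2 + \mathrm{SE}(\hat\mu)^2}
$$

Because s² shrinks as studies become larger, I² approaches 100% in collections of large, precise prevalence studies even when τ̂² is modest. The prediction interval depends on τ̂² directly and is expressed on the same scale as the pooled estimate, so it can be back-transformed and read as a range of prevalences. It requires at least three studies, since the t quantile has k − 2 degrees of freedom.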

Of the 235 systematic reviews analyzed, only 9 (3.8%) included a formal quality assessment or process to establish certainty of the entire body of evidence. Distinct from critical appraisal, quality assessment of the entire body of evidence considers other factors in addition to methodological assessment that may impact on the subsequent recommendations drawn from such evidence [ 23 ]. Of these 9 reviews, only 4 (1.7%) followed the GRADE approach [ 24 ], which is now the commonplace methodology to reliably and sensibly rate the quality of the body of evidence. The small number of identified reviews that included formal quality assessment of the entire body of evidence might be linked to the lack of formal guidance from the GRADE working group. The GRADE approach was developed to assess issues related to interventions (using evidence either from interventional or observational studies) and diagnostic tests; however, there are several extensions for the application of GRADE methodology. While there is no formal guidance for GRADE in systematic reviews of prevalence, there is some guidance on the use of GRADE for baseline risk or overall prognosis [ 25 ], which may be useful for these types of reviews. Until formal guidance exists, this guidance can serve as an interim method and is recommended by the authors for all future systematic reviews of prevalence. Whilst the number of identified studies that utilized GRADE in particular is small, it is encouraging to see the adoption of these methods in systematic reviews of prevalence data, as these examples will help contribute to the design of formal guidance for this data type in future.

It is reasonable to expect some differences in how these types of reviews are conducted, as different author groups will rationally disagree regarding key issues, such as whether Bayesian approaches are best or which is the ideal method for transforming data. However, the wide inconsistency and variability noted in our review are far beyond the range of what could be considered reasonable and are of considerable concern for a number of reasons: (1) this lack of standardization may encourage unnecessary duplication of reviews as reviewers approach similar questions with their own preferred methods; (2) novice reviewers searching for exemplar reviews may follow inadequate methods as they conduct these reviews; (3) end users, peer reviewers and readers of these reviews may be confused as they are required to become accustomed to various ways of conducting these studies; (4) review authors themselves may follow inadequate approaches or miss key steps or information sources, increasing the potential for inaccurate or misleading summarized estimates; (5) a lack of standardization across reviews limits the ability to streamline, automate or use artificial intelligence to assist systematic review production, which is a burgeoning field of inquiry and research; and (6) these poorly conducted and reported reviews may have limited or even detrimental impacts on the planning and provision of healthcare.

As such, we urgently call for the following to rectify these issues: (1) further methods development in this field and updated guidance on the conduct of these types of reviews; (2) a reporting standard for these reviews (such as an extension to PRISMA); (3) the development (or endorsement) of a tailored risk of bias tool for studies reporting prevalence estimates; and (4) the further development and promotion of software [ 26 ] and training materials [ 27 ] for these review types to support authors conducting these reviews.

Limitations of our study

In this study, we have collated and interrogated the largest dataset of systematic reviews of prevalence currently available. Although only a sample, it is likely to be representative of all published prevalence systematic reviews. However, it is important to acknowledge that we only searched MEDLINE over a period of 1 year using a search strategy that only retrieved studies with the terms “prevalence” and “systematic review” in the title. Systematic reviews of prevalence published in journals not indexed in MEDLINE (or not published at all) may be meaningfully different from those characterized in our results. However, given that reviews indexed on MEDLINE are (hypothetically) likely to be of higher quality than those not indexed, and given that we still identified substantial inconsistency, variability and potentially inappropriate practices in this sample, we doubt that a broader search would have altered our main conclusions significantly. Similarly, we believe that reviews that do not use the terms “prevalence” and “systematic review” in the title would have, overall, even more inappropriate methods. Regarding the timeframe limitation, we decided to include only recently published reviews because older reviews may not reflect current practice.

This meta-epidemiological review found that, among this sample of published systematic reviews of prevalence, there are considerable discrepancies in conduct, reporting, risk of bias assessment and data synthesis. This variability is understandable given the limited amount of guidance in this field and the lack of both a reporting standard and a widely accepted risk of bias or critical appraisal tool. Our findings are a call to action to the evidence synthesis community to urgently develop guidance and reporting standards for these types of systematic reviews.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Abbreviations

AHRQ: Agency for Healthcare Research and Quality

GATHER: Guidelines for Accurate and Transparent Health Estimates Reporting

GRADE: Grading of Recommendations Assessment, Development and Evaluation

IQR: Interquartile range

JBI: Joanna Briggs Institute

MOOSE: Meta-Analysis of Observational Studies in Epidemiology

PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

PROSPERO: International Prospective Register of Systematic Reviews

Fletcher RH, Fletcher SW, Fletcher GS. Clinical epidemiology: the essentials: Lippincott Williams & Wilkins; 2012.

Coggon D, Barker D, Rose G. Epidemiology for the uninitiated: John Wiley & Sons; 2009.

Munn Z, Moola S, Riitano D, Lisy K. The development of a critical appraisal tool for use in systematic reviews addressing questions of prevalence. Int J Health Policy Manag. 2014;3(3):123.

Munn Z, Moola S, Riitano D, Lisy K. The development of a critical appraisal tool for use in systematic reviews addressing questions of prevalence. Int J Health Policy Manag. 2014;3(3):123–8.

Munn Z, Moola S, Lisy K, Riitano D, Tufanaru C. Systematic reviews of prevalence and incidence. In: Aromataris E, Munn Z, editors. Joanna Briggs Institute Reviewer's Manual: The Joanna Briggs Institute; 2017.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ. 2009;339:b2700.

Hoffmann F, Eggers D, Pieper D, Zeeb H, Allers K. An observational study found large methodological heterogeneity in systematic reviews addressing prevalence and cumulative incidence. J Clin Epidemiol. 2020;119:92–9.

Wells G, Shea B, O'Connell D, Peterson J, Welch V, Losos M, et al. The Newcastle-Ottawa Scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. Ottawa (ON): Ottawa Hospital Research Institute; 2009. Available in March 2016.

Von Elm E, Altman DG, Egger M, Pocock SJ, Gøtzsche PC, Vandenbroucke JP. The strengthening the reporting of observational studies in epidemiology (STROBE) statement: guidelines for reporting observational studies. Ann Intern Med. 2007;147(8):573–7.

Tricco AC, Lillie E, Zarin W, O'Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

McInnes MD, Moher D, Thombs BD, McGrath TA, Bossuyt PM, Clifford T, et al. Preferred reporting items for a systematic review and meta-analysis of diagnostic test accuracy studies: the PRISMA-DTA statement. Jama. 2018;319(4):388–96.

Munn Z, Moola S, Lisy K, Riitano D. The synthesis of prevalence and incidence data. Pearson A, editor. Philadelphia: Lippincott Williams & Wilkins; 2014.

Iorio A, Stonebraker JS, Chambost H, et al; for the Data and Demographics Committee of the World Federation of Hemophilia. Establishing the Prevalence and Prevalence at Birth of Hemophilia in Males: A Meta-analytic Approach Using National Registries. Ann Intern Med. 2019;171:540–6. Epub ahead of print 10 September 2019.

Rao SR, Graubard BI, Schmid CH, Morton SC, Louis TA, Zaslavsky AM, et al. Meta-analysis of survey data: application to health services research. Health Serv Outcome Res Methodol. 2008;8(2):98–114.

Barendregt JJ, Doi SA, Lee YY, Norman RE, Vos T. Meta-analysis of prevalence. J Epidemiol Community Health. 2013;67(11):974–8.

Jablensky A. Schizophrenia: the epidemiological horizon. In: Hirsch S, Weinberger D, editors. Schizophrenia. Oxford: Blackwell Science; 2003. p. 203–31.

Saha S, Chant D, McGrath J. Meta-analyses of the incidence and prevalence of schizophrenia: conceptual and methodological issues. Int J Methods Psychiatr Res. 2008;17(1):55–61.

Munn Z, Moola S, Lisy K, Riitano D, Tufanaru C. Methodological guidance for systematic reviews of observational epidemiological studies reporting prevalence and cumulative incidence data. Int J Evid Based Healthc. 2015;13(3):147–53.

Schwarzer G, Chemaitelly H, Abu-Raddad LJ, Rücker G. Seriously misleading results using inverse of Freeman-Tukey double arcsine transformation in meta-analysis of single proportions. Res Syn Meth. 2019;10:476–83.

Rücker G, Schwarzer G, Carpenter JR, Schumacher M. Undue reliance on I2 in assessing heterogeneity may mislead. BMC Med Res Methodol. 2008;8(1):79.

Borenstein M, Higgins JP, Hedges LV, Rothstein HR. Basics of meta-analysis: I2 is not an absolute measure of heterogeneity. Res Synth Methods. 2017;8(1):5–18.

IntHout J, Ioannidis JPA, Rovers MM, Goeman JJ. Plea for routinely presenting prediction intervals in meta-analysis. BMJ Open. 2016;6(7):e010247-e.

Guyatt GH, Oxman AD, Kunz R, Vist GE, Falck-Ytter Y, Schünemann HJ. What is “quality of evidence” and why is it important to clinicians? BMJ. 2008;336(7651):995.

Guyatt GH, Oxman AD, Vist GE, Kunz R, Falck-Ytter Y, Alonso-Coello P, et al. GRADE: an emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924.

Iorio A, Spencer FA, Falavigna M, Alba C, Lang E, Burnand B, et al. Use of GRADE for assessment of evidence about prognosis: rating confidence in estimates of event rates in broad categories of patients. BMJ. 2015;350:h870.

Munn Z, Aromataris E, Tufanaru C, Stern C, Porritt K, Farrow J, et al. The development of software to support multiple systematic review types: the Joanna Briggs institute system for the unified management, assessment and review of information (JBI SUMARI). Int J Evid Based Healthc. 2019;17(1):36–43.

Stern C, Munn Z, Porritt K, Lockwood C, Peters MD, Bellman S, et al. An international educational training course for conducting systematic reviews in health care: the Joanna Briggs Institute's comprehensive systematic review training program. Worldviews Evid-Based Nurs. 2018;15(5):401–8.

Acknowledgements

Not applicable.

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Authors and affiliations.

Programa de Pós-Graduação em Epidemiologia, Universidade Federal do Rio Grande do Sul, Porto Alegre, Rio Grande do Sul, Brazil

Celina Borges Migliavaca & Maicon Falavigna

Hospital Moinhos de Vento, Porto Alegre, Rio Grande do Sul, Brazil

Celina Borges Migliavaca, Cinara Stein, Verônica Colpani & Maicon Falavigna

JBI, Faculty of Health Sciences, University of Adelaide, Adelaide, Australia

Timothy Hugh Barker & Zachary Munn

Department of Health Research Methods, Evidence and Impact, McMaster University, Hamilton, ON, Canada

Maicon Falavigna

Contributions

MF conceived the study. CBM, CS and VC conducted study selection, data extraction and data analysis. VC, MF and ZM supervised the conduction of study. All authors discussed the concepts of this manuscript and interpreted the data. CBM and THB wrote the first version of the manuscript. All authors made substantial revisions and read and approved the final manuscript.

Corresponding author

Correspondence to Celina Borges Migliavaca.

Ethics declarations

Ethics approval and consent to participate, consent for publication, competing interests.

Zachary Munn is a member of the editorial board of this journal. Zachary Munn and Timothy Hugh Barker are members of the Joanna Briggs Institute. Zachary Munn and Maicon Falavigna are members of the GRADE Working Group. The authors have no other competing interests to declare.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Additional file 1.

Reference list of included articles.

Additional file 2.

List of excluded articles, with reasons for exclusion.

Additional file 3.

Complete data extraction table.

Additional file 4.

Journals of publication of included systematic reviews.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article.

Borges Migliavaca, C., Stein, C., Colpani, V. et al. How are systematic reviews of prevalence conducted? A methodological study. BMC Med Res Methodol 20, 96 (2020). https://doi.org/10.1186/s12874-020-00975-3

Received: 12 December 2019

Accepted: 12 April 2020

Published: 26 April 2020

DOI: https://doi.org/10.1186/s12874-020-00975-3

- Systematic review

- Methodological quality

- Meta-epidemiological study

BMC Medical Research Methodology

ISSN: 1471-2288

Studies of prevalence: how a basic epidemiology concept has gained recognition in the COVID-19 pandemic

- October 2022

- BMJ Open 12(10):e061497

How to Write a Strong Hypothesis | Steps & Examples

Published on May 6, 2022 by Shona McCombes. Revised on November 20, 2023.

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Example: Hypothesis

Daily apple consumption leads to fewer doctor’s visits.

Table of contents

- What is a hypothesis?

- Developing a hypothesis (with example)

- Hypothesis examples

- Other interesting articles

- Frequently asked questions about writing hypotheses

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more types of variables.

- An independent variable is something the researcher changes or controls.

- A dependent variable is something the researcher observes and measures.

If there are any control variables, extraneous variables, or confounding variables, be sure to jot those down as you go to minimize the chances that research bias will affect your results.

In this example, the independent variable is exposure to the sun – the assumed cause. The dependent variable is the level of happiness – the assumed effect.

Step 1. Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2. Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to ensure that you’re embarking on a relevant topic. This can also help you identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalize more complex constructs.

Step 3. Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4. Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5. Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if…then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6. Write a null hypothesis

If your research involves statistical hypothesis testing, you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

- H0: The number of lectures attended by first-year students has no effect on their final exam scores.

- H1: The number of lectures attended by first-year students has a positive effect on their final exam scores.
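As a rough illustration of how such a pair of hypotheses can be tested, the sketch below runs a one-sided permutation test on the correlation between lectures attended and exam score. The data, the function names and the choice of a permutation test are all assumptions made for this example; they do not come from the article, and other tests could be equally reasonable.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def permutation_p_value(xs, ys, n_permutations=5000, seed=0):
    """One-sided p-value for H1: a positive association between xs and ys."""
    rng = random.Random(seed)
    observed = pearson_r(xs, ys)
    shuffled = list(ys)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Shuffling ys breaks any link with xs, which simulates H0.
        rng.shuffle(shuffled)
        if pearson_r(xs, shuffled) >= observed:
            at_least_as_extreme += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical data: lectures attended and exam score for eight students.
lectures = [2, 4, 5, 7, 8, 10, 11, 12]
scores = [50, 55, 52, 63, 68, 70, 75, 80]

r = pearson_r(lectures, scores)
p = permutation_p_value(lectures, scores)
print(f"r = {r:.2f}, one-sided p = {p:.4f}")
```

A small p-value here would count as evidence against H0 in favour of H1 for this invented sample; it would not show that attending lectures causes higher scores.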

| Research question | Hypothesis | Null hypothesis |

|---|---|---|

| What are the health benefits of eating an apple a day? | Increasing apple consumption in over-60s will result in decreasing frequency of doctor’s visits. | Increasing apple consumption in over-60s will have no effect on frequency of doctor’s visits. |

| Which airlines have the most delays? | Low-cost airlines are more likely to have delays than premium airlines. | Low-cost and premium airlines are equally likely to have delays. |

| Can flexible work arrangements improve job satisfaction? | Employees who have flexible working hours will report greater job satisfaction than employees who work fixed hours. | There is no relationship between working hour flexibility and job satisfaction. |

| How effective is high school sex education at reducing teen pregnancies? | Teenagers who received sex education lessons throughout high school will have lower rates of unplanned pregnancy than teenagers who did not receive any sex education. | High school sex education has no effect on teen pregnancy rates. |

| What effect does daily use of social media have on the attention span of under-16s? | There is a negative correlation between time spent on social media and attention span in under-16s. | There is no relationship between social media use and attention span in under-16s. |

If you want to know more about the research process, methodology, research bias, or statistics, make sure to check out some of our other articles with explanations and examples.

- Sampling methods

- Simple random sampling

- Stratified sampling

- Cluster sampling

- Likert scales

- Reproducibility

Statistics

- Null hypothesis

- Statistical power

- Probability distribution

- Effect size

- Poisson distribution

Research bias

- Optimism bias

- Cognitive bias

- Implicit bias

- Hawthorne effect

- Anchoring bias

- Explicit bias

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

Cite this Scribbr article

McCombes, S. (2023, November 20). How to Write a Strong Hypothesis | Steps & Examples. Scribbr. Retrieved September 29, 2024, from https://www.scribbr.com/methodology/hypothesis/

Studies of prevalence: how a basic epidemiology concept has gained recognition in the COVID-19 pandemic

- Diana Buitrago-Garcia 1,2 (http://orcid.org/0000-0001-9761-206X)

- Georgia Salanti 1 (http://orcid.org/0000-0002-3830-8508)

- Nicola Low 1 (http://orcid.org/0000-0003-4817-8986)

- 1 Institute of Social and Preventive Medicine, University of Bern Faculty of Medicine, Bern, Switzerland

- 2 Graduate School of Health Sciences, University of Bern, Bern, Switzerland

- Correspondence to Professor Nicola Low; nicola.low{at}ispm.unibe.ch

Background Prevalence measures the occurrence of any health condition, exposure or other factors related to health. The experience of COVID-19, a new disease caused by SARS-CoV-2, has highlighted the importance of prevalence studies, for which issues of reporting and methodology have traditionally been neglected.

Objective This communication highlights key issues about risks of bias in the design and conduct of prevalence studies and in reporting them, using examples about SARS-CoV-2 and COVID-19.

Summary The two main domains of bias in prevalence studies are those related to the study population (selection bias) and the condition or risk factor being assessed (information bias). Sources of selection bias should be considered both at the time of the invitation to take part in a study and when assessing who participates and provides valid data (respondents and non-respondents). Information bias appears when there are systematic errors affecting the accuracy and reproducibility of the measurement of the condition or risk factor. Types of information bias include misclassification, observer and recall bias. When reporting prevalence studies, clear descriptions of the target population, study population, study setting and context, and clear definitions of the condition or risk factor and its measurement are essential. Without clear reporting, the risks of bias cannot be assessed properly. Bias in the findings of prevalence studies can, however, impact decision-making and the spread of disease. The concepts discussed here can be applied to the assessment of prevalence for many other conditions.

Conclusions Efforts to strengthen methodological research and improve assessment of the risk of bias and the quality of reporting of studies of prevalence in all fields of research should continue beyond this pandemic.

- EPIDEMIOLOGY

- STATISTICS & RESEARCH METHODS

This is an open access article distributed in accordance with the Creative Commons Attribution 4.0 Unported (CC BY 4.0) license, which permits others to copy, redistribute, remix, transform and build upon this work for any purpose, provided the original work is properly cited, a link to the licence is given, and indication of whether changes were made. See: https://creativecommons.org/licenses/by/4.0/.

https://doi.org/10.1136/bmjopen-2022-061497

In introductory epidemiology, students learn about prevalence, an easy to understand concept, defined as ‘a proportion that measures disease occurrence of any type of health condition, exposure, or other factor related to health’, 1 or ‘the proportion of persons in a population who have a particular disease or attribute at a specified point in time or over a specified period.’ 2 Prevalence is an important measure for assessing the magnitude of health-related conditions, and studies of prevalence are an important source of information for estimating the burden of disease, injuries and risk factors. 3 Accurate information about prevalence enables health authorities to assess the health needs of a population, to develop prevention programmes and prioritise resources to improve public health. 4 Perhaps, owing to the apparent simplicity of the concept of prevalence, methodological developments to assess the quality of reporting, the potential for bias and the synthesis of prevalence estimates in meta-analysis have been neglected, 5 when compared with the attention paid to methods relevant to evidence from randomised controlled trials and comparative observational studies. 6 7

The COVID-19 pandemic has shown the need for epidemiological studies to describe and understand a new disease quickly but accurately. 8 Studies reporting on prevalence have been an important source of evidence to describe the prevalence of active SARS-CoV-2 infection and of antibodies to SARS-CoV-2 and the spectrum of SARS-CoV-2-related morbidity, and have helped to understand factors related to infection and disease to inform national decisions about containment measures. 9–11 Accurate estimates of prevalence of SARS-CoV-2 are crucial because they are used as an input for the estimation of other quantities, such as infection fatality ratios, which can be calculated indirectly using seroprevalence estimates. 12 Assessments of published studies have, however, highlighted methodological issues that affect study design, conduct, analysis, interpretation and reporting. 13–15 In addition, some questions about prevalence need to be addressed through systematic reviews and meta-epidemiological studies. A high proportion of published systematic reviews of prevalence, however, also have flaws in reporting and methodological quality. 5 16 Confidence in the results of systematic reviews is determined by the credibility of the primary studies and the methods used to synthesise them.
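To make the dependence of the infection fatality ratio on seroprevalence concrete, here is a minimal sketch of the indirect calculation, assuming that the number of infections is approximated by seroprevalence multiplied by population size. The function name and all numbers are hypothetical and serve only to show the arithmetic.

```python
def infection_fatality_ratio(deaths, seroprevalence, population):
    """Indirect IFR: deaths divided by the estimated number of infections."""
    if not 0 < seroprevalence <= 1:
        raise ValueError("seroprevalence must be a proportion in (0, 1]")
    estimated_infections = seroprevalence * population
    return deaths / estimated_infections


# Hypothetical inputs, chosen only to illustrate the arithmetic.
population = 1_000_000
deaths = 500

for seroprevalence in (0.04, 0.05, 0.06):
    ifr = infection_fatality_ratio(deaths, seroprevalence, population)
    print(f"seroprevalence {seroprevalence:.0%} -> IFR {ifr:.2%}")
```

With these invented inputs, a seroprevalence of 4% rather than 5% moves the estimated ratio from 1.00% to 1.25%, which is why bias in the prevalence estimate carries through to the derived quantity.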

The objective of this communication is to highlight key issues about the risk of bias in studies that measure prevalence and about the quality of reporting, using examples about SARS-CoV-2 and COVID-19. We refer to prevalence at the level of a population, and not as a prediction at an individual level. The estimand is, therefore, ‘what proportion of the population is positive’ and not ‘what is the probability this person is positive.’ Although incidence and prevalence are related epidemiologically, we do not discuss incidence in this article because the study designs for measurement of the quantities differ. Bias is a systematic deviation of results or inferences from the underlying (unobserved) true values. 1 The risk of bias is a judgement about the degree to which the methods or findings of a study might underestimate or overestimate the true value in the target population, 7 in this case, the prevalence of a condition or risk factor. Quality of reporting refers to the completeness and transparency of the presentation of a research publication. 17 Risk of bias and quality of reporting are separate, but closely related, because it is only possible to assess the strengths and weaknesses of a study report if the methods and results are described adequately.

Bias in prevalence studies

The two main domains of bias in prevalence studies are those related to the study population (selection bias) and the condition being assessed (information bias) (figure 1). Biases involved in the design, conduct and analysis of a study affect its internal validity. Selection bias also affects external validity, the extent to which findings from a specific study can be generalised to a wider, target population in time and space. There are many names given to different biases, often addressing the same concept. For this communication, we use the names and definitions published in the Dictionary of Epidemiology. 1

Figure 1. Potential for selection bias and information bias in prevalence studies. Coloured lines relate to the coloured boxes, showing at which stage of study procedures selection bias (blue line) and information bias (purple line) can occur.

Selection bias

Selection bias relates to the representativeness of the sample used to estimate the prevalence in relation to the target population. The target population is the group of individuals to whom the findings, conclusions or inferences from a study can be generalised. 1 There are two steps in a prevalence study at which selection bias might occur: at the invitation to take part in the study and, among those invited, who takes part (figure 1).

Selection bias in the invitation to take part in the study

The probability of being invited to take part in a study should be the same for every person in the target population. Evaluation of selection bias at this stage should, therefore, account for the complexity of the strategy for identification of participants. For example, if participants are invited from people who have previously agreed to participate in a registry or cohort, each level of invitation that has contributed to the final setting should be judged for the increasing risk of self-selection. Those who are invited to take part might be defined by demographic characteristics (eg, children below 10 years) or study setting (eg, hospitalised patients), or might be a random sample of the general population. The least biased method to select participants in a prevalence study is to sample at random from the target population. For example, the Real-time Assessment of Community Transmission (REACT) Studies to assess the prevalence of the virus, using molecular diagnostic tests (REACT-1) and antibodies (REACT-2), invite random samples of people, stratified by area, from the National Health Service patient list in England. 9 Those invited are close to a truly random sample because almost everyone in England is registered with a general practitioner. In some cases, criteria applied to the selection of a random sample might still result in considerable bias. For example, a seroprevalence study conducted in Spain did not include care home addresses, which could have excluded around 6% of the Spanish older population. 18 Excluding people in care home facilities might lead to underestimation of SARS-CoV-2 seroprevalence in older adults, if their risk of exposure was higher than the average in the general population. 13 Other methods of sampling are at risk of selection bias. Asking for volunteers through advertisements, for instance, is liable to selection bias because not everyone has the same probability of seeing or replying to the advert. The use of social media to invite people to a drive-in test centre to estimate the population prevalence of antibodies to SARS-CoV-2, 19 or of online adverts to assess mental health symptoms during the pandemic, excludes those without an internet connection or who do not use social media, such as older people. 20
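The effect of leaving a subgroup out of the sampling frame can be sketched with simple arithmetic. In the example below, the 6% care home share is taken from the text, whereas both prevalence values are hypothetical and were chosen only to show the direction of the bias; the function name is likewise an assumption.

```python
def overall_prevalence(strata):
    """Population prevalence as a share-weighted mean of stratum prevalences.

    strata: list of (population_share, prevalence) pairs; shares sum to 1.
    """
    total_share = sum(share for share, _ in strata)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(share * prevalence for share, prevalence in strata)


# The 6% care home share comes from the text; both prevalences are hypothetical.
care_home_share = 0.06
community_prevalence = 0.05
care_home_prevalence = 0.20

true_prevalence = overall_prevalence([
    (1 - care_home_share, community_prevalence),
    (care_home_share, care_home_prevalence),
])
# A sampling frame without care homes can only recover the community value.
frame_prevalence = community_prevalence
print(f"target population: {true_prevalence:.3f}, sampling frame: {frame_prevalence:.3f}")
```

Under these assumed values, the frame that omits care homes yields 0.050 against 0.059 in the full target population. If exposure in care homes were lower than in the community, the bias would run the other way.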

Selection bias related to who takes part in the study

Non-response bias occurs when people who have been invited, but do not take part in a study differ systematically from those who take part in ways that are associated with the condition of interest. 21 In the REACT-1 study, 22 for example, across four survey rounds, the investigators invited 2.4 million people; 596 000 swabs that were returned had a valid result (25%). The proportion of participants responding was lower in later than in earlier rounds, in men than women and in younger than older age groups. If the sociodemographic characteristics of the target population are known, the observed results could be weighted statistically to represent the overall population but might still be biased by unmeasurable characteristics that drive willingness to take part.
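The statistical weighting mentioned above can take several forms. A minimal sketch of one of them, post-stratification by age group, is shown below. The strata, counts and population shares are invented for illustration, and the sketch cannot correct for unmeasured characteristics that drive willingness to take part.

```python
def poststratified_prevalence(sample, population_shares):
    """Weight stratum-specific prevalences by known population shares.

    sample: {stratum: (positives, respondents)}
    population_shares: {stratum: share of the target population}
    """
    estimate = 0.0
    for stratum, share in population_shares.items():
        positives, respondents = sample[stratum]
        estimate += share * positives / respondents
    return estimate


# Hypothetical survey in which younger people respond less often.
sample = {"18-39": (30, 200), "40-64": (40, 400), "65+": (20, 400)}
population_shares = {"18-39": 0.40, "40-64": 0.40, "65+": 0.20}

positives = sum(p for p, _ in sample.values())
respondents = sum(n for _, n in sample.values())
crude = positives / respondents
weighted = poststratified_prevalence(sample, population_shares)
print(f"crude: {crude:.3f}, weighted: {weighted:.3f}")
```

In this invented sample, the under-represented younger group has the highest prevalence, so the weighted estimate (0.110) is higher than the crude one (0.090).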

The direction of non-response bias is often not predictable (it can result in over- or underestimation of the true prevalence) because information about the motivation to take part in a study, or not, is not usually collected. 13 In a multicentre cross-sectional survey of the prevalence of PCR-determined SARS-CoV-2 in hospitals in England, the authors suggested that different selection biases could have had opposing effects. 23 For example, staff might have volunteered to take part if they were concerned that they might have been exposed to COVID-19. If such people were more likely than unexposed people to be tested, prevalence might be overestimated. Alternatively, workers in lower-paid jobs without financial support might have been less likely to take part than those at higher grades because of the consequences for themselves or their contacts if found to be infected. If the less well-paid jobs are also associated with a higher risk of exposure to SARS-CoV-2, the prevalence in the study population would be underestimated. Accorsi et al suggest that the risk of non-response bias in seroprevalence studies might be reduced by sampling from established and well-characterised cohorts with high levels of participation, in whom the characteristics of non-respondents are known. 13

As the proportion of invited people who do not take part in a study (non-respondents) increases, the probability of non-response bias might also increase if the topic of the study influences the probability of taking part and the composition of the study population. 24 Empirical evidence of bias was found in a systematic review of sexually transmitted Chlamydia trachomatis infection; prevalence surveys with the lowest proportion of respondents found the highest prevalence of infection, suggesting selective participation by those with a high risk of being infected. 25 Whether or not there is a dose–response relationship between the proportion of non-respondents and the likelihood of SARS-CoV-2 infection is unclear. The risks of selection bias at the stages of invitation and participation can be interrelated and might oppose each other. In the REACT-1 study, 22 it is not clear whether the reduction in selection bias through random sampling outweighed the potential for selection bias owing to the high and increasing proportion of non-respondents over time, or vice versa.

Information bias

Information bias occurs when there are systematic errors affecting the completeness or accuracy of the measurement of the condition or risk factor of interest. There are different types of information bias.

Misclassification bias

This bias refers to the incorrect classification of a participant as having, or not having, the condition of interest. Misclassification is an important source of measurement bias in prevalence studies because diagnostic tests are imperfect and might not distinguish clearly between those with and without the condition. 26 For diagnostic tests, the predictive values will also be influenced by the prevalence of the condition in the study population. Seroprevalence studies are essential for determining the proportion of a population that has been exposed to SARS-CoV-2 up to a given time point. Detection of antibodies is affected by the test type and manufacturer, the sample type (such as serum, dried blood spots, saliva, urine or others), 27 28 and the time of sampling after infection. Different diagnostic tests might also be used in participants in the same study population, but adjustment for test performance is not always appropriate because the characteristics derived from studies in which the tests were validated might differ from the study population. 13 Accorsi et al have described this issue, and other biases in the ascertainment of SARS-CoV-2 seroprevalence, in detail. 13 Test accuracy can also change across populations, owing to the inherent characteristics of tests when clinical variability is present, 29 for example, when tests for SARS-CoV-2 detection are applied to people with or without symptoms.
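The dependence of predictive values on prevalence, and one common way of adjusting an observed prevalence for test performance, can be sketched as follows. The adjustment shown is the Rogan-Gladen estimator, which is our choice of illustration and is not named in the text; the sensitivity, specificity and prevalence values are hypothetical. As noted above, such an adjustment is only as good as the assumption that the validation-study sensitivity and specificity apply to the study population.

```python
def predictive_values(prevalence, sensitivity, specificity):
    """Return (PPV, NPV) for a test applied at a given true prevalence."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


def rogan_gladen(apparent_prevalence, sensitivity, specificity):
    """Adjust the test-positive proportion for imperfect sensitivity and specificity."""
    youden = sensitivity + specificity - 1
    if youden <= 0:
        raise ValueError("test must perform better than chance (Se + Sp > 1)")
    estimate = (apparent_prevalence + specificity - 1) / youden
    # The raw estimate can fall outside [0, 1] in small samples, so truncate it.
    return min(1.0, max(0.0, estimate))


# Hypothetical test characteristics.
se, sp = 0.90, 0.95

ppv_low, _ = predictive_values(0.01, se, sp)   # low-prevalence setting
ppv_high, _ = predictive_values(0.20, se, sp)  # higher-prevalence setting
print(f"PPV at 1% prevalence: {ppv_low:.2f}; at 20% prevalence: {ppv_high:.2f}")

# With a true prevalence of 20%, the expected test-positive proportion is
# 0.90 * 0.20 + 0.05 * 0.80 = 0.22; the adjustment recovers 0.20.
adjusted = rogan_gladen(0.22, se, sp)
print(f"adjusted prevalence: {adjusted:.2f}")
```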

For a new disease, such as COVID-19, diagnostic criteria might not be standardised or might change over time. For example, accurate assessment of the prevalence of persistent asymptomatic SARS-CoV-2 infection requires a complete list of symptoms and follow-up for a sufficiently long duration to ensure that symptoms did not develop later. 15 30 In a prevalence study conducted in a care home in March 2020, patients were asked about typical and non-typical symptoms of COVID-19. However, symptoms such as anosmia or ageusia had not been reported in association with SARS-CoV-2 at that time, so patients with these as isolated symptoms could have been wrongly classified as asymptomatic. 15 31 Poor quality of data collection has also been found in studies estimating the prevalence of mental health problems during the pandemic. 32 The use of non-validated scales, or dichotomisation to define cases using inappropriate or unclear thresholds, will bias the estimated prevalence of the condition. Misclassification may also occur in calculations of the prevalence of SARS-CoV-2 in contacts of diagnosed cases if not all contacts are tested and it is assumed that individuals who were not tested were uninfected. 13
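The effect of assuming that untested contacts are uninfected can be shown with simple arithmetic. The sketch below uses hypothetical counts and a helper name of our own; it compares the estimate under that assumption with the estimate among tested contacts and with the extreme case in which every untested contact was infected.

```python
def contact_prevalence_scenarios(n_contacts, n_tested, n_positive):
    """Prevalence among contacts under three treatments of untested contacts."""
    if not 0 <= n_positive <= n_tested <= n_contacts or n_tested == 0:
        raise ValueError("require 0 <= positive <= tested <= contacts and tested > 0")
    n_untested = n_contacts - n_tested
    return {
        # Untested contacts counted as uninfected (the assumption criticised above).
        "untested_assumed_uninfected": n_positive / n_contacts,
        # Only contacts with a test result contribute to the denominator.
        "tested_only": n_positive / n_tested,
        # Worst case: every untested contact was infected.
        "untested_assumed_infected": (n_positive + n_untested) / n_contacts,
    }


# Hypothetical example: 100 contacts, 60 tested, 12 positive.
scenarios = contact_prevalence_scenarios(100, 60, 12)
for label, value in scenarios.items():
    print(f"{label}: {value:.2f}")
```

In this example the three estimates are 0.12, 0.20 and 0.52, so the assumption about untested contacts drives the result more than the test results themselves.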

Recall bias

This bias results in misclassification when the condition has been measured through surveys or questionnaires that rely on memory. A study that aimed to describe the characteristics and symptom profile of individuals with SARS-CoV-2 infection in the USA collected information about symptoms before, and for 14 days after, enrolment in the study. 33 The authors discuss the potential for recall bias when symptoms are collected retrospectively and when different people recollect different symptoms.

Observer bias

This bias occurs when an observer provides a wrong measurement because of a lack of training or because of subjectivity. 21 For example, a study in the USA found variation between 14 universities in the prevalence of clinical and subclinical myocarditis in competitive athletes with SARS-CoV-2 infection. 34 One of the diagnostic tools was cardiac magnetic resonance imaging, and the authors attributed some of the variability to differences in the protocols and in expertise among assessors. To reduce the risk of observer bias, researchers should aim to use tools that minimise subjectivity and to standardise training procedures.

Reporting studies of prevalence

There is no agreed list of preferred items for reporting studies of prevalence. The published article or a preprint is usually the only available record of a study to which most people, other than the investigators themselves, have access. The written report, therefore, needs to contain the information required to understand the possible biases and assess internal and external validity. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement is a widely used guideline, which includes recommendations for cross-sectional studies that examine associations between an exposure and outcome. 35 Table 1 shows selected items from the STROBE statement and recommendations for cross-sectional studies that are particularly relevant to the complete and transparent description of methods for studies of prevalence.


Table 1. Items from the STROBE checklist for cross-sectional studies that are relevant for prevalence studies

First, clear definitions of the target population, study setting and eligibility criteria to select the study population are required (STROBE items 5, 6a). These issues affect assessment of external validity 36 because estimates of prevalence in a specific population and setting are often generalised more widely. 1 14 Second, the denominator used to calculate the prevalence should be clearly stated, with a description of each stage of the study showing the numbers of individuals eligible, included and analysed (STROBE item 13a, b). Accurate reports of the numbers and characteristics of those who take part (responders) or do not take part (non-responders) in the study are needed for the assessment of selection bias, but this information is not always available. 24 37 Poor reporting about the proportion of responders has been described as one of the main limitations of studies in systematic reviews of prevalence. 38 As with reports of studies of any design, the statistical methods applied to provide prevalence estimates, including methods used to address missing data (STROBE item 12c) and to account for the sampling strategy (STROBE item 12d), need to be reported clearly. 35 The setting, location and periods of enrolment and data collection (STROBE item 5) are particularly important for studies of SARS-CoV-2; the stage of the pandemic, preventive measures in place and virus variants in circulation should all be described because these affect the interpretation of estimates of prevalence. Third, it is crucial to provide a clear definition of the condition or risk factor of interest (STROBE item 7) and how it was measured (STROBE item 8), so that the risk of information bias can be assessed. The definition may be straightforward if there are objective criteria for ascertainment. For example, studies of the prevalence of active SARS-CoV-2 infection should report the diagnostic test, manufacturer, sample type and criteria for a positive result. 39 40 For new conditions that have not been fully characterised, such as post-COVID-19 condition, also known as ‘long COVID-19’, reporting of prevalence can be challenging. 41 42 The WHO produced a case definition 43 in October 2021, but this might take time to be adopted widely.
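The reporting of denominators at each stage of a study (STROBE item 13) can be illustrated with a short calculation. This is a sketch under stated assumptions: the participant counts are hypothetical, the Wilson score interval is one commonly used method for a proportion and is our choice rather than a STROBE requirement, and the calculation ignores any complex sampling design.

```python
import math


def wilson_interval(positives, n, z=1.959964):
    """Wilson score confidence interval for a proportion (default: 95%)."""
    if n <= 0 or not 0 <= positives <= n:
        raise ValueError("require n > 0 and 0 <= positives <= n")
    p = positives / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical participant flow, reported stage by stage.
flow = {"eligible": 1000, "included": 600, "analysed": 580, "positive": 29}

response_proportion = flow["included"] / flow["eligible"]
prevalence = flow["positive"] / flow["analysed"]  # denominator: those analysed
lower, upper = wilson_interval(flow["positive"], flow["analysed"])

print(f"responders: {flow['included']}/{flow['eligible']} ({response_proportion:.0%})")
print(f"prevalence: {flow['positive']}/{flow['analysed']} = {prevalence:.3f} "
      f"(95% CI {lower:.3f} to {upper:.3f})")
```

Stating the counts alongside the estimate lets readers see which denominator was used and how many invited people did not contribute to it.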

The COVID-19 pandemic has produced an enormous amount of research about a single disease, published over a short time period. 44 45 Authors who have assessed the body of research on COVID-19 have highlighted concerns about the risks of bias in different study designs, including studies of prevalence. 13 44 In systematic reviews of a single topic, the occurrence of asymptomatic SARS-CoV-2 infection, we observed high between-study heterogeneity, serious risks of bias and poor reporting in the measurement of prevalence. 30 Biased results from prevalence studies can have a direct impact at the levels of the individual, community, global health and policy-making. This communication describes concepts about risks of bias and provides examples that authors can apply to the assessment of prevalence for many other conditions. Future research should be conducted to investigate sources of bias in studies of prevalence and empirical evidence of their influence on estimates of prevalence. The development of a tool that can be adapted to assess the risk of bias in studies of prevalence, and an extension to the STROBE reporting guideline, specifically for studies of prevalence, would help to improve the quality of published studies of prevalence in all fields of research beyond this pandemic.

Acknowledgments

We would like to thank Yuly Barón, who created figure 1.

References

- Rothman KJ ,

- Greenland S ,

- Institute for Health Metrics and Evaluation (IHME)

- GBD 2019 Diseases and Injuries Collaborators

- Hoffmann F ,

- Pieper D , et al

- Davey-Smith G ,

- Higgins JPT ,

- Lipsitch M ,

- Swerdlow DL ,

- Atchison C ,

- Ashby D , et al

- World Health Organization

- Siegler AJ ,

- Sullivan PS ,

- Sanchez T , et al

- Hanage WP ,

- Owusu-Boaitey N , et al

- Accorsi EK ,

- Rumpler E , et al

- Griffith GJ ,

- Morris TT ,

- Tudball MJ , et al

- Meyerowitz EA ,

- Richterman A ,

- Bogoch II , et al

- McKenzie JE ,

- Kirkham J , et al

- Hirst A , et al

- Pérez-Gómez B ,

- Pastor-Barriuso R , et al

- Bendavid E ,

- Mulaney B ,

- Sood N , et al

- Kalimullah NA ,

- Osuagwu UL , et al

- Catalogue of Bias Collaboration

- Chand M , et al

- Groves RM ,

- Peytcheva E

- Redmond SM ,

- Alexander-Kisslig K ,

- Woodhall SC , et al

- Bossuyt PMM , et al

- Kim H-S , et al

- Niedrig M ,

- El Wahed AA , et al

- Leeflang MMG ,

- Bossuyt PMM ,

- Buitrago-Garcia D ,

- Ipekci AM ,

- Heron L , et al

- Kimball A ,

- Hatfield KM ,

- Arons M , et al

- Yousaf AR ,

- Chu V , et al

- Daniels CJ ,

- Greenshields JT , et al

- Vandenbroucke JP ,

- von Elm E ,

- Altman DG , et al

- Baumann L ,

- Egli-Gany D , et al

- Cheng SMS , et al

- Corman VM ,

- Kaiser M , et al

- Whitaker M ,

- Elliott J ,

- Chadeau-Hyam M

- Michelen M ,

- Manoharan L ,

- Elkheir N , et al

- Raynaud M ,

- Louis K , et al

Twitter: @dianacarbg, @Geointheworld, @nicolamlow

Contributors: DB-G, GS and NL conceptualised the project. DB-G and NL wrote the manuscript. GS and NL provided feedback. GS and NL supervised the research. All authors edited the manuscript and approved the final manuscript.