Quasi-experimental Research: What It Is, Types & Examples

Much like a true experiment, quasi-experimental research tries to demonstrate a cause-and-effect link between an independent and a dependent variable. Unlike a true experiment, however, a quasi-experiment does not rely on random assignment. Instead, subjects are sorted into groups based on non-random criteria.

What is Quasi-Experimental Research?

“Quasi” means “resembling.” In quasi-experimental research, individuals are not randomly allocated to conditions or orders of conditions, even though the independent variable is manipulated. As a result, quasi-experimental research resembles experimental research but is not a true experiment.

Quasi-experimental research avoids the directionality problem because the independent variable is manipulated before the dependent variable is measured. However, because individuals are not randomly assigned, there are likely to be other differences across conditions.

As a result, in terms of internal validity, quasi-experiments fall somewhere between correlational research and true experiments.

The key component of a true experiment is randomly allocated groups. This means that each person has an equal chance of being assigned to the experimental group, which receives the manipulation, or the control group, which does not.
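As a rough illustration of what random allocation means in practice, the sketch below shuffles a list of participants and splits it in half. The function name `randomly_assign`, the participant IDs, and the seed are assumptions made up for this example, not part of any particular study.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two equal-chance groups."""
    rng = random.Random(seed)      # a seed makes the example repeatable
    shuffled = list(participants)  # copy so the input is left untouched
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {"treatment": shuffled[:midpoint], "control": shuffled[midpoint:]}


# Hypothetical participant IDs 1..20
groups = randomly_assign(range(1, 21), seed=42)
print(groups["treatment"])
print(groups["control"])
```

A quasi-experiment skips this shuffling step: group membership is decided by something other than chance, such as which class or clinic a person already belongs to.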

Simply put, a quasi-experiment is not a true experiment: it lacks the randomly allocated groups that define one. Why is random allocation so crucial, given that it is the only distinction between quasi-experimental and true experimental research?

Let’s use an example to illustrate the point. Suppose we want to discover how a new psychological therapy affects depressed patients. In a true experiment, you would randomly split the patients on a psychiatric ward into two groups, with half receiving the new psychotherapy and the other half receiving standard depression treatment.

The physicians would then compare the outcomes of the new treatment with the results of the standard treatment to see which is more effective. Doctors, however, are unlikely to agree to this true experiment, since they may consider it unethical to give one group a treatment while withholding it from another.

A quasi-experimental study is useful in this case. Instead of allocating patients at random, you identify pre-existing groups of psychotherapists in the hospitals. Some counselors will be eager to try the new therapy, while others will prefer to stick to the old ways.

These pre-existing groups can be used to compare the symptom development of individuals who received the novel therapy with those who received the normal course of treatment, even though the groups weren’t chosen at random.

If any substantial pre-existing differences between the groups can be accounted for, you can be reasonably confident that differences in outcomes are attributable to the treatment rather than to extraneous variables.

As mentioned earlier, quasi-experimental research entails manipulating an independent variable without randomly assigning people to conditions or sequences of conditions. Nonequivalent groups designs, pretest-posttest designs, and regression discontinuity designs are a few of the essential types.

What are quasi-experimental research designs?

Quasi-experimental research designs are a type of research design that is similar to experimental designs but doesn’t give full control over the independent variable(s) like true experimental designs do.

In a quasi-experimental design, the researcher changes or watches an independent variable, but the participants are not put into groups at random. Instead, people are put into groups based on things they already have in common, like their age, gender, or how many times they have seen a certain stimulus.

Because the assignments are not random, it is harder to draw conclusions about cause and effect than in a real experiment. However, quasi-experimental designs are still useful when randomization is not possible or ethical.

The true experimental design may be impossible to accomplish or just too expensive, especially for researchers with few resources. Quasi-experimental designs enable you to investigate an issue by utilizing data that has already been paid for or gathered by others (often the government).

Because they allow better control of confounding variables than other non-experimental studies, quasi-experimental designs have higher internal validity than other non-experimental research, though lower than true experiments. Because they often rely on real-world settings and data, they also tend to have higher external validity than most true experiments.

Is quasi-experimental research quantitative or qualitative?

Quasi-experimental research is a quantitative research method. It involves numerical data collection and statistical analysis. Quasi-experimental research compares groups with different circumstances or treatments to find cause-and-effect links.

It draws statistical conclusions from quantitative data. Qualitative data can enhance quasi-experimental research by revealing participants’ experiences and opinions, but quantitative data is the method’s foundation.

Quasi-experimental research types

There are many different sorts of quasi-experimental designs. Three of the most popular are nonequivalent groups designs, regression discontinuity, and natural experiments.

In one natural experiment, a program could not afford to cover everyone who qualified, so it used a random lottery to distribute slots.

Experts were able to investigate the program’s impact by using the people who enrolled as a treatment group and those who qualified but did not win the lottery as a control group.

How QuestionPro helps in quasi-experimental research?

QuestionPro can be a useful tool in quasi-experimental research because it includes features that can assist you in designing and analyzing your research study. Here are some ways in which QuestionPro can help in quasi-experimental research:

- Design surveys

- Randomize participants

- Collect data over time

- Analyze data

- Collaborate with your team

With QuestionPro, you have access to a mature market research platform that helps you collect and analyze the insights that matter most. InsightsHub, its unified hub for data management, lets you organize, explore, search, and discover your research data in one repository.

Quasi-Experimental Research Design – Types, Methods

Quasi-Experimental Design

Quasi-experimental design is a research method that seeks to evaluate the causal relationships between variables, but without the full control over the independent variable(s) that is available in a true experimental design.

In a quasi-experimental design, the researcher uses an existing group of participants that is not randomly assigned to the experimental and control groups. Instead, the groups are selected based on pre-existing characteristics or conditions, such as age, gender, or the presence of a certain medical condition.

Types of Quasi-Experimental Design

There are several types of quasi-experimental designs that researchers use to study causal relationships between variables. Here are some of the most common types:

Non-Equivalent Control Group Design

This design involves selecting two existing groups of participants that are as similar as possible apart from the independent variable(s) that the researcher is testing. One group receives the treatment or intervention being studied, while the other group does not. The two groups are then compared to see if there are any significant differences in the outcomes.

Interrupted Time-Series Design

This design involves collecting data on the dependent variable(s) over a period of time, both before and after an intervention or event. The researcher can then determine whether there was a significant change in the dependent variable(s) following the intervention or event.

Pretest-Posttest Design

This design involves measuring the dependent variable(s) before and after an intervention or event, but without a control group. This design can be useful for determining whether the intervention or event had an effect, but it does not allow for control over other factors that may have influenced the outcomes.

Regression Discontinuity Design

This design involves assigning participants to the intervention based on a specific cutoff point on a continuous variable, such as a test score. Participants just above and just below the cutoff point are then compared to determine whether the intervention or event had an effect.

Natural Experiments

This design involves studying the effects of an intervention or event that occurs naturally, without the researcher’s intervention. For example, a researcher might study the effects of a new law or policy that affects certain groups of people. This design is useful when true experiments are not feasible or ethical.

Data Analysis Methods

Here are some data analysis methods that are commonly used in quasi-experimental designs:

Descriptive Statistics

This method involves summarizing the data collected during a study using measures such as mean, median, mode, range, and standard deviation. Descriptive statistics can help researchers identify trends or patterns in the data, and can also be useful for identifying outliers or anomalies.
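As a small sketch of these summary measures, the snippet below uses Python's standard `statistics` module on a made-up list of test scores. The numbers are hypothetical and exist only to show the calculations.

```python
import statistics

# Hypothetical test scores, invented for illustration
scores = [62, 70, 70, 75, 81, 88, 94]

summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "range": max(scores) - min(scores),
    "stdev": statistics.stdev(scores),  # sample standard deviation
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```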

Inferential Statistics

This method involves using statistical tests to determine whether the results of a study are statistically significant. Inferential statistics can help researchers make generalizations about a population based on the sample data collected during the study. Common statistical tests used in quasi-experimental designs include t-tests, ANOVA, and regression analysis.
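To make the idea of a t-test concrete, here is a minimal sketch of Welch's two-sample t statistic, one common variant that does not assume equal variances. The function name `welch_t` and both samples are assumptions made up for this example. The sketch stops at the test statistic and degrees of freedom; turning them into a p-value requires a t distribution, which a statistics library would normally supply.

```python
import math
import statistics


def welch_t(sample_a, sample_b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Squared standard error of each sample mean
    se_sq_a = statistics.variance(sample_a) / len(sample_a)
    se_sq_b = statistics.variance(sample_b) / len(sample_b)
    t = (mean_a - mean_b) / math.sqrt(se_sq_a + se_sq_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (se_sq_a + se_sq_b) ** 2 / (
        se_sq_a ** 2 / (len(sample_a) - 1) + se_sq_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Hypothetical outcome scores for an intervention group and a comparison group
intervention = [78, 85, 82, 90, 88]
comparison = [72, 75, 80, 70, 78]

t_stat, dof = welch_t(intervention, comparison)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

In a quasi-experiment, a significant difference on a test like this does not by itself show that the intervention caused it, because the groups may have differed before the intervention.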

Propensity Score Matching

This method is used to reduce bias in quasi-experimental designs by matching participants in the intervention group with participants in the control group who have similar characteristics. This can help to reduce the impact of confounding variables that may affect the study’s results.
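The matching step can be sketched as follows, assuming the propensity scores (each participant's estimated probability of receiving the intervention) have already been computed, for example with a logistic regression. The function name `match_on_propensity`, the participant IDs, the scores, and the caliper of 0.1 are all hypothetical. This is a greedy one-to-one nearest-neighbor match without replacement, which is only one of several matching strategies.

```python
def match_on_propensity(treated, controls, caliper=0.1):
    """Greedy 1:1 nearest-neighbor matching on precomputed propensity scores.

    treated, controls: dicts mapping participant ID -> propensity score.
    Returns a list of (treated_id, control_id) pairs. Treated participants
    with no control within the caliper are left unmatched.
    """
    available = dict(controls)  # controls not yet used in a pair
    pairs = []
    for t_id, t_score in sorted(treated.items(), key=lambda item: item[1]):
        if not available:
            break
        # Closest remaining control by absolute score difference
        c_id = min(available, key=lambda cid: abs(available[cid] - t_score))
        if abs(available[c_id] - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # match without replacement
    return pairs


# Hypothetical scores, invented for illustration
treated_scores = {"T1": 0.62, "T2": 0.35, "T3": 0.90}
control_scores = {"C1": 0.30, "C2": 0.58, "C3": 0.45, "C4": 0.10}

pairs = match_on_propensity(treated_scores, control_scores)
print(pairs)
```

Outcomes are then compared within the matched pairs. Matching can only balance the characteristics that went into the score, so unmeasured confounders remain a concern.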

Difference-in-differences Analysis

This method compares the change in outcomes over time in a group that received an intervention with the change over the same period in a comparison group that did not. The difference between the two changes is used to estimate whether the intervention had an impact on the target population.
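In its simplest form, the calculation is a difference of two differences in group means. The sketch below shows it with made-up numbers; the function name and the data are assumptions for illustration, and real analyses usually estimate the same quantity with a regression so that standard errors and covariates can be included.

```python
from statistics import mean


def difference_in_differences(treated_pre, treated_post, control_pre, control_post):
    """Change in the treated group minus change in the comparison group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical outcome measurements before and after an intervention
did = difference_in_differences(
    treated_pre=[50, 52, 48],
    treated_post=[60, 63, 57],
    control_pre=[49, 51, 50],
    control_post=[53, 55, 54],
)
print(did)
```

The estimate is only credible under the parallel trends assumption: without the intervention, both groups would have changed by the same amount.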

Interrupted Time Series Analysis

This method is used to examine the impact of an intervention or treatment over time by comparing data collected before and after the intervention or treatment. This method can help researchers determine whether an intervention had a significant impact on the target population.
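One simple way to sketch this comparison is to fit a straight-line trend to the pre-intervention observations, project it forward, and measure how far the post-intervention observations sit from that projection. The function names and the series below are hypothetical, and this is a simplification: a full segmented regression would also model a change in slope and account for autocorrelation.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def interrupted_time_series_gap(series, intervention_index):
    """Average gap between observed post-intervention values and the
    values projected from the pre-intervention trend."""
    pre_times = list(range(intervention_index))
    slope, intercept = fit_line(pre_times, series[:intervention_index])
    gaps = [
        series[t] - (slope * t + intercept)
        for t in range(intervention_index, len(series))
    ]
    return sum(gaps) / len(gaps)


# Hypothetical monthly measurements; the intervention starts at index 5
series = [10, 12, 14, 16, 18, 25, 27, 29]
gap = interrupted_time_series_gap(series, intervention_index=5)
print(f"average shift relative to the pre-intervention trend: {gap:.2f}")
```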

Regression Discontinuity Analysis

This method is used to compare the outcomes of participants who fall on either side of a predetermined cutoff point. This method can help researchers determine whether an intervention had a significant impact on the target population.
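A minimal sketch of this comparison fits a separate straight line on each side of the cutoff, using only observations within a chosen bandwidth, and takes the gap between the two lines at the cutoff as the estimated effect. The function names, the cutoff of 50, the bandwidth of 5, and the data are all assumptions made up for this example, which treats participants at or above the cutoff as receiving the intervention.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def rd_estimate(scores, outcomes, cutoff, bandwidth):
    """Jump in the fitted outcome at the cutoff, using local linear fits."""
    data = list(zip(scores, outcomes))
    below = [(s, y) for s, y in data if cutoff - bandwidth <= s < cutoff]
    above = [(s, y) for s, y in data if cutoff <= s <= cutoff + bandwidth]
    slope_b, intercept_b = fit_line(*zip(*below))
    slope_a, intercept_a = fit_line(*zip(*above))
    return (slope_a * cutoff + intercept_a) - (slope_b * cutoff + intercept_b)


# Hypothetical data: the assignment score and a later outcome measure
scores = [45, 46, 47, 48, 49, 50, 51, 52, 53, 54]
outcomes = [32.5, 33.0, 33.5, 34.0, 34.5, 39.0, 39.5, 40.0, 40.5, 41.0]

effect = rd_estimate(scores, outcomes, cutoff=50, bandwidth=5)
print(f"estimated jump at the cutoff: {effect:.2f}")
```

The choice of bandwidth matters: a narrow window keeps the two groups comparable but leaves fewer observations, so results are usually checked across several bandwidths.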

Steps in Quasi-Experimental Design

Here are the general steps involved in conducting a quasi-experimental design:

- Identify the research question: Determine the research question and the variables that will be investigated.

- Choose the design: Choose the appropriate quasi-experimental design to address the research question. Examples include the pretest-posttest design, non-equivalent control group design, regression discontinuity design, and interrupted time series design.

- Select the participants: Select the participants who will be included in the study. Participants should be selected based on specific criteria relevant to the research question.

- Measure the variables: Measure the variables that are relevant to the research question. This may involve using surveys, questionnaires, tests, or other measures.

- Implement the intervention or treatment: Implement the intervention or treatment to the participants in the intervention group. This may involve training, education, counseling, or other interventions.

- Collect data: Collect data on the dependent variable(s) before and after the intervention. Data collection may also include collecting data on other variables that may impact the dependent variable(s).

- Analyze the data: Analyze the data collected to determine whether the intervention had a significant impact on the dependent variable(s).

- Draw conclusions: Draw conclusions about the relationship between the independent and dependent variables. If the results suggest a causal relationship, then appropriate recommendations may be made based on the findings.

Quasi-Experimental Design Examples

Here are some examples of real-world quasi-experimental designs:

- Evaluating the impact of a new teaching method: In this study, a group of students are taught using a new teaching method, while another group is taught using the traditional method. The test scores of both groups are compared before and after the intervention to determine whether the new teaching method had a significant impact on student performance.

- Assessing the effectiveness of a public health campaign: In this study, a public health campaign is launched to promote healthy eating habits among a targeted population. The behavior of the population is compared before and after the campaign to determine whether the intervention had a significant impact on the target behavior.

- Examining the impact of a new medication: In this study, a group of patients is given a new medication, while another group is given a placebo, with the groups formed without random assignment. The outcomes of both groups are compared to determine whether the new medication had a significant impact on the targeted health condition.

- Evaluating the effectiveness of a job training program: In this study, a group of unemployed individuals is enrolled in a job training program, while another group is not enrolled in any program. The employment rates of both groups are compared before and after the intervention to determine whether the training program had a significant impact on the employment rates of the participants.

- Assessing the impact of a new policy: In this study, a new policy is implemented in a particular area, while another area does not have the new policy. The outcomes of both areas are compared before and after the intervention to determine whether the new policy had a significant impact on the targeted behavior or outcome.

Applications of Quasi-Experimental Design

Here are some applications of quasi-experimental design:

- Educational research: Quasi-experimental designs are used to evaluate the effectiveness of educational interventions, such as new teaching methods, technology-based learning, or educational policies.

- Health research: Quasi-experimental designs are used to evaluate the effectiveness of health interventions, such as new medications, public health campaigns, or health policies.

- Social science research: Quasi-experimental designs are used to investigate the impact of social interventions, such as job training programs, welfare policies, or criminal justice programs.

- Business research: Quasi-experimental designs are used to evaluate the impact of business interventions, such as marketing campaigns, new products, or pricing strategies.

- Environmental research: Quasi-experimental designs are used to evaluate the impact of environmental interventions, such as conservation programs, pollution control policies, or renewable energy initiatives.

When to use Quasi-Experimental Design

Here are some situations where quasi-experimental designs may be appropriate:

- When the research question involves investigating the effectiveness of an intervention, policy, or program: In situations where it is not feasible or ethical to randomly assign participants to intervention and control groups, quasi-experimental designs can be used to evaluate the impact of the intervention on the targeted outcome.

- When the sample size is small: In situations where the sample size is small, it may be difficult to randomly assign participants to intervention and control groups. Quasi-experimental designs can be used to investigate the impact of an intervention without requiring a large sample size.

- When the research question involves investigating a naturally occurring event: In some situations, researchers may be interested in investigating the impact of a naturally occurring event, such as a natural disaster or a major policy change. Quasi-experimental designs can be used to evaluate the impact of the event on the targeted outcome.

- When the research question involves investigating a long-term intervention: In situations where the intervention or program is long-term, it may be difficult to randomly assign participants to intervention and control groups for the entire duration of the intervention. Quasi-experimental designs can be used to evaluate the impact of the intervention over time.

- When the research question involves investigating the impact of a variable that cannot be manipulated: In some situations, it may not be possible or ethical to manipulate a variable of interest. Quasi-experimental designs can be used to investigate the relationship between the variable and the targeted outcome.

Purpose of Quasi-Experimental Design

The purpose of quasi-experimental design is to investigate the causal relationship between two or more variables when it is not feasible or ethical to conduct a randomized controlled trial (RCT). Quasi-experimental designs attempt to emulate the randomized controlled trial by constructing intervention and control groups that are as comparable as possible.

The key purpose of quasi-experimental design is to evaluate the impact of an intervention, policy, or program on a targeted outcome while controlling for potential confounding factors that may affect the outcome. Quasi-experimental designs aim to answer questions such as: Did the intervention cause the change in the outcome? Would the outcome have changed without the intervention? And was the intervention effective in achieving its intended goals?

Quasi-experimental designs are useful in situations where randomized controlled trials are not feasible or ethical. They provide researchers with an alternative method to evaluate the effectiveness of interventions, policies, and programs in real-life settings. Quasi-experimental designs can also help inform policy and practice by providing valuable insights into the causal relationships between variables.

Overall, the purpose of quasi-experimental design is to provide a rigorous method for evaluating the impact of interventions, policies, and programs while controlling for potential confounding factors that may affect the outcome.

Advantages of Quasi-Experimental Design

Quasi-experimental designs have several advantages over other research designs, such as:

- Greater external validity: Quasi-experimental designs are more likely to have greater external validity than laboratory experiments because they are conducted in naturalistic settings. This means that the results are more likely to generalize to real-world situations.

- Ethical considerations: Quasi-experimental designs often involve naturally occurring events, such as natural disasters or policy changes. This means that researchers do not need to manipulate variables themselves, which avoids the ethical concerns that such manipulation can raise.

- More practical: Quasi-experimental designs are often more practical than experimental designs because they are less expensive and easier to conduct. They can also be used to evaluate programs or policies that have already been implemented, which can save time and resources.

- No random assignment: Quasi-experimental designs do not require random assignment, which can be difficult or impossible in some cases, such as when studying the effects of a natural disaster. This means that researchers can still make causal inferences, although they must use statistical techniques to control for potential confounding variables.

- Greater generalizability: Quasi-experimental designs are often more generalizable than experimental designs because they include a wider range of participants and conditions. This can make the results more applicable to different populations and settings.

Limitations of Quasi-Experimental Design

There are several limitations associated with quasi-experimental designs, which include:

- Lack of Randomization: Quasi-experimental designs do not involve randomization of participants into groups, which means that the groups being studied may differ in important ways that could affect the outcome of the study. This can lead to problems with internal validity and limit the ability to make causal inferences.

- Selection Bias: Quasi-experimental designs may suffer from selection bias because participants are not randomly assigned to groups. Participants may self-select into groups or be assigned based on pre-existing characteristics, which may introduce bias into the study.

- History and Maturation: Quasi-experimental designs are susceptible to history and maturation effects, where the passage of time or other events may influence the outcome of the study.

- Lack of Control: Quasi-experimental designs may lack control over extraneous variables that could influence the outcome of the study. This can limit the ability to draw causal inferences from the study.

- Limited Generalizability: Quasi-experimental designs may have limited generalizability because the results may only apply to the specific population and context being studied.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Statistics By Jim

Making statistics intuitive

Quasi Experimental Design Overview & Examples

By Jim Frost

What is a Quasi Experimental Design?

A quasi experimental design is a method for identifying causal relationships that does not randomly assign participants to the experimental groups. Instead, researchers use a non-random process. For example, they might use an eligibility cutoff score or preexisting groups to determine who receives the treatment.

Quasi-experimental research is a design that closely resembles experimental research but is different. The term “quasi” means “resembling,” so you can think of it as a cousin to actual experiments. In these studies, researchers can manipulate an independent variable — that is, they change one factor to see what effect it has. However, unlike true experimental research, participants are not randomly assigned to different groups.

When to Use Quasi-Experimental Design

Researchers typically use a quasi-experimental design because they can’t randomize due to practical or ethical concerns. For example:

- Practical Constraints : A school interested in testing a new teaching method can only implement it in preexisting classes and cannot randomly assign students.

- Ethical Concerns : A medical study might not be able to randomly assign participants to a treatment group for an experimental medication when they are already taking a proven drug.

Quasi-experimental designs also come in handy when researchers want to study the effects of naturally occurring events, like policy changes or environmental shifts, where they can’t control who is exposed to the treatment.

Quasi-experimental designs occupy a unique position in the spectrum of research methodologies, sitting between observational studies and true experiments. This middle ground offers a blend of both worlds, addressing some limitations of purely observational studies while navigating the constraints often accompanying true experiments.

A significant advantage of quasi-experimental research over purely observational studies and correlational research is that it addresses the issue of directionality, determining which variable is the cause and which is the effect. In quasi-experiments, an intervention typically occurs during the investigation, and the researchers record outcomes before and after it, increasing the confidence that it causes the observed changes.

However, it’s crucial to recognize its limitations as well. Controlling confounding variables is a larger concern for a quasi-experimental design than a true experiment because it lacks random assignment.

In sum, quasi-experimental designs offer a valuable research approach when random assignment is not feasible, providing a more structured and controlled framework than observational studies while acknowledging and attempting to address potential confounders.

Types of Quasi-Experimental Designs and Examples

Quasi-experimental studies use various methods, depending on the scenario.

Natural Experiments

This design uses naturally occurring events or changes to create the treatment and control groups. Researchers compare outcomes between those whom the event affected and those it did not affect. Analysts use statistical controls to account for confounders that the researchers must also measure.

Natural experiments are related to observational studies, but they allow for a clearer causality inference because the external event or policy change provides both a form of quasi-random group assignment and a definite start date for the intervention.

For example, in a natural experiment utilizing a quasi-experimental design, researchers study the impact of a significant economic policy change on small business growth. The policy is implemented in one state but not in neighboring states. This scenario creates an unplanned experimental setup, where the state with the new policy serves as the treatment group, and the neighboring states act as the control group.

Researchers are primarily interested in small business growth rates but need to record various confounders that can impact growth rates. Hence, they record state economic indicators, investment levels, and employment figures. By recording these metrics across the states, they can include them in the model as covariates and control them statistically. This method allows researchers to estimate differences in small business growth due to the policy itself, separate from the various confounders.

Nonequivalent Groups Design

This method involves matching existing groups that are similar but not identical. Researchers attempt to find groups that are as equivalent as possible, particularly for factors likely to affect the outcome.

For instance, researchers use a nonequivalent groups quasi-experimental design to evaluate the effectiveness of a new teaching method in improving students’ mathematics performance. A school district considering the teaching method is planning the study. Students are already divided into schools, preventing random assignment.

The researchers matched two schools with similar demographics, baseline academic performance, and resources. The school using the traditional methodology is the control, while the other uses the new approach. Researchers are evaluating differences in educational outcomes between the two methods.

They perform a pretest to identify differences between the schools that might affect the outcome and include them as covariates to control for confounding. They also record outcomes before and after the intervention to have a larger context for the changes they observe.

Regression Discontinuity

This process assigns subjects to a treatment or control group based on a predetermined cutoff point (e.g., a test score). The analysis primarily focuses on participants near the cutoff point, as they are likely similar except for the treatment received. By comparing participants just above and below the cutoff, the design controls for confounders that vary smoothly around the cutoff.

For example, in a regression discontinuity quasi-experimental design focusing on a new medical treatment for depression, researchers use depression scores as the cutoff point. Individuals with depression scores just above a certain threshold are assigned to receive the latest treatment, while those just below the threshold do not receive it. This method creates two closely matched groups: one that barely qualifies for treatment and one that barely misses out.

By comparing the mental health outcomes of these two groups over time, researchers can assess the effectiveness of the new treatment. The assumption is that the only significant difference between the groups is whether they received the treatment, thereby isolating its impact on depression outcomes.

Controlling Confounders in a Quasi-Experimental Design

Accounting for confounding variables is a challenging but essential task for a quasi-experimental design.

In a true experiment, the random assignment process equalizes confounders across the groups to nullify their overall effect. It’s the gold standard because it works on all confounders, known and unknown.

Unfortunately, the lack of random assignment can allow differences between the groups to exist before the intervention. These confounding factors might ultimately explain the results rather than the intervention.

Consequently, researchers must use other methods to equalize the groups roughly using matching and cutoff values or statistically adjust for preexisting differences they measure to reduce the impact of confounders.

A key strength of quasi-experiments is their frequent use of “pre-post testing.” This approach involves conducting initial tests before the intervention to check for preexisting differences between groups that could impact the study’s outcome. By identifying these variables early on and including them as covariates, researchers can more effectively control potential confounders in their statistical analysis.

Additionally, researchers frequently track outcomes before and after the intervention to better understand the context for changes they observe.

Statisticians consider these methods to be less effective than randomization. Hence, quasi-experiments fall somewhere in the middle when it comes to internal validity, or how well the study can identify causal relationships versus mere correlation. They’re more conclusive than correlational studies but not as solid as true experiments.

In conclusion, quasi-experimental designs offer researchers a versatile and practical approach when random assignment is not feasible. This methodology bridges the gap between controlled experiments and observational studies, providing a valuable tool for investigating cause-and-effect relationships in real-world settings. Researchers can address ethical and logistical constraints by understanding and leveraging the different types of quasi-experimental designs while still obtaining insightful and meaningful results.

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.


Chapter 7: Nonexperimental Research

Quasi-Experimental Research

Learning Objectives

- Explain what quasi-experimental research is and distinguish it clearly from both experimental and correlational research.

- Describe three different types of quasi-experimental research designs (nonequivalent groups, pretest-posttest, and interrupted time series) and identify examples of each one.

The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. Although the independent variable is manipulated, participants are not randomly assigned to conditions or orders of conditions (Cook & Campbell, 1979). [1] Because the independent variable is manipulated before the dependent variable is measured, quasi-experimental research eliminates the directionality problem. But because participants are not randomly assigned—making it likely that there are other differences between conditions—quasi-experimental research does not eliminate the problem of confounding variables. In terms of internal validity, therefore, quasi-experiments are generally somewhere between correlational studies and true experiments.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment—perhaps a type of psychotherapy or an educational intervention. There are many different kinds of quasi-experiments, but we will discuss just a few of the most common ones here.

Nonequivalent Groups Design

Recall that when participants in a between-subjects experiment are randomly assigned to conditions, the resulting groups are likely to be quite similar. In fact, researchers consider them to be equivalent. When participants are not randomly assigned to conditions, however, the resulting groups are likely to be dissimilar in some ways. For this reason, researchers consider them to be nonequivalent. A nonequivalent groups design , then, is a between-subjects design in which participants have not been randomly assigned to conditions.

Imagine, for example, a researcher who wants to evaluate a new method of teaching fractions to third graders. One way would be to conduct a study with a treatment group consisting of one class of third-grade students and a control group consisting of another class of third-grade students. This design would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. For example, the parents of higher achieving or more motivated students might have been more likely to request that their children be assigned to Ms. Williams’s class. Or the principal might have assigned the “troublemakers” to Mr. Jones’s class because he is a stronger disciplinarian. Of course, the teachers’ styles, and even the classroom environments, might be very different and might cause different levels of achievement or motivation among the students. If at the end of the study there was a difference in the two classes’ knowledge of fractions, it might have been caused by the difference between the teaching methods—but it might have been caused by any of these confounding variables.

Of course, researchers using a nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables. But without true random assignment of the students to conditions, there remains the possibility of other important confounding variables that the researcher was not able to control.

Pretest-Posttest Design

In a pretest-posttest design , the dependent variable is measured once before the treatment is implemented and once after it is implemented. Imagine, for example, a researcher who is interested in the effectiveness of an antidrug education program on elementary school students’ attitudes toward illegal drugs. The researcher could measure the attitudes of students at a particular elementary school during one week, implement the antidrug program during the next week, and finally, measure their attitudes again the following week. The pretest-posttest design is much like a within-subjects experiment in which each participant is tested first under the control condition and then under the treatment condition. It is unlike a within-subjects experiment, however, in that the order of conditions is not counterbalanced because it typically is not possible for a participant to be tested in the treatment condition first and then in an “untreated” control condition.

If the average posttest score is better than the average pretest score, then it makes sense to conclude that the treatment might be responsible for the improvement. Unfortunately, one often cannot conclude this with a high degree of certainty because there may be other explanations for why the posttest scores are better. One category of alternative explanations goes under the name of history . Other things might have happened between the pretest and the posttest. Perhaps an antidrug program aired on television and many of the students watched it, or perhaps a celebrity died of a drug overdose and many of the students heard about it. Another category of alternative explanations goes under the name of maturation . Participants might have changed between the pretest and the posttest in ways that they were going to anyway because they are growing and learning. If it were a yearlong program, participants might become less impulsive or better reasoners and this might be responsible for the change.

Another alternative explanation for a change in the dependent variable in a pretest-posttest design is regression to the mean . This refers to the statistical fact that an individual who scores extremely on a variable on one occasion will tend to score less extremely on the next occasion. For example, a bowler with a long-term average of 150 who suddenly bowls a 220 will almost certainly score lower in the next game. Her score will “regress” toward her mean score of 150. Regression to the mean can be a problem when participants are selected for further study because of their extreme scores. Imagine, for example, that only students who scored especially low on a test of fractions are given a special training program and then retested. Regression to the mean all but guarantees that their scores will be higher even if the training program has no effect. A closely related concept—and an extremely important one in psychological research—is spontaneous remission . This is the tendency for many medical and psychological problems to improve over time without any form of treatment. The common cold is a good example. If one were to measure symptom severity in 100 common cold sufferers today, give them a bowl of chicken soup every day, and then measure their symptom severity again in a week, they would probably be much improved. This does not mean that the chicken soup was responsible for the improvement, however, because they would have been much improved without any treatment at all. The same is true of many psychological problems. A group of severely depressed people today is likely to be less depressed on average in 6 months. In reviewing the results of several studies of treatments for depression, researchers Michael Posternak and Ivan Miller found that participants in waitlist control conditions improved an average of 10 to 15% before they received any treatment at all (Posternak & Miller, 2001) [2] . 
Thus one must generally be very cautious about inferring causality from pretest-posttest designs.
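Regression to the mean can be illustrated with a small simulation. The sketch below is purely hypothetical: the ability and noise distributions, the sample size, and the 10% selection rule are assumptions chosen for the example. Each simulated student has a stable ability plus independent test-day noise on two tests, and no treatment is applied between them.

```python
import random

rng = random.Random(1)
N = 5000

# Stable ability plus independent noise on each test; no treatment at all.
ability = [rng.gauss(70, 8) for _ in range(N)]
pretest = [a + rng.gauss(0, 8) for a in ability]
posttest = [a + rng.gauss(0, 8) for a in ability]

# Select the students with the lowest 10% of pretest scores.
threshold = sorted(pretest)[N // 10]
selected = [i for i in range(N) if pretest[i] < threshold]

mean_pre = sum(pretest[i] for i in selected) / len(selected)
mean_post = sum(posttest[i] for i in selected) / len(selected)
gain = mean_post - mean_pre

print(f"Selected group pretest mean:  {mean_pre:.1f}")
print(f"Selected group posttest mean: {mean_post:.1f}")
print(f"Apparent gain with no treatment: {gain:.1f}")
```

Because the selected students were chosen partly for bad luck on the first test, their average rises on the second test even though nothing was done to them.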

Does Psychotherapy Work?

Early studies on the effectiveness of psychotherapy tended to use pretest-posttest designs. In a classic 1952 article, researcher Hans Eysenck summarized the results of 24 such studies showing that about two thirds of patients improved between the pretest and the posttest (Eysenck, 1952) [3] . But Eysenck also compared these results with archival data from state hospital and insurance company records showing that similar patients recovered at about the same rate without receiving psychotherapy. This parallel suggested to Eysenck that the improvement that patients showed in the pretest-posttest studies might be no more than spontaneous remission. Note that Eysenck did not conclude that psychotherapy was ineffective. He merely concluded that there was no evidence that it was, and he wrote of “the necessity of properly planned and executed experimental studies into this important field” (p. 323). You can read the entire article here: Classics in the History of Psychology .

Fortunately, many other researchers took up Eysenck’s challenge, and by 1980 hundreds of experiments had been conducted in which participants were randomly assigned to treatment and control conditions, and the results were summarized in a classic book by Mary Lee Smith, Gene Glass, and Thomas Miller (Smith, Glass, & Miller, 1980) [4] . They found that overall psychotherapy was quite effective, with about 80% of treatment participants improving more than the average control participant. Subsequent research has focused more on the conditions under which different types of psychotherapy are more or less effective.

Interrupted Time Series Design

A variant of the pretest-posttest design is the interrupted time-series design. A time series is a set of measurements taken at intervals over a period of time. For example, a manufacturing company might measure its workers’ productivity each week for a year. In an interrupted time-series design, a time series like this one is “interrupted” by a treatment. In one classic example, the treatment was the reduction of the work shifts in a factory from 10 hours to 8 hours (Cook & Campbell, 1979) [5]. Because productivity increased rather quickly after the shortening of the work shifts, and because it remained elevated for many months afterward, the researcher concluded that the shortening of the shifts caused the increase in productivity. Notice that the interrupted time-series design is like a pretest-posttest design in that it includes measurements of the dependent variable both before and after the treatment. It is unlike the pretest-posttest design, however, in that it includes multiple pretest and posttest measurements.

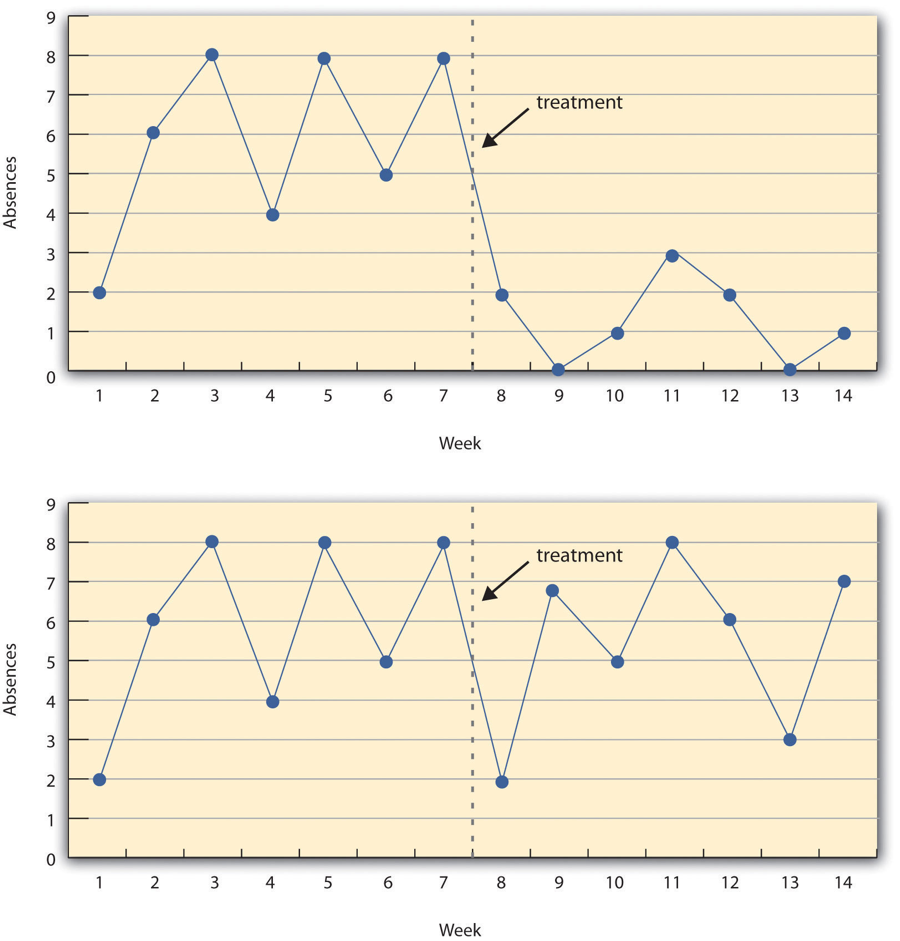

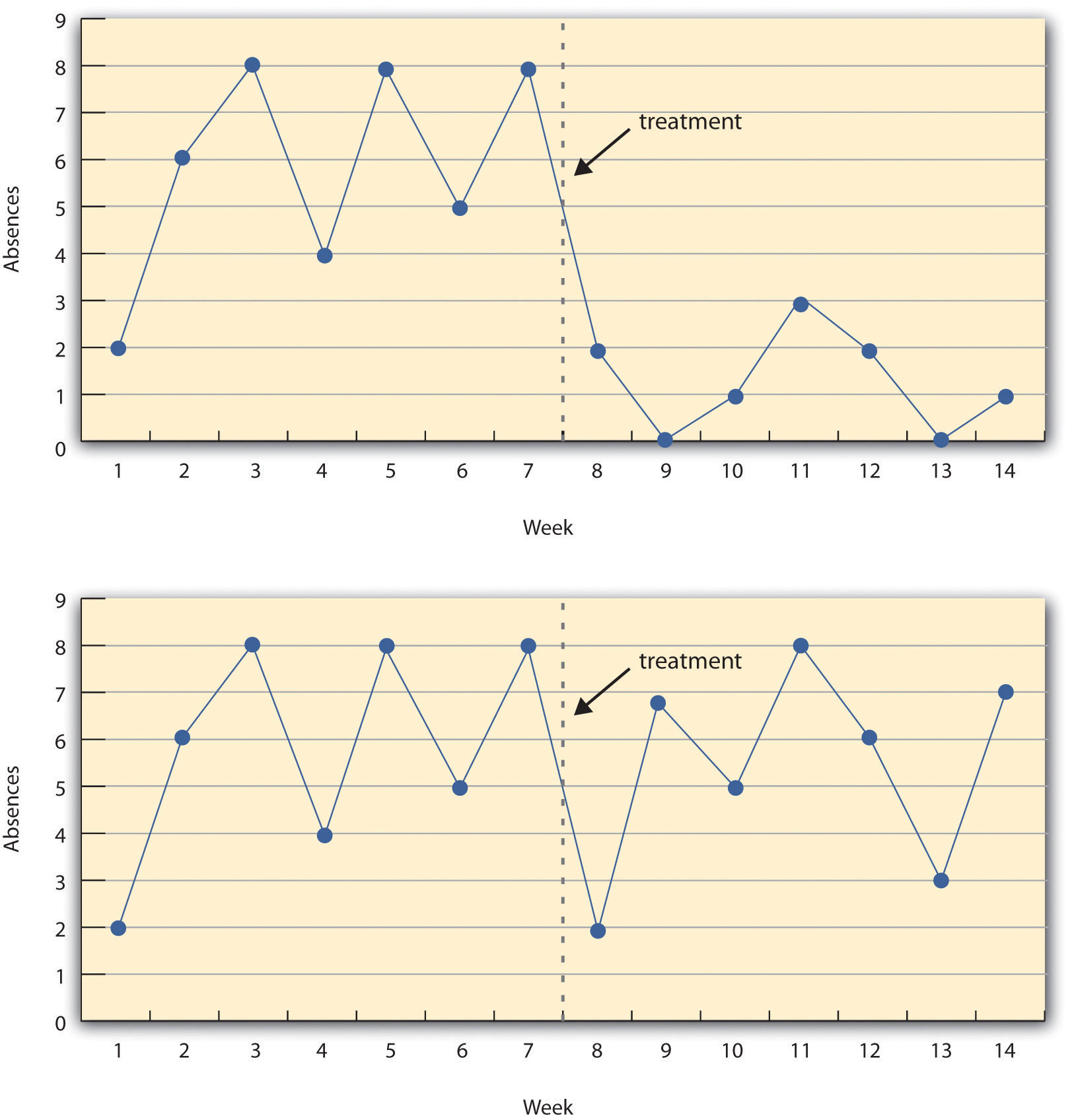

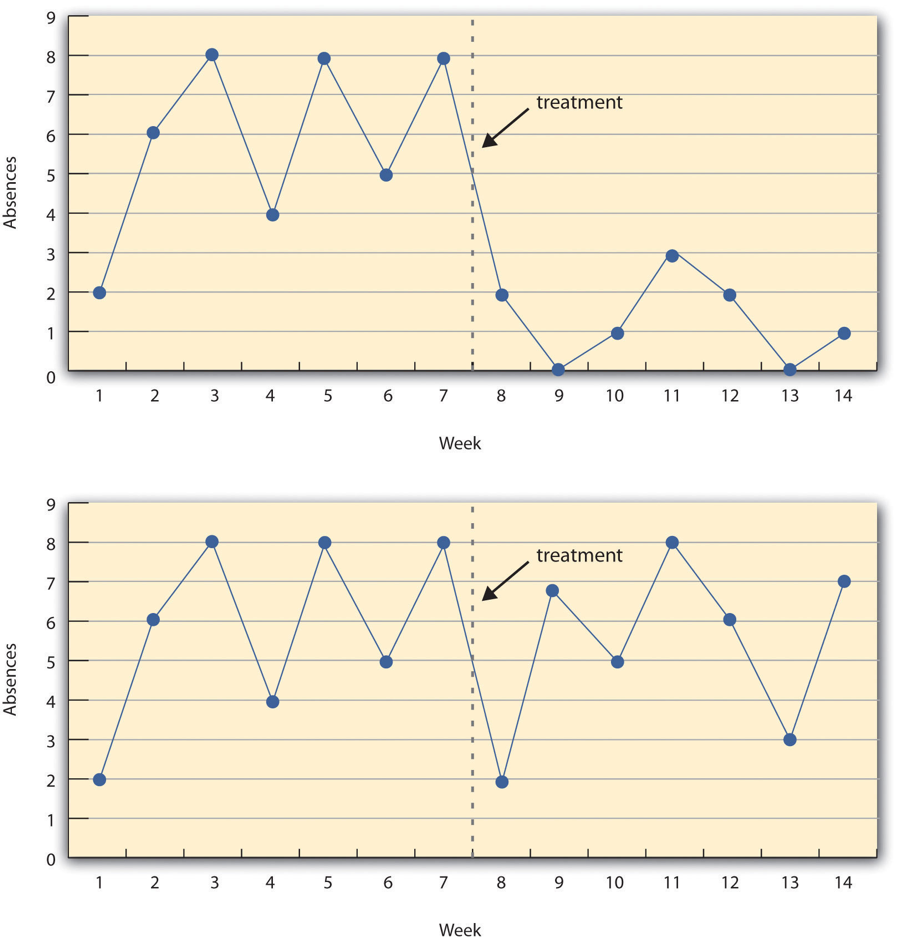

Figure 7.3 shows data from a hypothetical interrupted time-series study. The dependent variable is the number of student absences per week in a research methods course. The treatment is that the instructor begins publicly taking attendance each day so that students know that the instructor is aware of who is present and who is absent. The top panel of Figure 7.3 shows how the data might look if this treatment worked. There is a consistently high number of absences before the treatment, and there is an immediate and sustained drop in absences after the treatment. The bottom panel of Figure 7.3 shows how the data might look if this treatment did not work. On average, the number of absences after the treatment is about the same as the number before. This figure also illustrates an advantage of the interrupted time-series design over a simpler pretest-posttest design. If there had been only one measurement of absences before the treatment at Week 7 and one afterward at Week 8, then it would have looked as though the treatment were responsible for the reduction. The multiple measurements both before and after the treatment suggest that the reduction between Weeks 7 and 8 is nothing more than normal week-to-week variation.
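The contrast between a single before-and-after comparison and the full time series can be made concrete with a short sketch. The weekly absence counts below are invented to resemble the pattern described for the two panels; they are not the data behind the figure, and `its_summary` is a hypothetical helper.

```python
from statistics import mean


def its_summary(series, first_treated_week):
    """Compare a single before/after pair with the averages of all weeks."""
    pre = series[: first_treated_week - 1]
    post = series[first_treated_week - 1:]
    return {
        "single_point_change": post[0] - pre[-1],   # e.g. Week 8 minus Week 7
        "mean_change": mean(post) - mean(pre),      # all post weeks vs all pre weeks
    }


# Hypothetical absences per week for 14 weeks; treatment starts in Week 8.
treatment_worked = [6, 5, 7, 8, 4, 6, 7, 2, 1, 3, 0, 2, 1, 2]
treatment_failed = [6, 5, 7, 4, 8, 5, 8, 4, 6, 7, 5, 8, 6, 5]

worked = its_summary(treatment_worked, first_treated_week=8)
failed = its_summary(treatment_failed, first_treated_week=8)
print(worked)
print(failed)
```

In the second series, the drop from Week 7 to Week 8 looks large on its own, but the average across all weeks barely moves, which is the pattern the multiple measurements are meant to reveal.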

Combination Designs

A type of quasi-experimental design that is generally better than either the nonequivalent groups design or the pretest-posttest design is one that combines elements of both. There is a treatment group that is given a pretest, receives a treatment, and then is given a posttest. But at the same time there is a control group that is given a pretest, does not receive the treatment, and then is given a posttest. The question, then, is not simply whether participants who receive the treatment improve but whether they improve more than participants who do not receive the treatment.

Imagine, for example, that students in one school are given a pretest on their attitudes toward drugs, then are exposed to an antidrug program, and finally are given a posttest. Students in a similar school are given the pretest, not exposed to an antidrug program, and finally are given a posttest. Again, if students in the treatment condition become more negative toward drugs, this change in attitude could be an effect of the treatment, but it could also be a matter of history or maturation. If it really is an effect of the treatment, then students in the treatment condition should become more negative than students in the control condition. But if it is a matter of history (e.g., news of a celebrity drug overdose) or maturation (e.g., improved reasoning), then students in the two conditions would be likely to show similar amounts of change. This type of design does not completely eliminate the possibility of confounding variables, however. Something could occur at one of the schools but not the other (e.g., a student drug overdose), so students at the first school would be affected by it while students at the other school would not.
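The logic of asking whether the treatment group changes more than the control group can be written as a simple calculation, often called a difference-in-differences. The sketch below uses invented attitude scores (higher means more negative toward drugs) and a hypothetical helper name; it only shows the arithmetic, not a full statistical analysis.

```python
from statistics import mean


def difference_in_differences(treat_pre, treat_post, control_pre, control_post):
    """Change in the treatment group minus change in the control group."""
    treat_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treat_change - control_change


# Hypothetical attitude scores for four students at each school.
treat_pre = [3.0, 3.5, 2.5, 3.0]
treat_post = [4.5, 4.0, 4.0, 4.5]
control_pre = [3.0, 3.0, 2.5, 3.5]
control_post = [3.5, 3.5, 3.0, 4.0]

did = difference_in_differences(treat_pre, treat_post, control_pre, control_post)
print(f"Extra change in the treatment school: {did:.2f}")
```

Here both schools become more negative toward drugs, which could reflect history or maturation, and only the extra change in the treatment school is credited to the program. An event that affects one school but not the other would still bias this estimate.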

Finally, if participants in this kind of design are randomly assigned to conditions, it becomes a true experiment rather than a quasi experiment. In fact, it is the kind of experiment that Eysenck called for—and that has now been conducted many times—to demonstrate the effectiveness of psychotherapy.

Key Takeaways

- Quasi-experimental research involves the manipulation of an independent variable without the random assignment of participants to conditions or orders of conditions. Among the important types are nonequivalent groups designs, pretest-posttest, and interrupted time-series designs.

- Quasi-experimental research eliminates the directionality problem because it involves the manipulation of the independent variable. It does not eliminate the problem of confounding variables, however, because it does not involve random assignment to conditions. For these reasons, quasi-experimental research is generally higher in internal validity than correlational studies but lower than true experiments.

- Practice: Imagine that two professors decide to test the effect of giving daily quizzes on student performance in a statistics course. They decide that Professor A will give quizzes but Professor B will not. They will then compare the performance of students in their two sections on a common final exam. List five other variables that might differ between the two sections that could affect the results.

- regression to the mean

- spontaneous remission

Image Descriptions

Figure 7.3 image description: Two line graphs charting the number of absences per week over 14 weeks. The first 7 weeks are without treatment and the last 7 weeks are with treatment. In the first line graph, there are between 4 and 8 absences each week. After the treatment, the absences drop to 0 to 3 each week, which suggests the treatment worked. In the second line graph, there is no noticeable change in the number of absences per week after the treatment, which suggests the treatment did not work.

- Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.

- Posternak, M. A., & Miller, I. (2001). Untreated short-term course of major depression: A meta-analysis of studies using outcomes from studies using wait-list control groups. Journal of Affective Disorders, 66, 139–146.

- Eysenck, H. J. (1952). The effects of psychotherapy: An evaluation. Journal of Consulting Psychology, 16, 319–324.

- Smith, M. L., Glass, G. V., & Miller, T. I. (1980). The benefits of psychotherapy. Baltimore, MD: Johns Hopkins University Press.

Nonequivalent groups design: A between-subjects design in which participants have not been randomly assigned to conditions.

Pretest-posttest design: The dependent variable is measured once before the treatment is implemented and once after it is implemented.

History: A category of alternative explanations for differences between scores such as events that happened between the pretest and posttest, unrelated to the study.

Maturation: An alternative explanation that refers to how the participants might have changed between the pretest and posttest in ways that they were going to anyway because they are growing and learning.

Regression to the mean: The statistical fact that an individual who scores extremely on a variable on one occasion will tend to score less extremely on the next occasion.

Spontaneous remission: The tendency for many medical and psychological problems to improve over time without any form of treatment.

Interrupted time-series design: A set of measurements taken at intervals over a period of time that are interrupted by a treatment.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


The use and interpretation of quasi-experimental design

Last updated: 6 February 2023

Reviewed by: Miroslav Damyanov


- What is a quasi-experimental design?

Quasi-experimental design is commonly used in medical informatics (a field that uses digital information to ensure better patient care). Researchers generally use it to evaluate the effectiveness of a treatment, such as a type of antibiotic or psychotherapy, or an educational or policy intervention.

Even though quasi-experimental design has been used for some time, relatively little is known about it. Read on to learn the ins and outs of this research design.


- When to use a quasi-experimental design

A quasi-experimental design is used when it's not logistically feasible or ethical to conduct randomized, controlled trials. As its name suggests, a quasi-experimental design is almost a true experiment. However, researchers don't randomly assign participants to groups in this type of research.

Researchers prefer to apply quasi-experimental design when there are ethical or practical concerns. Let's look at these two reasons more closely.

Ethical reasons

In some situations, random assignment can be unethical. For instance, providing public healthcare to one group and withholding it from another for the sake of research is unethical. A quasi-experimental design would instead compare groups that already differ in their access to care, so the study itself puts no one in physical danger.

Practical reasons

Randomized controlled trials may not be the best approach in research. For instance, it's impractical to trawl through large sample sizes of participants without using a particular attribute to guide your data collection .

Recruiting participants and properly designing a data-collection attribute to make the research a true experiment requires a lot of time and effort, and can be expensive if you don’t have a large funding stream.

A quasi-experimental design allows researchers to take advantage of previously collected data and use it in their study.

- Examples of quasi-experimental designs

Quasi-experimental research design is common in medical research, but any researcher can use it for research that raises practical and ethical concerns. Here are a few examples of quasi-experimental designs used by different researchers:

Example 1: Determining the effectiveness of math apps in supplementing math classes

A school wanted to supplement its math classes with a math app. To select the best app, the school decided to conduct demo tests on two apps before selecting the one they will purchase.

Scope of the research

Since every grade had two math teachers, each teacher used one of the two apps for three months. They then gave the students the same math exams and compared the results to determine which app was more effective.

Reasons why this is a quasi-experimental study

This simple study is a quasi-experiment since the school didn't randomly assign its students to the applications. They used a pre-existing class structure to conduct the study since it was impractical to randomly assign the students to each app.

Example 2: Determining the effectiveness of teaching modern leadership techniques in start-up businesses

A hypothetical quasi-experimental study was conducted in an economically developing country in a mid-sized city.

Five start-ups in the textile industry and five in the tech industry participated in the study. The leaders attended a six-week workshop on leadership style, team management, and employee motivation.

After a year, the researchers assessed the performance of each start-up company to determine growth. The results indicated that the tech start-ups were further along in their growth than the textile companies.

The basis of quasi-experimental research is a non-randomized subject-selection process. This study didn't use specific aspects to determine which start-up companies should participate. Therefore, the results may seem straightforward, but several aspects may determine the growth of a specific company, apart from the variables used by the researchers.

Example 3: A study to determine the effects of policy reforms and of luring foreign investment on small businesses in two mid-size cities

In a study to determine the economic impact of government reforms in an economically developing country, the government decided to test whether creating reforms directed at small businesses or luring foreign investments would spur the most economic development.

The government selected two cities with similar population demographics and sizes. In one of the cities, they implemented specific policies that would directly impact small businesses, and in the other, they implemented policies to attract foreign investment.

After five years, they collected end-of-year economic growth data from both cities. They looked at elements like local GDP growth, unemployment rates, and housing sales.

The study used a non-randomized selection process to determine which city would participate in the research. Researchers left out certain variables that would play a crucial role in determining the growth of each city. They used pre-existing groups of people based on research conducted in each city, rather than random groups.

- Advantages of a quasi-experimental design

Some advantages of quasi-experimental designs are:

Researchers can manipulate variables to help them meet their study objectives.

It offers high external validity, making it suitable for real-world applications, specifically in social science experiments.

Integrating this methodology into other research designs is easier, especially in true experimental research. This cuts down on the time needed to determine your outcomes.

- Disadvantages of a quasi-experimental design

Despite the pros that come with a quasi-experimental design, there are several disadvantages associated with it, including the following:

It has a lower internal validity since researchers do not have full control over the comparison and intervention groups or between time periods because of differences in characteristics in people, places, or time involved. It may be challenging to determine whether all variables have been used or whether those used in the research impacted the results.

There is the risk of inaccurate data since the research design borrows information from other studies.

There is the possibility of bias since researchers select baseline elements and eligibility.

- What are the different quasi-experimental study designs?

There are three distinct types of quasi-experimental designs:

Nonequivalent group design

Regression discontinuity

Natural experiment

The nonequivalent group design is a hybrid of experimental and quasi-experimental methods and is used to leverage the best qualities of the two. Like a true experiment, it compares a treatment group with a control group. However, it relies on pre-existing groups believed to be comparable rather than on randomization, and the lack of randomization is the defining feature of a quasi-experimental design.

Researchers try to limit the influence of confounding variables when forming the groups. This makes the groups more comparable.

Example of a nonequivalent group design

A small study was conducted to determine whether after-school programs result in better grades. Researchers selected two existing, comparable groups of students: one took part in the new program, the other did not. They then compared the results of the two groups.

Regression discontinuity estimates the impact of a specific treatment or intervention. It uses a criterion known as a "cutoff" that assigns treatment according to eligibility.

Researchers often assign participants above the cutoff to the treatment group. Participants just above and just below the cutoff differ only negligibly, so they form closely comparable treatment and control groups.

Example of regression discontinuity

Students must achieve a minimum score to be enrolled in specific US high schools. Since the cutoff score used to determine eligibility for enrollment is arbitrary, researchers can assume that students who only just miss the cutoff and those who barely pass it are very similar, so later differences in their outcomes can be attributed to the schools they attend.

Researchers can then compare the long-term outcomes of these two groups of students to determine the effect of attending certain schools. This information can be applied to increase the chances of students being enrolled in these high schools.

In a natural experiment, researchers do not control which group subjects are assigned to, as they would in a laboratory or field experiment. Instead, nature or an external event or situation assigns subjects to the treatment group in a random or near-random way.

However, even with random assignment, this research design cannot be called a true experiment since the researchers only observe the assignment rather than carry it out. They can still exploit such events despite having no control over the independent variable.

Example of the natural experiment approach

An example of a natural experiment is the 2008 Oregon Health Study.

Oregon intended to allow more low-income people to participate in Medicaid.

Since they couldn't afford to cover every person who qualified for the program, the state used a random lottery to allocate program slots.

Researchers assessed the program's effectiveness by treating the people selected in the lottery as a randomly assigned treatment group, while those who didn't win the lottery were considered the control group.

- Differences between quasi-experiments and true experiments

There are several differences between a quasi-experiment and a true experiment:

Participants in true experiments are randomly assigned to the treatment or control group, while participants in a quasi-experiment are not assigned randomly.

In a quasi-experimental design, the control and treatment groups differ in unknown or unknowable ways, apart from the experimental treatments that are carried out. Therefore, the researcher should try as much as possible to control these differences.

Quasi-experimental designs have several "competing hypotheses," which compete with experimental manipulation to explain the observed results.

Quasi-experiments tend to have lower internal validity (the degree of confidence in the research outcomes) than true experiments, but they may offer higher external validity (whether findings can be extended to other contexts) as they involve real-world interventions instead of controlled interventions in artificial laboratory settings.

Despite the distinct difference between true and quasi-experimental research designs, these two research methodologies share the following aspects:

Both study methods subject participants to some form of treatment or conditions.

Researchers have the freedom to measure some of the outcomes of interest.

Researchers can test whether the differences in the outcomes are associated with the treatment.

- An example comparing a true experiment and quasi-experiment

Imagine you wanted to study the effects of junk food on obese people. Here's how you would do this as a true experiment and a quasi-experiment:

How to carry out a true experiment

In a true experiment, some participants would eat junk foods, while the rest would be in the control group, adhering to a regular diet. At the end of the study, you would record the health and discomfort of each group.

This kind of experiment would raise ethical concerns since the participants assigned to the treatment group are required to eat junk food against their will throughout the experiment. This calls for a quasi-experimental design.

How to carry out a quasi-experiment

In quasi-experimental research, you would start by finding out which participants want to try junk food and which prefer to stick to a regular diet. This allows you to assign these two groups based on subject choice.

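In this case, you didn't assign participants to a particular group, so the study avoids the ethical problem. However, because participants chose their own group, the two groups may differ in other ways that could explain the results.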

When is a quasi-experimental design used?

Quasi-experimental designs are used when researchers cannot use randomization, for ethical or practical reasons, when evaluating their intervention.

What are the characteristics of quasi-experimental designs?

Some of the characteristics of a quasi-experimental design are:

Researchers don't randomly assign participants into groups, but study their existing characteristics and assign them accordingly.

Researchers study the participants in pre- and post-testing to determine the progress of the groups.

Quasi-experimental design avoids the ethical concerns of offering or withholding treatment at random.

Quasi-experimental design encompasses a broad range of non-randomized intervention studies. This design is employed when it is not ethical or logistically feasible to conduct randomized controlled trials. Researchers typically employ it when evaluating policy or educational interventions, or in medical or therapy scenarios.

How do you analyze data in a quasi-experimental design?

You can use two-group tests, time-series analysis, and regression analysis to analyze data in a quasi-experimental design. Each option has specific assumptions, strengths, limitations, and data requirements.
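As a rough sketch of the first option, the snippet below computes a two-group test statistic (Welch's t, which does not assume equal variances) on posttest scores. It also shows a simple pre/post comparison of changes across groups, often called difference-in-differences, which the text above does not name and is included here only as one illustrative way to use pretest data. The scores are invented, and the function names are hypothetical helpers built on the standard library.

```python
import math
import statistics


def welch_t(a, b):
    """Welch's t statistic and approximate degrees of freedom for two samples."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post):
    """Change in the treatment group minus change in the comparison group."""
    treat_change = statistics.mean(treat_post) - statistics.mean(treat_pre)
    ctrl_change = statistics.mean(ctrl_post) - statistics.mean(ctrl_pre)
    return treat_change - ctrl_change


# Hypothetical pretest and posttest scores for two non-randomized groups.
treat_pre = [52, 48, 55, 50, 47, 53]
treat_post = [60, 57, 63, 58, 55, 61]
ctrl_pre = [51, 49, 54, 50, 46, 52]
ctrl_post = [54, 51, 57, 53, 48, 55]

t_stat, df = welch_t(treat_post, ctrl_post)
did = diff_in_diff(treat_pre, treat_post, ctrl_pre, ctrl_post)
print(f"Welch t = {t_stat:.2f} on about {df:.1f} degrees of freedom")
print(f"difference-in-differences = {did:.2f}")
```

Neither number proves causation on its own. The t statistic ignores baseline differences, and the change comparison assumes both groups would have changed by the same amount without the intervention, which is exactly the kind of assumption a quasi-experiment cannot verify by design.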



14 - Quasi-Experimental Research

from Part III - Data Collection

Published online by Cambridge University Press: 25 May 2023

In this chapter, we discuss the logic and practice of quasi-experimentation. Specifically, we describe four quasi-experimental designs – one-group pretest–posttest designs, non-equivalent group designs, regression discontinuity designs, and interrupted time-series designs – and their statistical analyses in detail. Both simple quasi-experimental designs and embellishments of these simple designs are presented. Potential threats to internal validity are illustrated along with means of addressing their potentially biasing effects so that these effects can be minimized. In contrast to quasi-experiments, randomized experiments are often thought to be the gold standard when estimating the effects of treatment interventions. However, circumstances frequently arise where quasi-experiments can usefully supplement randomized experiments or when quasi-experiments can fruitfully be used in place of randomized experiments. Researchers need to appreciate the relative strengths and weaknesses of the various quasi-experiments so they can choose among pre-specified designs or craft their own unique quasi-experiments.

- Quasi-Experimental Research

- By Charles S. Reichardt, Daniel Storage, Damon Abraham

- Edited by Austin Lee Nichols, Central European University, Vienna; John Edlund, Rochester Institute of Technology, New York

- Book: The Cambridge Handbook of Research Methods and Statistics for the Social and Behavioral Sciences

- Online publication: 25 May 2023

- Chapter DOI: https://doi.org/10.1017/9781009010054.015

Research Methodologies Guide

Quasi-Experimental Design

Quasi-Experimental Design is a unique research methodology because it is characterized by what it lacks. For example, Abraham & MacDonald (2011) state:

"Quasi-experimental research is similar to experimental research in that there is manipulation of an independent variable. It differs from experimental research because either there is no control group, no random selection, no random assignment, and/or no active manipulation."

This type of research is often performed in cases where a control group cannot be created or random selection cannot be performed. This is often the case in certain medical and psychological studies.

For more information on quasi-experimental design, review the resources below:

Where to Start

Below are listed a few tools and online guides that can help you start your Quasi-experimental research. These include free online resources and resources available only through ISU Library.

- Quasi-Experimental Research Designs by Bruce A. Thyer. This pocket guide describes the logic, design, and conduct of the range of quasi-experimental designs, encompassing pre-experiments, quasi-experiments making use of a control or comparison group, and time-series designs. An introductory chapter describes the valuable role these types of studies have played in social work, from the 1930s to the present. Subsequent chapters delve into each design type's major features, the kinds of questions it is capable of answering, and its strengths and limitations.

- Experimental and Quasi-Experimental Designs for Research by Donald T. Campbell; Julian C. Stanley. Call Number: Q175 C152e. Written in 1967 but still used heavily today, this book examines research designs for experimental and quasi-experimental research, with examples and judgments about each design's validity.

Online Resources

- Quasi-Experimental Design. From the Web Center for Social Research Methods, this is a very good overview of quasi-experimental design.

- Experimental and Quasi-Experimental Research. From Colorado State University.

- Quasi-experimental design, Wikipedia, the free encyclopedia. Wikipedia can be a useful place to start your research; check the citations at the bottom of the article for more information.

- Last Updated: Sep 11, 2024 11:05 AM

- URL: https://instr.iastate.libguides.com/researchmethods

7.3 Quasi-Experimental Research

Learning Objectives

- Explain what quasi-experimental research is and distinguish it clearly from both experimental and correlational research.

- Describe three different types of quasi-experimental research designs (nonequivalent groups, pretest-posttest, and interrupted time series) and identify examples of each one.

The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. Although the independent variable is manipulated, participants are not randomly assigned to conditions or orders of conditions (Cook & Campbell, 1979). Because the independent variable is manipulated before the dependent variable is measured, quasi-experimental research eliminates the directionality problem. But because participants are not randomly assigned—making it likely that there are other differences between conditions—quasi-experimental research does not eliminate the problem of confounding variables. In terms of internal validity, therefore, quasi-experiments are generally somewhere between correlational studies and true experiments.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment—perhaps a type of psychotherapy or an educational intervention. There are many different kinds of quasi-experiments, but we will discuss just a few of the most common ones here.

Nonequivalent Groups Design

Recall that when participants in a between-subjects experiment are randomly assigned to conditions, the resulting groups are likely to be quite similar. In fact, researchers consider them to be equivalent. When participants are not randomly assigned to conditions, however, the resulting groups are likely to be dissimilar in some ways. For this reason, researchers consider them to be nonequivalent. A nonequivalent groups design, then, is a between-subjects design in which participants have not been randomly assigned to conditions.

Imagine, for example, a researcher who wants to evaluate a new method of teaching fractions to third graders. One way would be to conduct a study with a treatment group consisting of one class of third-grade students and a control group consisting of another class of third-grade students. This would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. For example, the parents of higher achieving or more motivated students might have been more likely to request that their children be assigned to Ms. Williams’s class. Or the principal might have assigned the “troublemakers” to Mr. Jones’s class because he is a stronger disciplinarian. Of course, the teachers’ styles, and even the classroom environments, might be very different and might cause different levels of achievement or motivation among the students. If at the end of the study there was a difference in the two classes’ knowledge of fractions, it might have been caused by the difference between the teaching methods—but it might have been caused by any of these confounding variables.

Of course, researchers using a nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables. But without true random assignment of the students to conditions, there remains the possibility of other important confounding variables that the researcher was not able to control.
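One way a researcher might check whether two classes really do have similar standardized math scores is to compute a standardized mean difference on the pretest. The sketch below is only an illustration: the scores are invented, the class labels borrow the teacher names from the example above, and `standardized_mean_difference` is a hypothetical helper, not a method prescribed by the text.

```python
import math
import statistics


def standardized_mean_difference(group_a, group_b):
    """Difference in means divided by the pooled standard deviation."""
    pooled_var = (statistics.variance(group_a) + statistics.variance(group_b)) / 2
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical standardized math test scores collected before the intervention.
williams_pretest = [71, 68, 75, 80, 66, 73, 78, 70]
jones_pretest = [69, 72, 74, 77, 65, 71, 79, 68]

smd = standardized_mean_difference(williams_pretest, jones_pretest)
print(f"standardized mean difference on pretest: {smd:.2f}")
```

A value near zero suggests the classes are similar on this one measure. It says nothing about variables that were not measured, such as motivation or teaching style, so the possibility of uncontrolled confounding variables remains.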

Pretest-Posttest Design